Enterprises nowadays are keen on adopting a microservices architecture, given its agility and flexibility. Containers and the rise of Kubernetes — the go-to container orchestration tool — made the transformation from monolith to microservices easier for them.

However, a new set of challenges emerged while using microservices architecture at scale:

- It became hard for DevOps teams and architects to manage traffic between services

- As microservices are deployed across multiple clusters and clouds, data leaves the firewall perimeter and becomes vulnerable, so security becomes a major concern

- Getting overall visibility into the network topology became a nightmare for SREs

Implementing new security tools, or tuning existing API gateways or Ingress controllers, is only a patchwork fix and not a complete solution to these problems.

What architects need is a radical rethinking of their infrastructure to deal with their growing networking, security, and observability challenges. That is where the concept of a service mesh comes in.

What is a service mesh?

A service mesh moves service-to-service communication out of the application layer and into the infrastructure layer. This abstraction is achieved by proxying the traffic between services (see Fig. A).

Fig A – Service-to-service communication before and after service mesh implementation

The proxy is deployed alongside the application as a sidecar container. It intercepts all traffic going in and out of the service and applies advanced traffic management and security features. On top of that, a service mesh provides observability into the overall network topology.

In a service mesh architecture, the mesh of proxies is called the data plane, and the controller responsible for configuring and managing the data plane proxies is called the control plane.

Why do you need a service mesh for Kubernetes?

When starting out, most DevOps teams have only a handful of services to deal with. As applications scale and the number of services increases, managing networking and security becomes complex.

Tedious security compliance

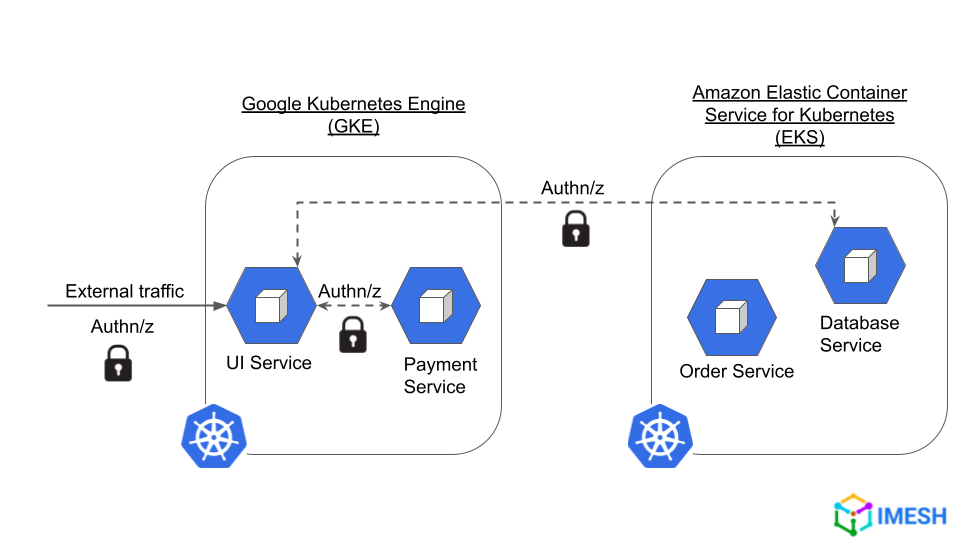

Applications deployed in multiple clusters from different cloud vendors talk to each other over the network. It is essential for such traffic to comply with certain standards to keep out intruders and to ensure secure communication.

The problem is that security policies are typically cluster-local and do not work across cluster boundaries. This points to a need for a solution that can enforce consistent security policies across clusters.

Chaotic network management

DevOps engineers often need to control the traffic flow to services, for example to perform canary deployments. They also want to test the resiliency and reliability of the system by injecting faults and implementing circuit breakers.

Achieving this kind of granular control over the network requires DevOps engineers to write and maintain a lot of configuration and scripts in Kubernetes and the cloud environment.

Lack of visibility into the network

With applications distributed over a network and communications happening between them, it becomes hard for SREs to keep track of the health and performance of the network infrastructure. This severely impedes their ability to identify and troubleshoot network issues.

Implementing a service mesh solves the above problems by providing features that make managing applications deployed to Kubernetes far less painful.

Key features of service mesh in Kubernetes

A service mesh acts as a centralized platform for networking, security, and observability for microservices deployed in Kubernetes.

Centralized security

With a service mesh, security compliance is easier to achieve as it can be done from a central plane instead of configuring it per service. A service mesh platform can enforce consistent security policies that work across cluster boundaries.

Service mesh provides granular authentication, authorization, and access control for applications in the mesh.

- Authentication: mutual TLS (mTLS) and JSON Web Token (JWT) validation

- Authorization: Policies can be set to allow, deny, or perform custom actions against an incoming request

- Access control: RBAC policies that can be set on method, service, and namespace levels
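To make the allow/deny model above concrete, here is a minimal Python sketch of how a proxy might evaluate policies scoped by namespace, service, and method. The `Request` and `Rule` types, the deny-first ordering, and the default-deny fallback are illustrative assumptions, not the API or exact semantics of any particular service mesh.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Request:
    source_namespace: str
    service: str
    method: str


@dataclass(frozen=True)
class Rule:
    action: str                      # "ALLOW" or "DENY"
    namespace: Optional[str] = None  # None matches any value
    service: Optional[str] = None
    method: Optional[str] = None

    def matches(self, req: Request) -> bool:
        return (
            self.namespace in (None, req.source_namespace)
            and self.service in (None, req.service)
            and self.method in (None, req.method)
        )


def is_allowed(rules: Sequence[Rule], req: Request) -> bool:
    """Deny rules win; otherwise at least one allow rule must match."""
    if any(r.action == "DENY" and r.matches(req) for r in rules):
        return False
    return any(r.action == "ALLOW" and r.matches(req) for r in rules)


# Hypothetical policy set: the frontend namespace may read orders,
# and nobody may delete them.
rules = [
    Rule("ALLOW", namespace="frontend", service="orders", method="GET"),
    Rule("DENY", service="orders", method="DELETE"),
]
```

Because the rules live in one place and are evaluated by the proxy, changing who may call a service does not require touching the service's own code.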

Advanced networking and resilience testing

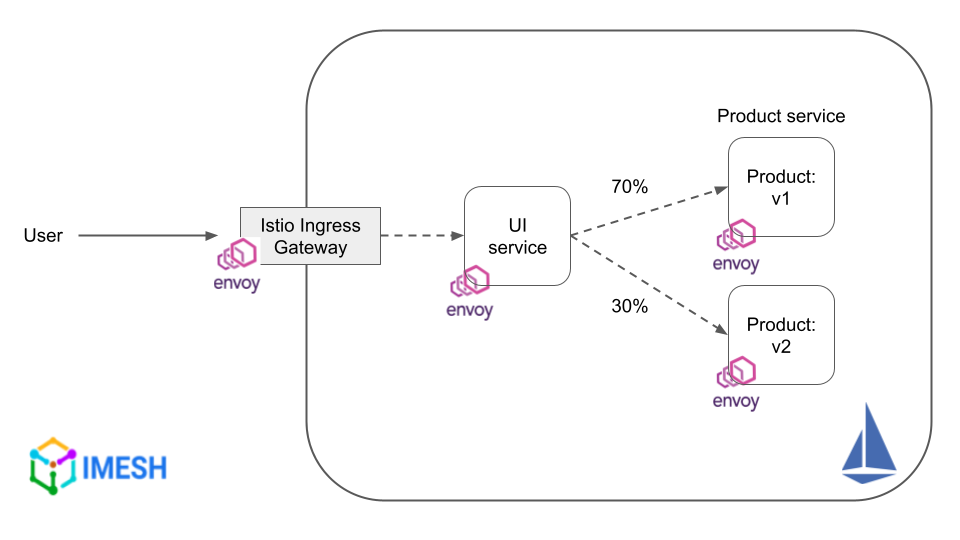

A service mesh provides granular control over the traffic flow between services. DevOps engineers can split traffic across service versions or route it based on assigned weights.
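Weight-based routing can be pictured with a short Python sketch. It is only a simulation of the idea: the version names `reviews-v1` and `reviews-v2` and the 90/10 split are made up for illustration, and a real mesh proxy makes this choice per request inside the data plane.

```python
import random
from collections import Counter
from typing import Mapping


def pick_backend(weights: Mapping[str, int], rng: random.Random) -> str:
    """Choose a backend version with probability proportional to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical split: roughly 90% of requests to v1, 10% to v2.
split = {"reviews-v1": 90, "reviews-v2": 10}
rng = random.Random(42)  # seeded only so repeated runs behave the same
counts = Counter(pick_backend(split, rng) for _ in range(10_000))
print(counts)
```

Over many requests, the share of traffic each version receives approaches its configured weight, which is what makes gradual rollouts predictable.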

Besides, service mesh provides the following features to test the resiliency of the infrastructure with little work:

- Fault injection

- Timeouts

- Retries

- Circuit breaking

- Mirroring
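As an illustration of one item in this list, the following Python sketch shows the basic logic of circuit breaking: after a number of consecutive failures, calls are rejected immediately for a cool-down period, then a single trial call is allowed. The class name, thresholds, and half-open behavior are assumptions chosen for clarity and do not mirror any specific proxy's implementation.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the backend."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("backend temporarily ejected")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single failure now re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success resets the failure count
        return result
```

In a mesh, this logic runs in the sidecar proxy, so every service gets the same protection without each team implementing it in application code.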

Unified observability

Implementing service mesh helps SREs and Ops teams to have centralized visibility into the health and performance of applications within the mesh. Service mesh provides the following telemetry for observability and real-time visibility:

- Metrics: To monitor performance and see latency, traffic, errors, and saturation.

- Distributed tracing: To understand requests’ lifecycle and analyze service dependency and traffic flow.

- Access logs: To audit service behavior.
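The metrics above are typically derived from per-request data that the proxies already see. The Python sketch below computes three of them (traffic, errors, and latency) from a list of access log entries. The `AccessLogEntry` fields and the choice of the 95th percentile are assumptions for illustration; saturation is left out because it needs resource data that access logs do not carry.

```python
from dataclasses import dataclass
from statistics import quantiles
from typing import Sequence


@dataclass(frozen=True)
class AccessLogEntry:
    service: str
    status: int        # HTTP status code of the response
    latency_ms: float


def summarize(entries: Sequence[AccessLogEntry], window_seconds: float) -> dict:
    """Return request rate, error rate, and p95 latency for a time window."""
    total = len(entries)
    errors = sum(1 for e in entries if e.status >= 500)
    latencies = [e.latency_ms for e in entries]
    if total >= 2:
        # n=20 yields 19 cut points; the last one is the 95th percentile.
        p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    else:
        p95 = latencies[0] if latencies else 0.0
    return {
        "requests_per_second": total / window_seconds,
        "error_rate": errors / total if total else 0.0,
        "p95_latency_ms": p95,
    }
```

In practice a monitoring system performs this aggregation continuously, but the inputs are the same: one record per request, emitted by the proxy rather than the application.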

Top service mesh software to consider for Kubernetes

Various service mesh offerings are available on the market, such as Istio, Linkerd, HashiCorp Consul, Kong Kuma, Google Anthos (built on Istio), and VMware Tanzu.

However, over 90% of users use either Istio or Linkerd because of their strong and vibrant open-source ecosystems for innovation and support.

Istio

Istio is the most popular CNCF-graduated open-source service mesh software available. It uses Envoy proxies as sidecars, while the control plane manages and configures them (see Fig. B).

Fig B – Istio sidecar architecture

Istio provides networking, security, and observability features for applications at scale. Developers from Google, Microsoft, IBM, and others actively contribute to the Istio project.

We have compiled an Istio deep dive document that covers Istio’s architecture, components, features, and benefits. Get the free document if you would also like to see how Envoy proxy sidecar injection works, and how it manipulates iptables and abstracts networking in Kubernetes systems: Deep Dive into Istio Architecture and Components.

Linkerd

Linkerd is a lightweight, open-source service mesh developed by Buoyant. It provides the basic features of a service mesh, and its control plane consists of a destination service, an identity service, and a proxy injector (see Fig. C).

Fig C – Linkerd architecture

More than 80% of the contributions to Linkerd come from Buoyant, the company that created it.

(To see a detailed comparison between the two and choose one for your Kubernetes deployments, head to Istio vs Linkerd: The Best Service Mesh for 2023.)

Benefits of service mesh for Kubernetes

Below are some benefits enterprises would reap by implementing service mesh in Kubernetes.

100% network and data security

A service mesh helps maintain a zero trust network, where requests are continuously authenticated and authorized before being processed. DevOps engineers can implement features such as mTLS, which works cluster-wide and across cluster boundaries (see Fig. D).

Fig D – Zero trust network implementation

A zero trust network helps maintain a secure infrastructure in today's dynamic threat landscape, which is filled with attacks such as man-in-the-middle (MITM) and denial of service (DoS).

(If you are interested in learning more, check out this article: Zero Trust Network for Microservices with Istio.)

80% reduction in change failure rate

Nowadays, enterprises release applications to a small set of live users before a complete rollout. This helps DevOps engineers and SREs analyze application performance, identify bugs, and avoid potential downtime.

Canary and blue/green deployments are two such deployment strategies. The fine-grained traffic controls provided by a service mesh, including splitting traffic based on weights (see Fig. F), make it easier for DevOps engineers to perform them.

Fig F – Canary deployment with Istio service mesh
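A canary rollout can be thought of as a loop that raises the canary's traffic weight while it stays healthy and sends all traffic back to the stable version when it does not. The Python sketch below captures that decision logic only. The 10-point step, the 1% error threshold, and the function names are hypothetical, and in a real setup a rollout tool would apply the resulting weight to the mesh's routing configuration.

```python
from typing import Iterable, List


def next_canary_weight(current: int, error_rate: float,
                       step: int = 10, max_error_rate: float = 0.01) -> int:
    """Return the next canary traffic percentage, or 0 to roll back."""
    if error_rate > max_error_rate:
        return 0
    return min(100, current + step)


def rollout(observed_error_rates: Iterable[float], start: int = 10) -> List[int]:
    """Replay a rollout against a sequence of observed canary error rates."""
    weight = start
    history = [weight]
    for rate in observed_error_rates:
        weight = next_canary_weight(weight, rate)
        history.append(weight)
        if weight in (0, 100):  # rolled back or fully promoted
            break
    return history
```

A failed canary affects only the fraction of users routed to it at that step, which is the mechanism behind the lower change failure rate described in this section.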

(Learn how to perform canary release with Istio and Argo Rollouts: How to implement Canary for Kubernetes apps using Istio.)

99.99% available and resilient infrastructure

The telemetry data provided by service mesh software helps SREs and Ops teams to identify and respond to bugs/threats quickly.

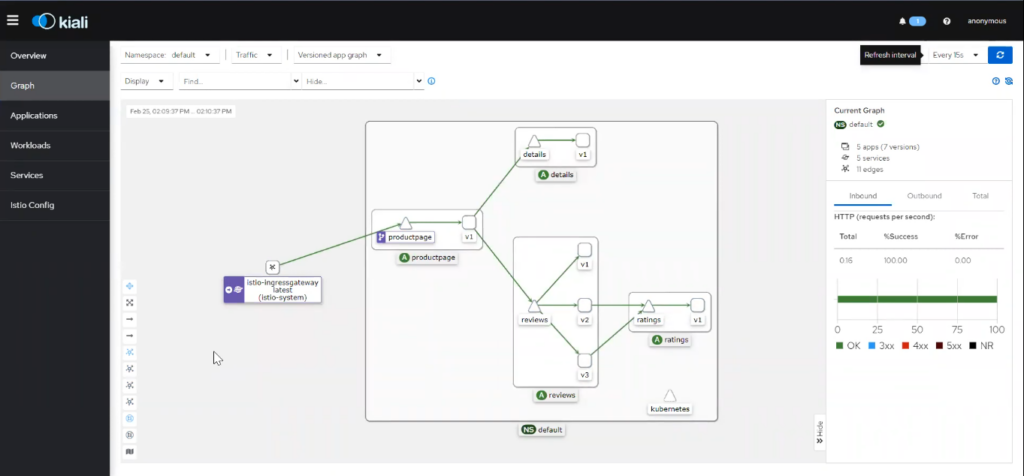

Most service mesh software integrates with monitoring tools such as Prometheus, Grafana, Kiali, and Jaeger, and the dashboards they provide (see Fig. G) help operators visualize the health, performance, and behavior of the services.

Fig G – Kiali service graph

Apart from that, DevOps engineers can check the fault tolerance of the system by manually injecting errors and thus simulating various failure scenarios.
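The idea of injecting errors can be sketched in Python as a wrapper that delays or aborts a percentage of calls before they reach the real handler. The parameter names (`abort_percent`, `delay_percent`, and so on) and the `InjectedFault` exception are hypothetical; a service mesh applies the equivalent behavior in the proxy through configuration, without changing the application.

```python
import random
import time


class InjectedFault(Exception):
    """Stands in for an HTTP error response produced by the proxy."""

    def __init__(self, status):
        super().__init__(f"injected HTTP {status}")
        self.status = status


def with_faults(handler, abort_percent=0.0, abort_status=503,
                delay_percent=0.0, delay_seconds=0.0,
                rng=None, sleep=time.sleep):
    """Wrap a handler so a share of calls is delayed and/or aborted."""
    rng = rng or random.Random()

    def wrapped(*args, **kwargs):
        if rng.random() < delay_percent / 100:
            sleep(delay_seconds)              # simulate a slow upstream
        if rng.random() < abort_percent / 100:
            raise InjectedFault(abort_status)  # simulate a failing upstream
        return handler(*args, **kwargs)

    return wrapped
```

Wrapping a dependency this way in a test environment shows whether callers' timeouts, retries, and fallbacks behave as intended when that dependency degrades.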

(Watch how to configure Istio with Prometheus and Grafana: Configuring Istio with Prometheus | Grafana | Metrics Monitoring)

5x improvement in developer experience

Most application developers do not enjoy configuring the network and security logic in their applications. Their focus tends to be on business logic and building features.

Implementing a service mesh reduces developer toil, since developers can concentrate on application code. They can offload network and security configuration completely to the service mesh at the infrastructure level. This separation helps developers focus on their core responsibility: delivering business logic.

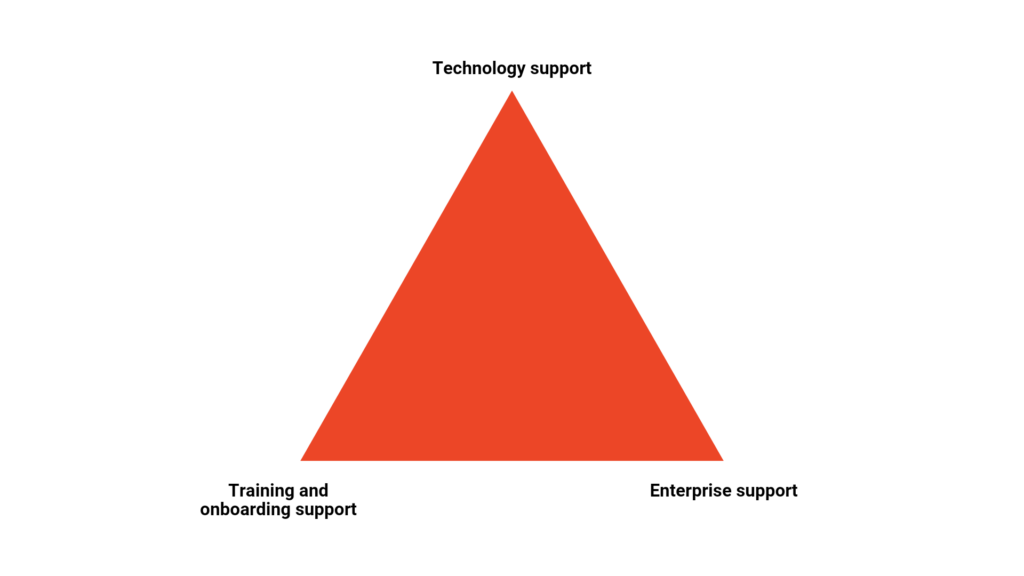

3 pillars for successful implementation of service mesh

Since service mesh is a radical concept, it can be overwhelming for enterprises to successfully implement and realize its value. If you are an architect or CIO, you would want to consider the following 3 pillars for successful service mesh implementation.

Technology support

It is important to evaluate service mesh software from a technology support perspective. If you are a mature DevOps organization using various open-source tools and open standards in your CI/CD process, make sure the service mesh software integrates well with your CI/CD tools, whichever versions you run.

For example, if you are using Argo CD for GitOps deployment or Prometheus for monitoring, the service mesh software should integrate with them with minimal manual intervention.

Enterprise support

Open-source software adoption is on the rise, and enterprises need dependable software support to make sure their IT stays available for the business.

- Choose service mesh software that is backed by a large community (great for support).

- Ensure there are third-party vendors that can provide 24/7 support with a fixed SLA.

- If you plan to go with Istio and feel stuck at any point, go through this Istio service mesh vendor comparison guide for enterprises.

Training and onboarding support

Ensure there are adequate reading materials, documents, and videos available to help users learn the service mesh software. Adopting a service mesh makes little sense if internal teams such as DevOps engineers and SREs are not able to use it.

Finally, a service mesh is not just software but an application operation pattern. Do not rush your project. Rather, research and evaluate the service mesh that best suits your organizational requirements and needs.

Service mesh is the way forward for Kubernetes workloads

The goal of service mesh implementation is to make managing applications deployed in Kubernetes easier. Adopting it will become a necessity as services start to overflow from a single cluster to multiple clusters. With Kubernetes adoption on the rise, service mesh will eventually become a critical component in most organizations.