In a previous article, we explained the two Kubernetes custom resources, virtual services and destination rules, that form the core of Istio’s traffic management and network resiliency features. We described what they are and how they work.

(Read about them here in case you missed it: What are Istio Virtual Services and Destination Rules?)

Virtual services and destination rules help DevOps engineers and cloud architects apply granular routing rules and direct traffic around the mesh. They also provide features to ensure and test network resiliency, so that applications operate reliably. In this article, we will explore both sets of features: traffic routing and network resilience testing.

Traffic routing in CI/CD with Istio

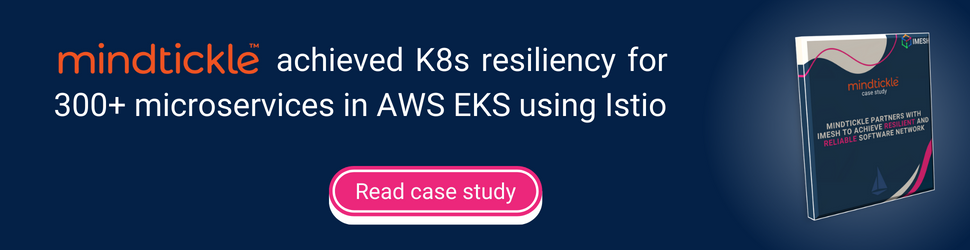

Istio can split traffic between services or service subsets with ease. Traffic is split according to the weights (percentages) defined in the virtual service, while the subsets themselves are defined in the corresponding destination rule (refer to the image below).

Traffic splitting between different versions of a service using Istio

Istio’s traffic splitting capability gives DevOps engineers and cloud architects granular control over how traffic is routed to different versions of a service. The feature is especially useful for canary and blue/green deployments.

Canary deployments with Istio

A canary deployment is a software release strategy in which only a fraction of live traffic is routed to the newly released version of a service. If the new version proves as stable and performant as the existing one, more traffic is routed to it and the older version is phased out gradually.

Canary deployments allow controlled release and help organizations minimize the impact of potential bugs or issues during releases.

Istio provides two ways to carry out canary rollouts:

- The canary and the stable version are deployed as two separate services, and Istio splits traffic between them.

- The canary and the stable version are deployed as subsets of a single service, and Istio splits traffic between the subsets.
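As a rough illustration of the first approach, the sketch below splits traffic 90/10 between two separate Kubernetes services. The service name `istio-support-canary`, the resource name, and the weights are assumptions made for this example only; they are not taken from a real deployment.

```yaml
# Minimal sketch: canary rollout across two separate services.
# `istio-support-canary` is a hypothetical service running the new version.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: istio-support-canary-split   # hypothetical name
spec:
  hosts:
  - istio-support
  http:
  - route:
    - destination:
        host: istio-support          # stable version
      weight: 90
    - destination:
        host: istio-support-canary   # canary version
      weight: 10
```

If the canary behaves well, the weights can be shifted gradually (for example 75/25, then 50/50) until the canary receives all traffic.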

We have covered a tutorial on implementing canary using Istio and Argo CD Rollouts. Check it out here: How to implement Canary for Kubernetes apps using Istio.

Blue/green deployments using Istio

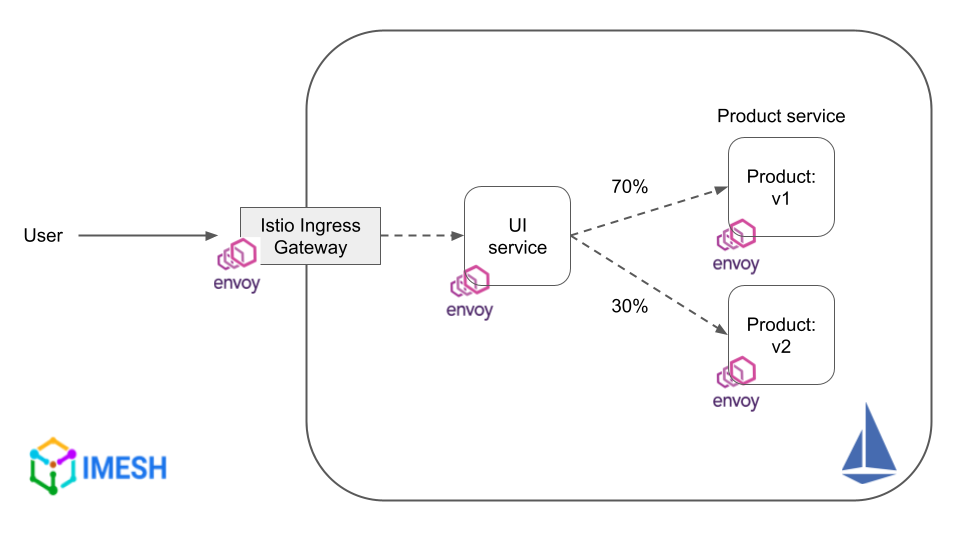

Blue/green deployment is another progressive delivery strategy in which a new version (green) of a service runs in parallel with the existing version (blue). The load balancer switches production traffic to the new version (refer to the image below), and traffic is rolled back to the older version if the new release has any issues.

Blue/green deployment with Istio

Blue/green deployments help minimize application downtime by making it possible to roll back to the older version instantly. The older version acts as a reliable backup during the deployment process. Because Istio can split traffic between service subsets without any change to the application code, it makes blue/green deployments straightforward.

The following sample `VirtualService` implements a blue/green strategy for the `istio-support` service. All incoming traffic is routed to the `green` version (`weight: 100`), while the `blue` version (`weight: 0`) remains a backup, handling zero requests.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-support
    spec:
      hosts:
      - istio-support
      http:
      - route:
        - destination:
            host: istio-support
            subset: green
          weight: 100
        - destination:
            host: istio-support
            subset: blue
          weight: 0
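The `green` and `blue` subsets referenced by the `VirtualService` above have to be defined in a destination rule. A minimal sketch of such a rule follows; the `version: green` and `version: blue` pod labels are assumptions for illustration, so substitute whatever labels your deployments actually carry.

```yaml
# Minimal sketch: subsets backing the blue/green VirtualService.
# The `version` label values are hypothetical.
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: istio-support
spec:
  host: istio-support
  subsets:
  - name: green
    labels:
      version: green   # pods running the new release
  - name: blue
    labels:
      version: blue    # pods running the existing release
```

Rolling back then amounts to swapping the two weights in the `VirtualService`, with no change to the destination rule.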

Network resilience and testing with Istio

Apart from implementing routing rules for different scenarios, Istio provides opt-in failure recovery and fault injection features that help enterprises maintain a resilient infrastructure. These features prevent localized failures from cascading to other nodes and help DevOps engineers and architects meet SLOs such as error rate, latency, and uptime.

Some of the network resilience and testing features provided by Istio are circuit breakers, timeouts, retries, fault injection, and traffic mirroring.

Circuit breaking

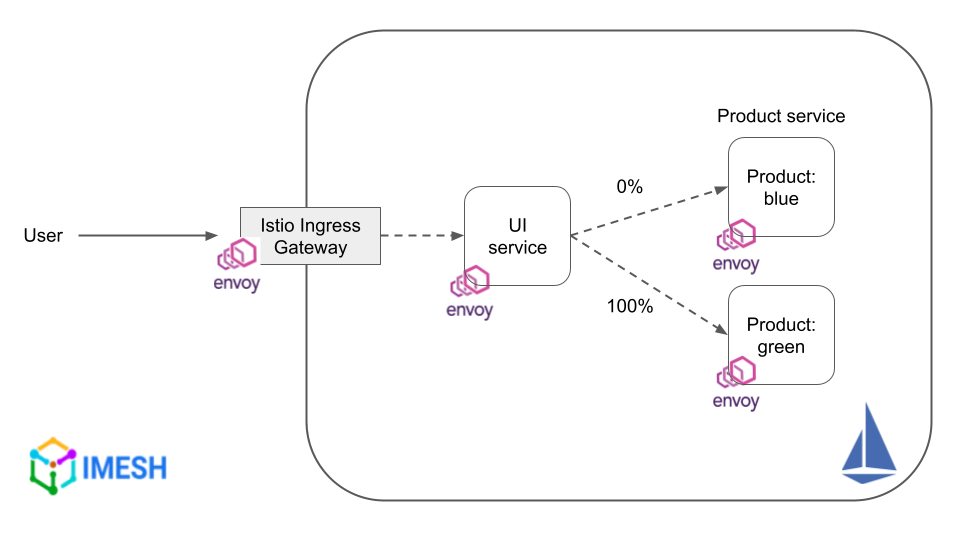

In a web application, circuit breakers limit the number of concurrent connections to a service and prevent it from being overloaded with requests. They are highly useful for B2B and B2C SaaS applications, where service responsiveness can make or break the customer experience.

With circuit breaking, if the number of connection requests to an upstream service exceeds the specified limit, the excess requests wait in a pending queue. If the number of pending requests also exceeds its limit, further requests are denied until the pending ones are processed.

The circuit breaker trips so that client requests fail quickly, instead of exhausting the service and cascading the failure to the overall system. Istio configures circuit breakers in the `DestinationRule` resource.

Here is a sample `DestinationRule` with circuit breaker rules:

    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: istio-support
    spec:
      host: istio-support
      trafficPolicy:
        connectionPool:
          tcp:
            maxConnections: 1
          http:
            http1MaxPendingRequests: 1
            maxRequestsPerConnection: 1
        outlierDetection:
          consecutive5xxErrors: 1
          interval: 1s
          baseEjectionTime: 3m
          maxEjectionPercent: 100

- The above `DestinationRule` limits both the maximum number of connections and the maximum number of pending requests to the `istio-support` service to 1.

- For example, if `istio-support` receives 3 requests simultaneously, one request will establish a connection, one will wait in the queue, and the third, along with any additional requests, will be denied until the pending one is processed (refer to the image below).

Circuit breaking with Istio

- The `outlierDetection` section towards the end defines the rules for ejecting unhealthy pods from the load balancing pool. Here, if any pod of `istio-support` returns a single 5xx (server) error, the pod is considered an outlier, or unhealthy. It is then ejected for at least 3 minutes (`baseEjectionTime`) before being allowed to rejoin the load balancing pool.

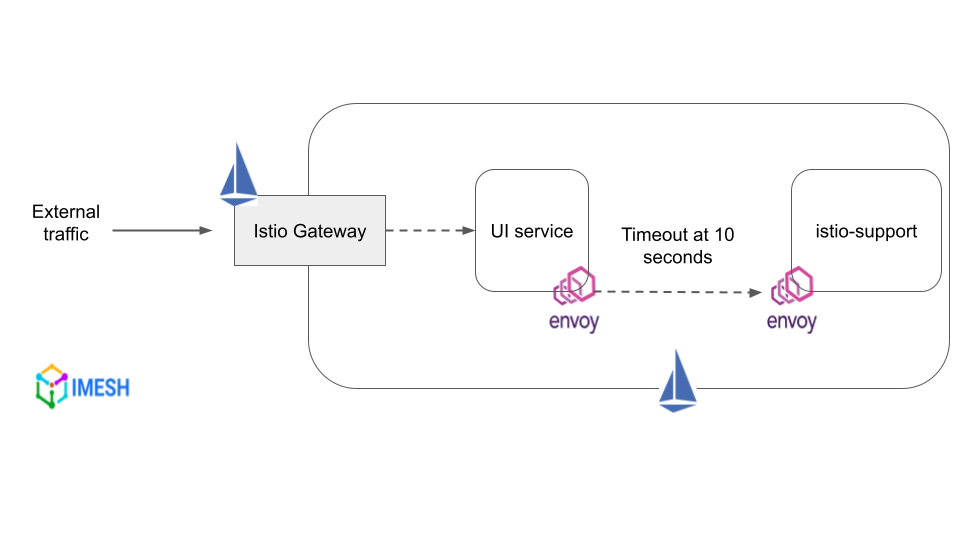

Timeouts

A timeout is the amount of time the Envoy proxy of the source service should wait for a response from the destination service. It guarantees that a call either succeeds or fails within a specific timeframe, so that services do not wait indefinitely for a response.

Timeout with Istio

You can implement timeouts in your environment using Istio, which lets you create timeout policies and apply them at the source Envoy sidecars.

Below is a sample `VirtualService` that configures a 10-second timeout for requests to the `istio-support` service. In other words, calls to `istio-support` will either succeed or fail within 10 seconds.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-support
    spec:
      hosts:
      - istio-support
      http:
      - route:
        - destination:
            host: istio-support
        timeout: 10s

- Timeouts should be neither too short nor too long. Timeouts that are too short result in unnecessarily failed requests, especially when upstream services face transient issues, such as a temporarily overloaded network. Timeouts that are too long increase latency, especially if the call waits for a response from a failed service.

- To configure timeouts on traffic to a destination outside the mesh, first add the destination service to Istio’s internal service registry using a `ServiceEntry` resource. Virtual service rules can then be applied to that traffic.

- With Istio, you can easily configure timeouts on traffic to any specific service or subset at runtime.
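The external-destination case mentioned above could look roughly like the following sketch. The host `api.example.com`, the resource names, the port, and the 3-second timeout are all placeholders chosen for illustration; adjust them to the external service you actually call.

```yaml
# Minimal sketch: register an external host, then apply a timeout to it.
# `api.example.com` and both resource names are hypothetical.
apiVersion: networking.istio.io/v1alpha3
kind: ServiceEntry
metadata:
  name: external-api
spec:
  hosts:
  - api.example.com
  location: MESH_EXTERNAL
  ports:
  - number: 80
    name: http
    protocol: HTTP
  resolution: DNS
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: external-api-timeout
spec:
  hosts:
  - api.example.com
  http:
  - timeout: 3s              # calls to the external host fail after 3 seconds
    route:
    - destination:
        host: api.example.com
```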

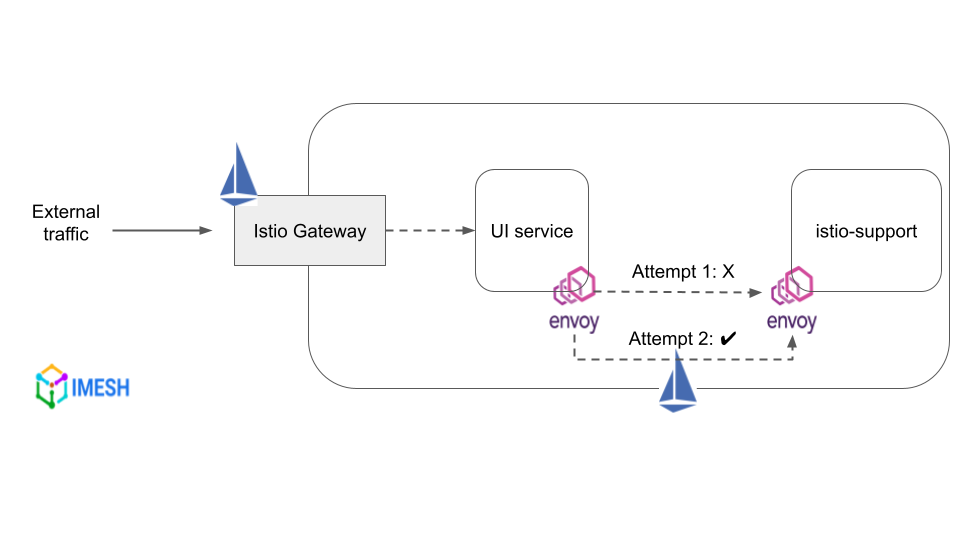

Retries

The retry setting specifies the maximum number of times an Envoy proxy should attempt to connect to a service if the initial request fails. It helps improve service availability when services face temporary issues such as resource contention or network problems.

Retries with Istio

The following `VirtualService` sets the maximum number of retries to 4 for calls to the `istio-support` service.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-support
    spec:
      hosts:
      - istio-support
      http:
      - route:
        - destination:
            host: istio-support
        retries:
          attempts: 4
          perTryTimeout: 2s

- In the above resource, `attempts` represents the maximum number of retries allowed for a given request. Once the retries are exhausted, the request fails and the error is returned to the caller; repeated failures may also count toward any `outlierDetection` rule configured for the destination.

- `perTryTimeout` defines the timeout per attempt, including the initial call and any retries.

- A `retryOn` subfield can be added under the `retries` field to set the conditions under which a retry takes place. Each condition is either a named retry policy (such as `connect-failure`) or an HTTP status code, and one or more conditions can be given as a comma-separated list.

For example, `retryOn: connect-failure,refused-stream,503` means that Istio will initiate a retry if the connection to the upstream service fails, if the upstream refuses the stream, or if it returns an HTTP 503 status code.
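Putting the pieces together, the retry rule from earlier with the `retryOn` conditions described above might be written as in this sketch. The values simply reuse the ones already discussed and are not a recommendation.

```yaml
# Minimal sketch: the earlier retry rule extended with `retryOn`.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: istio-support
spec:
  hosts:
  - istio-support
  http:
  - route:
    - destination:
        host: istio-support
    retries:
      attempts: 4
      perTryTimeout: 2s
      # Retry only on connection failures, refused streams, or HTTP 503.
      retryOn: connect-failure,refused-stream,503
```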

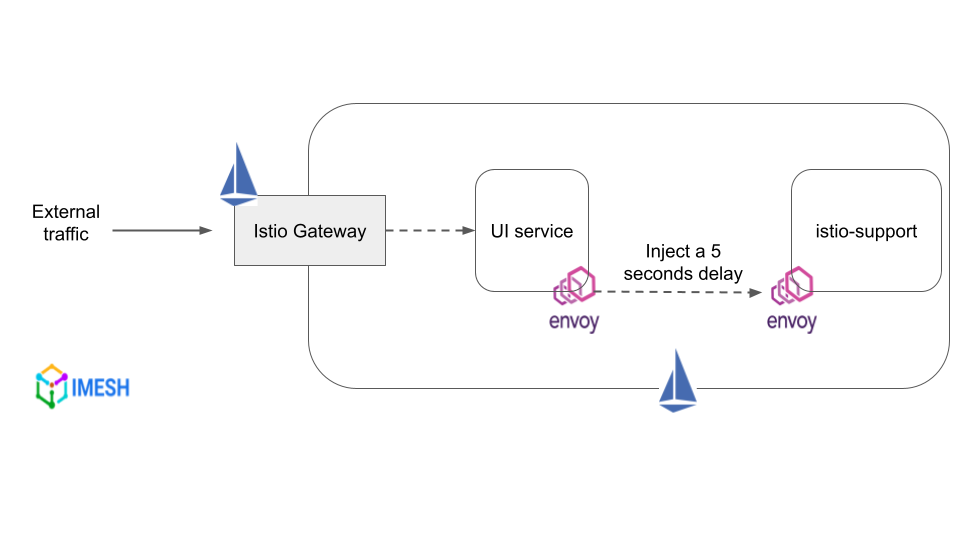

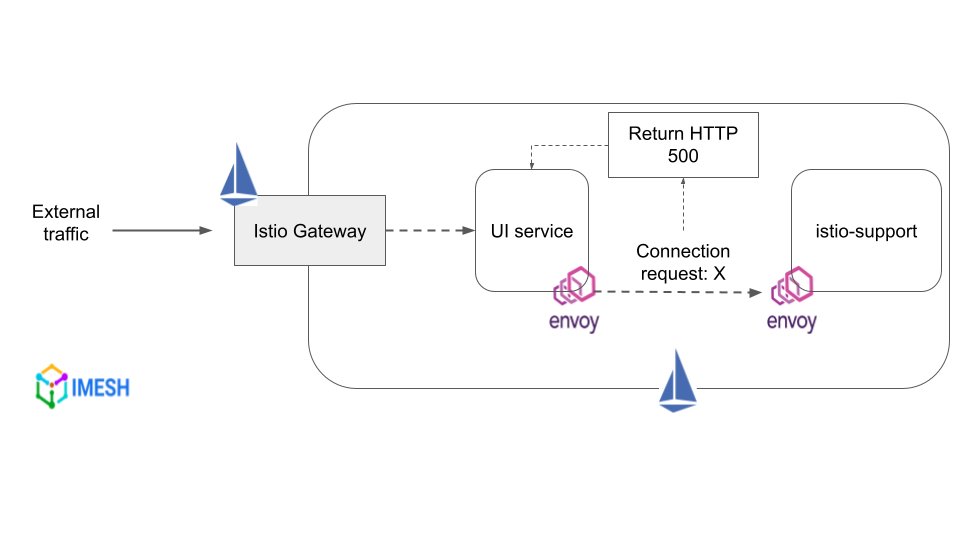

Fault injection

Fault injection is a testing method that deliberately introduces errors while forwarding HTTP requests to the destination specified in a route. Istio lets DevOps engineers and cloud architects test the resiliency and failure recovery capacity of applications by injecting faults.

With Istio, faults can be injected at the application layer. That is, more relevant failures can be injected, such as HTTP error codes, instead of killing pods, delaying packets, or corrupting packets at the TCP layer.

Istio lets users inject two types of faults using `VirtualService` resource:

- Delays: Used to delay requests to upstream services and simulate network latency or an overloaded upstream service.

Fault injection by delaying requests using Istio

- Aborts: Used to abort HTTP request attempts and return error codes to downstream service, in order to simulate a faulty upstream service.

Fault injection by aborting request forwarding using Istio

The following `VirtualService` injects a 5-second delay into all requests going to the `istio-support` service.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-support-delay
    spec:
      hosts:
      - istio-support
      http:
      - fault:
          delay:
            percentage:
              value: 100   # delay every request
            fixedDelay: 5s
        # an HTTP rule also needs a destination to forward the delayed requests to
        route:
        - destination:
            host: istio-support

Similarly, the `VirtualService` resource below configures the `istio-support` service to return an HTTP 500 error for each received request.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-support-500
    spec:
      hosts:
      - istio-support
      http:
      - route:
        - destination:
            host: istio-support
        fault:
          abort:
            percentage:
              value: 100   # abort every request
            httpStatus: 500

Note: A fault rule must have a delay, an abort, or both. When both are specified, the delay and abort faults are independent of each other.
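To illustrate the note above, here is a sketch of a single rule that carries both fault types. The resource name and the 50% and 10% values are arbitrary choices for this example.

```yaml
# Minimal sketch: delay and abort faults in one rule.
# The name and percentages are hypothetical.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: istio-support-faults
spec:
  hosts:
  - istio-support
  http:
  - fault:
      delay:
        percentage:
          value: 50        # delay half of the requests by 5 seconds
        fixedDelay: 5s
      abort:
        percentage:
          value: 10        # abort a tenth of the requests with HTTP 500
        httpStatus: 500
    route:
    - destination:
        host: istio-support
```

Using partial percentages like these lets you observe how callers cope with intermittent failures rather than a total outage.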

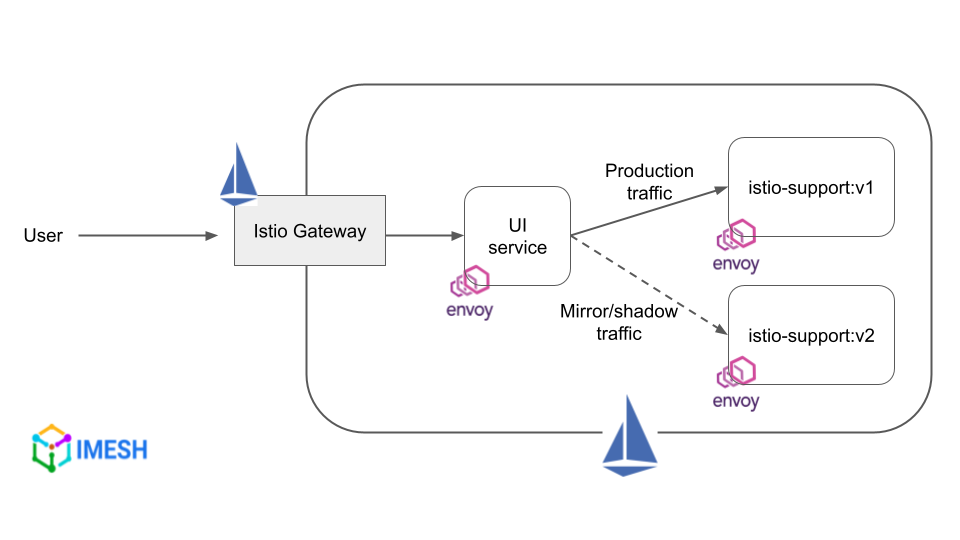

Traffic mirroring

Traffic mirroring refers to sending a copy of the live traffic to a mirrored service. It is useful in testing, monitoring, and analyzing a newly deployed application, before releasing and routing production traffic to it.

Traffic mirroring with Istio

The mirrored traffic does not affect the performance of the primary service, as it is separate from the main flow of requests served by the primary service. Also, responses from mirrored services are discarded.

The following route rule sends 100% of the traffic to the `v1` subset of `istio-support` and mirrors every request to the `v2` subset.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: istio-support-mirror
    spec:
      hosts:
      - istio-support
      http:
      - route:
        - destination:
            host: istio-support
            subset: v1
          weight: 100
        mirror:
          host: istio-support
          subset: v2
        mirrorPercentage:
          value: 100.0
    ---
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: istio-support
    spec:
      host: istio-support
      subsets:
      - name: v1
        labels:
          version: v1
      - name: v2
        labels:
          version: v2

The `value` field under `mirrorPercentage` allows users to further control the traffic by sending a fraction of the requests, instead of mirroring all requests.
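For instance, a sketch that mirrors roughly one request in ten could look like this. It assumes the `v1` and `v2` subsets from the destination rule above; the 10% figure is an arbitrary example.

```yaml
# Minimal sketch: mirror only a fraction of live traffic to v2.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: istio-support-mirror
spec:
  hosts:
  - istio-support
  http:
  - route:
    - destination:
        host: istio-support
        subset: v1
      weight: 100
    mirror:
      host: istio-support
      subset: v2
    mirrorPercentage:
      value: 10.0   # about 10% of requests are copied to v2
```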

Video: Advanced traffic management using Istio

Now it’s time for some action. Watch the following video for a demo of advanced traffic management with Istio. It walks through traffic management, retries, circuit breaking, and fault injection, with an application deployed in a Kubernetes cluster along with the Istio ingress gateway.

Configuring advanced traffic management and network resilience testing at scale

The examples so far involve no more than two microservices, but most DevOps engineers and architects deal with a fleet of them, deployed across multiple clusters and cloud providers. Configuring advanced traffic management and testing the resiliency of these services can quickly become a headache, for reasons such as:

- Configuration drifts/inconsistent configs

- Lack of deep knowledge of Istio’s capabilities

- Architecture complexity/multicluster and multicloud challenges

This is where IMESH can help. IMESH helps enterprises deal with the complexity of configuring Istio at scale, enterprise-wide (without breaking anything, of course). If you are interested, feel free to talk to one of our Istio experts (no obligation) or book a free Istio pilot.