Introducing Application Load Balancer (ALB)

An Application Load Balancer (ALB) is layer-7 software that routes traffic to destinations such as containers, IP addresses, VMs, or Lambda functions based on the headers or content of HTTP or HTTPS requests. ALBs are an important component of modern microservices architectures and cloud-native applications.

Key features of Application Load Balancers:

- Distributes traffic requests to multiple instances of the same application.

- Evaluates and routes requests based on host, header, path, etc.

- Serves both external traffic and internal traffic within VPCs.

- Supports the HTTP, HTTPS, HTTP/2, and gRPC protocols.

- Redirects requests to different target environments.

Challenges of standard Application Load Balancers

If architects and DevOps teams are considering or already using Kubernetes, classic Application Load Balancers impose several limitations when adopting and scaling cloud-native applications. A few of these limitations are outlined below:

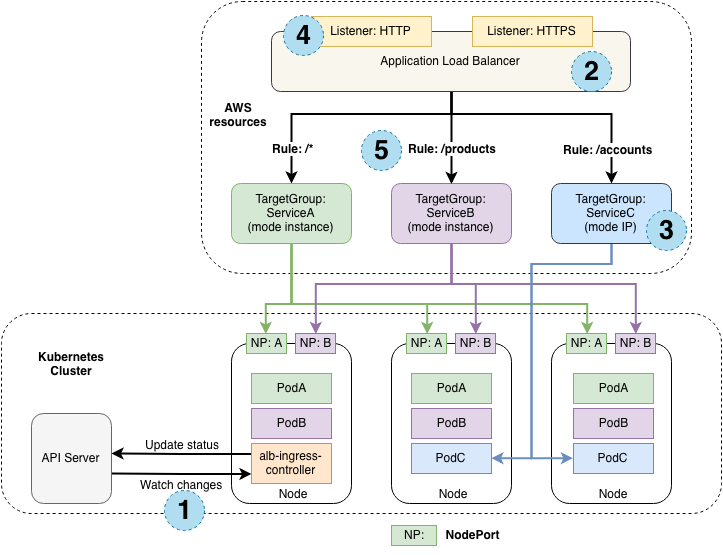

- Since the ALB sits outside the cluster, it cannot reach applications inside the cluster directly. DevOps teams therefore need to expose a service using NodePort, or allow direct pod access through a specific Kubernetes CNI, so that the ALB can reach the applications it load balances (refer to Fig A). If the CNI does not allow that access, the ALB can never reach the application. With the other strategy, opening a NodePort and sharing it with the ALB, there is a risk of unnecessarily exposing all the applications on that node. Such strategies are therefore not suitable for production.

- Architects need extra configuration to secure traffic from the ALB to the cluster.

- Configuring mTLS can be complex.

- Teams might end up using multiple Ingress controllers in certain scenarios, e.g. in multicluster or multicloud setups. The ALB then has to connect to an Ingress controller, and the additional network hops degrade network performance over time.
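To make the NodePort exposure concrete, here is a minimal sketch of a Service of type NodePort. The service name, labels, and port numbers are hypothetical and are not taken from the setup described in this article. Kubernetes opens the chosen `nodePort` on every node in the cluster, which is what lets an external ALB reach the service, and also why the exposure is broader than intended.

```yaml
# Hypothetical example: names, labels, and ports are assumptions for illustration.
apiVersion: v1
kind: Service
metadata:
  name: my-app-nodeport
spec:
  type: NodePort
  selector:
    app: my-app          # pods backing this service
  ports:
  - port: 80             # cluster-internal service port
    targetPort: 8080     # container port on the pods
    nodePort: 30080      # opened on every node (default range 30000-32767)
```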

Refer to Fig A to get an idea of a real-life implementation of load balancers with Kubernetes workloads.

Fig A: Working architecture of load balancers in Kubernetes workloads

Introduction to Istio service mesh

Istio service mesh addresses many of these problems in modern architectures where microservices are deployed into Kubernetes. Istio is an open-source service mesh that abstracts communication out of the microservices themselves. With Istio, you can implement advanced networking and zero-trust security. The Istio service mesh has 3 major components:

- Data plane: Istio injects a lightweight sidecar proxy based on Envoy. The Envoy proxy handles both L4 and L7 traffic, so Istio can apply networking and security policies selectively.

- Control plane: Istio offers a central plane from which all network policies (such as load balancing) and security policies (such as mTLS) can be implemented at the edge and at the data plane level.

- Ingress Gateway: Istio provides an Ingress Gateway by default to handle the traffic at the edge. You can implement all the advanced networking (retries, timeouts, failover, etc.) including load balancing using Istio Ingress Gateway.
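As an illustration of a security policy applied from the control plane, the sketch below enables strict mTLS with Istio's PeerAuthentication resource. It assumes Istio is installed with `istio-system` as its root namespace; a policy placed there without a workload selector applies mesh-wide. Place it in another namespace to scope it more narrowly.

```yaml
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: istio-system   # assumed root namespace; makes the policy mesh-wide
spec:
  mtls:
    mode: STRICT            # sidecars accept only mutual-TLS traffic
```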

Architecture of Istio Ingress Gateway as Application Load Balancer

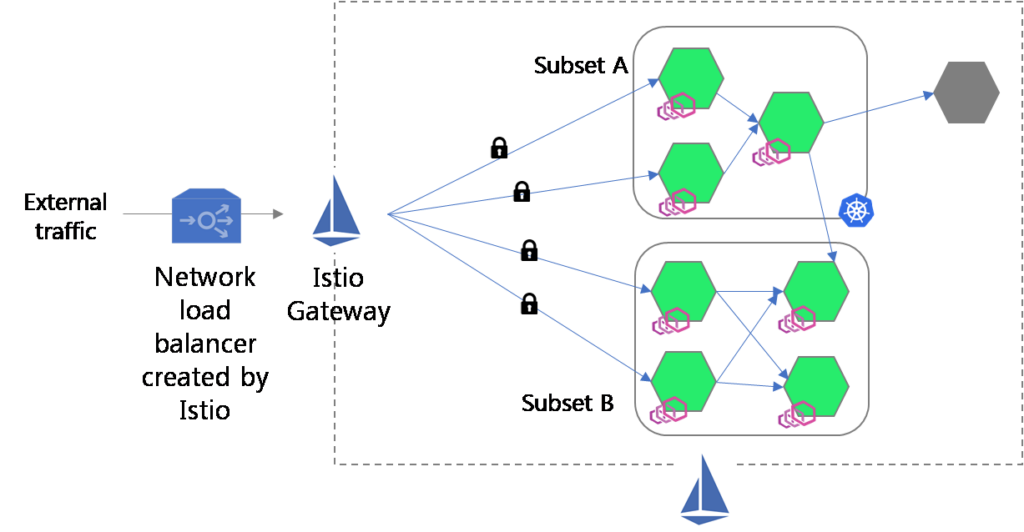

Istio Ingress Gateway can be used as an application load balancer and can be extended to handle more complicated networking functions as well. Fig B shows the Istio Ingress Gateway used as the load balancer. Once you deploy it, Istio creates a network load balancer which distributes the load evenly among the nodes. The network load balancer forwards the requests, and the L7 processing (such as filtering and routing rules) happens at the Istio Ingress Gateway.

Istio provides two Kubernetes CRDs, VirtualService and DestinationRule, to implement advanced networking functions such as retries, timeouts, circuit breaking, and failover. You can also use Istio to implement a canary deployment strategy with Argo Rollouts.

Fig B: Istio Ingress Gateway implementation as the application load balancer.
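The networking functions named in this section map onto fields of the VirtualService and DestinationRule resources. The following is a minimal sketch, not a tuned configuration: the resource names and all numeric values are assumptions chosen for illustration, and `echoserver-service` is the service name used in the walkthrough later in this article.

```yaml
# Illustrative values only; tune them for your own workload.
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: echoserver-resilience         # hypothetical name
spec:
  hosts:
  - echoserver-service
  http:
  - route:
    - destination:
        host: echoserver-service
    timeout: 5s                       # overall deadline per request
    retries:
      attempts: 3                     # retry a failed request up to 3 times
      perTryTimeout: 2s
      retryOn: 5xx,connect-failure
---
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: echoserver-circuit-breaker    # hypothetical name
spec:
  host: echoserver-service
  trafficPolicy:
    connectionPool:
      http:
        http1MaxPendingRequests: 10   # queue limit before requests are rejected
    outlierDetection:
      consecutive5xxErrors: 5         # eject a pod after 5 consecutive 5xx responses
      interval: 10s
      baseEjectionTime: 30s
      maxEjectionPercent: 50
```

Retries and timeouts are set per route in the VirtualService, while circuit breaking is a property of the destination and so sits in the DestinationRule.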

How to Load Balance Traffic using Istio Ingress Gateway

In this section, we show how to implement Istio Ingress Gateway as an ALB. You can refer to the Istio Ingress as ALB git repo for the resources we have used.

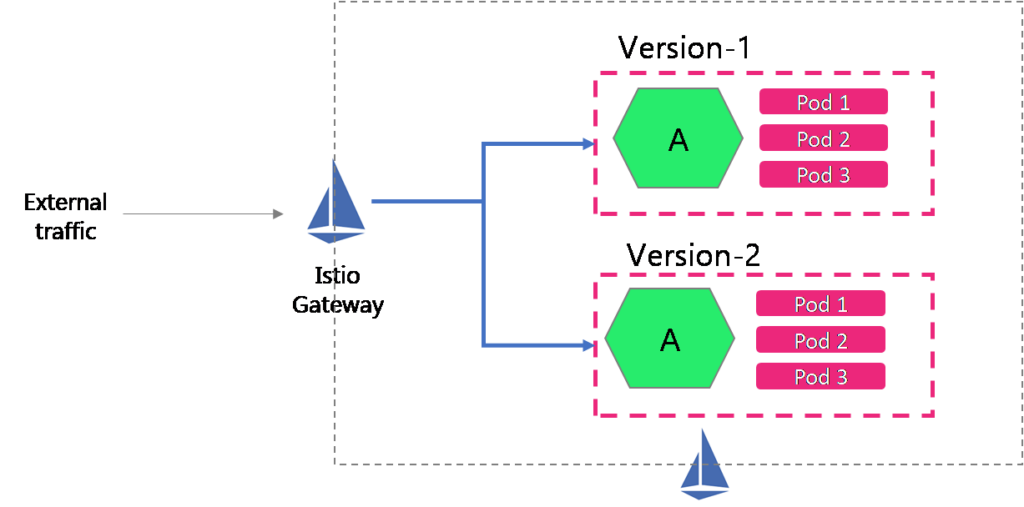

The idea is to create two versions (V1 and V2) of the same service (we have used echoserver) and let Istio Gateway resources load balance external traffic across the pods of both versions. The accompanying image represents the idea.

Video on Load balancing Kubernetes workloads using Istio Ingress

In case you are a video person or want to see Istio in action, here is the video of Istio Ingress used as a load balancer.

Create a service

You need to create a service with different versions. I have used the echoserver image. The deployment and service YAML for the echo-server application are in echoserver-deployment-v1.yaml and echoserver-deployment-v2.yaml.
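The referenced files are not reproduced here, so the following is only a sketch of what a v1 Deployment and the shared Service might look like. The Deployment name, the `app` label, the replica count, and the image placeholder are assumptions. What the rest of the walkthrough relies on is the `version: v1` label (matched by the destination rule subsets), the service name `echoserver-service`, and port 80.

```yaml
# Sketch only: names, labels, replicas, and image are assumptions.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echoserver-v1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: echoserver
      version: v1
  template:
    metadata:
      labels:
        app: echoserver
        version: v1          # matched by the v1 subset of the destination rule
    spec:
      containers:
      - name: echoserver
        image: "<<echoserver image>>"   # placeholder; use the image from the repo
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: echoserver-service
spec:
  selector:
    app: echoserver          # no version label, so it selects both v1 and v2 pods
  ports:
  - name: http
    port: 80
    targetPort: 80
```

Under these assumptions, a v2 Deployment would differ only in its name and its `version: v2` label, and both Deployments would share the one Service.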

Implement Istio Gateway and virtual service resources

You can create Istio Gateway and virtual service resources to receive HTTP traffic from the public internet and to route that traffic to the echo-server service, respectively.

Refer to the echo-Gateway.yaml file, or the code below:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: echo-gateway        # Kubernetes resource names must be lowercase
spec:
  selector:
    istio: ingressgateway   # label on the default Istio ingress gateway pods
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
```

You can refer to the virtual service resource or the code below. Note that we have written the rule in the virtual service resource in such a way that version 1 of the service gets 80% of the traffic and version 2 gets 20%.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: echoserver-vs
spec:
  hosts:
  - "*"
  gateways:
  - echo-gateway            # must match the Gateway name above
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        port:
          number: 80
        host: echoserver-service
        subset: v1
      weight: 80            # v1 receives 80% of requests
    - destination:
        port:
          number: 80
        host: echoserver-service
        subset: v2
      weight: 20            # v2 receives 20% of requests
```

Please note that the deployment specifies that the container runs on port 80, hence we have used the same port number (80) in the Istio Gateway and virtual service resources.

Since we have used the service subsets (v1 and v2), we need to define them using the Istio destination rule resource. Refer to the code below.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: echo-dr
spec:
  host: "*"
  subsets:
  - name: v1
    labels:
      version: v1   # pods labelled version=v1
  - name: v2
    labels:
      version: v2   # pods labelled version=v2
```

Access the service and validate load balancing

Once you deploy the resources, get the external IP of the Gateway to access the echo-server service. The command to get the external IP of the Gateway is:

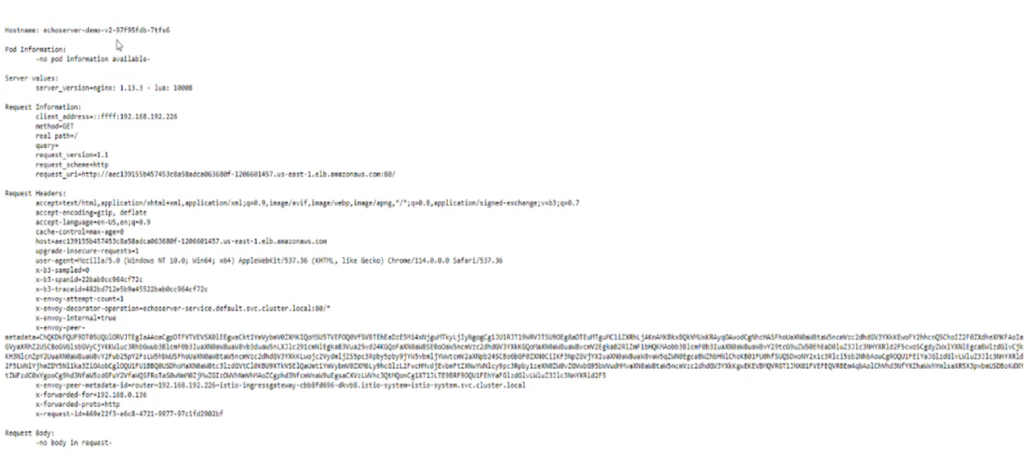

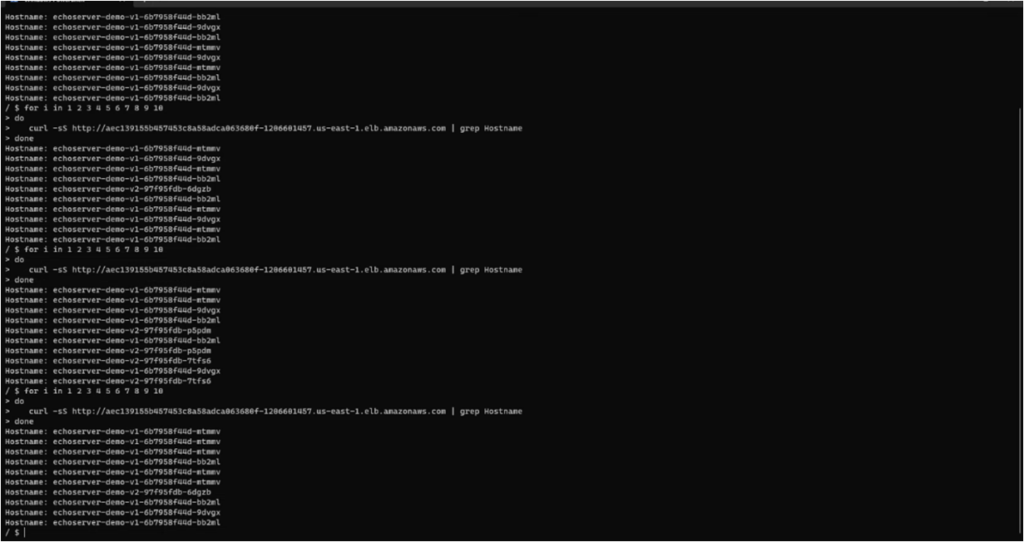

```shell
kubectl get svc -n <<namespace>>
```

You can use the external IP address to access the service. On refreshing, you can see the versions being load balanced by the Istio Ingress Gateway: roughly 80% of the time the V1 pods are accessed, and the V2 pods the rest of the time. Refer to the accompanying screenshot.
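If you prefer to capture the address in a variable rather than read it from the table output, a small helper such as the one below can be used. It is a sketch that assumes a default Istio installation, where the gateway service is named `istio-ingressgateway` and lives in the `istio-system` namespace; the function name `gateway_address` is hypothetical. Some cloud providers report a hostname instead of an IP, so the helper prints whichever field is set.

```shell
# Print the external address of the ingress gateway service.
# Defaults assume a standard Istio install; pass a namespace and
# service name as arguments to override them.
gateway_address() {
  ns="${1:-istio-system}"
  svc="${2:-istio-ingressgateway}"
  kubectl get svc "$svc" -n "$ns" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}{.status.loadBalancer.ingress[0].hostname}'
}
```

For example, `EXTERNAL_IP=$(gateway_address)` stores the address so that later requests can use `curl -sS "http://${EXTERNAL_IP}/"`.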

Validating the Istio load balancing from the sleep pods (Optional)

Validating the version changes from the browser can be tricky, as the browser might take some time to process the request. In that case, you can use a sleep service and curl the echo-server with HTTP requests. This step is optional and is intended to show the Istio load balancing functionality in greater detail.

You can deploy a sleep service; refer to the deployment YAML here. Once you deploy the sleep service, you can get into one of the pods by using the command:

```shell
kubectl exec -it <<pod name>> -- sh
```

Then run curl 10 times to see the output.

```shell
for i in 1 2 3 4 5 6 7 8 9 10
do
  curl -sS <<external IP>> | grep Hostname
done
```

Refer to the screenshot, where the response came from V2 only 2 times or fewer. This means Istio limits the requests sent from the sleep server to V2 to about 20% of the total.
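Ten requests is a small sample, so the observed split can drift from 80/20. A larger run with a tally makes the ratio easier to see. The helper below is a sketch that assumes the pod names contain `-v1-` or `-v2-` (for example, Deployments named `echoserver-v1` and `echoserver-v2`), which this article does not confirm; adjust the patterns to your own pod names. The function name `tally_versions` is hypothetical.

```shell
# Count echo-server responses per version from "Hostname" lines on stdin.
# Assumes pod names contain -v1- or -v2-; other lines are ignored.
tally_versions() {
  awk '/Hostname/ {
         if ($0 ~ /-v1-/) { v1++ } else if ($0 ~ /-v2-/) { v2++ }
       }
       END { printf "v1=%d v2=%d\n", v1, v2 }'
}
```

From inside the sleep pod, a run such as `for i in $(seq 1 100); do curl -sS <<external IP>>; done | tally_versions` prints one summary line with the count for each version.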

Benefits of Istio Ingress Gateway as Application Load Balancer

There are several benefits of using Istio Ingress Gateway as an application load balancer (ALB), as we have seen in the demo above.

- Services don’t need to be exposed outside the cluster.

- Out-of-the-box support for securing traffic.

- Native integration with Kubernetes clusters.

- One place to monitor and configure all traffic rules.

- Auto scaling based on several metrics.

- Granular control over load balancing at even subsets of applications, like A/B Testing, Canary release, etc.

Conclusion

Istio service mesh can help architects and DevOps teams reimagine their IT infrastructure by making it more agile and secure. There are several use cases for Istio service mesh in network and security management. For example, you can use Istio as the API Gateway and secure your internal communication, or implement advanced deployment strategies in CI/CD.

In case you want a demo of Istio service mesh please reach out to one of our Istio experts. If you want enterprise support for Istio service mesh, contact us.
