In my last blog, we explored rate limiting, the types of Istio rate limiting, and I showed you how to set up an Istio local rate limiter per pod/proxy and ingress gateway level.

(I highly recommend you go through the blog if you are new to Istio rate limiting: How to Configure Istio Local Rate Limiting.)

Here, we will look at the other type, Istio global rate limiting. I will explain what it is and how it works, and show you how to configure it on a per-client-IP basis.

Istio global rate limiting

Rate limiting applied on an application or service in the Istio mesh, where the application/Envoy proxy makes gRPC calls to a global rate limit service for request quota, is called Istio global rate limiting.

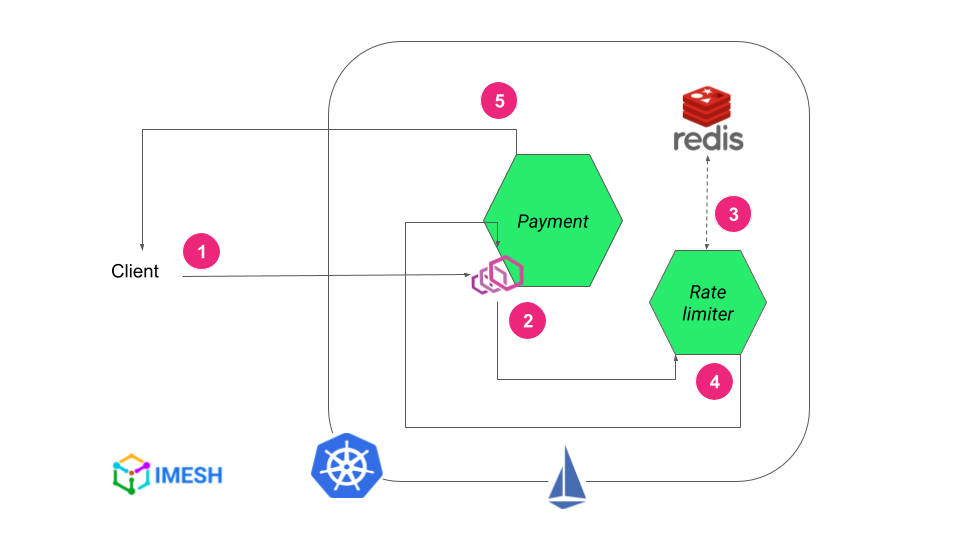

Istio global rate limiting workflow diagram

Here is how Istio global rate limiting works:

- The client makes a request to the Istio ingress gateway or a service in Istio mesh.

- Envoy sidecar proxy of the service intercepts the request and makes a gRPC call to a Global rate limiter service to decide whether to rate limit the request.

- Global rate limiter service interacts with its Redis database, where the actual quota state and request counters are cached, and then takes the rate-limiting decision for the request.

- Global rate limiter service returns the decision to the sidecar Envoy proxy.

- Based on the decision, the proxy either rejects the request and returns HTTP 429 (Too Many Requests) to the client, or forwards it to the service, which handles it as usual (for example, with HTTP 200).
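The workflow above can be sketched as a small simulation. This is only a toy model in Python, not the actual Envoy or rate limit service code: `RateLimitService`, `should_rate_limit`, and `proxy_handle` are hypothetical names, an in-memory dict stands in for Redis, and the per-minute window reset is left out for brevity.

```python
from collections import defaultdict


class RateLimitService:
    """Toy stand-in for the global rate limit service (hypothetical API)."""

    def __init__(self, requests_per_unit):
        self.requests_per_unit = requests_per_unit
        # In the real setup the counters live in Redis; a dict stands in here.
        self.counters = defaultdict(int)

    def should_rate_limit(self, descriptor):
        """Return True when the quota for this descriptor is exhausted."""
        self.counters[descriptor] += 1
        return self.counters[descriptor] > self.requests_per_unit


def proxy_handle(service, descriptor):
    """Mimic the proxy: ask the service, then reject (429) or forward (200)."""
    if service.should_rate_limit(descriptor):
        return 429  # Too Many Requests, returned by the proxy itself
    return 200  # request is forwarded to the application


service = RateLimitService(requests_per_unit=5)
statuses = [proxy_handle(service, ("PATH", "/get")) for _ in range(7)]
print(statuses)
```

With a quota of 5, the first five calls in this sketch return 200 and the remaining two return 429.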

Istio global rate limiting helps DevOps and architects set rate limiting per IP or dynamic header values. They can apply a rate limiter at the service/application level — instead of a service instance/proxy level — using Istio global rate limiting.

The difference in token bucket allocation between Istio global rate limiting and local rate limiting at the service level

Note that you can also set a rate limiter at the service level by configuring the appropriate workloadSelector in Istio local rate limiting. However, the token bucket quota will not be shared among the replica pods/Envoy proxies, and each proxy will have its own token bucket.

That is, if there is a service with 3 replicas and you set the rate limiter to 5 for the service, each pod will get a token bucket with a maximum of 5 tokens. Together, the replicas can accept up to 15 requests before every bucket is exhausted and the rate limiter is triggered.

In global rate limiting the token bucket quota is shared, i.e. the service will only accept 5 requests in total, regardless of the number of replicas it has.
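To make the arithmetic concrete, here is a minimal Python sketch of the two allocation models. It assumes requests are spread round-robin across the replicas and ignores token refills; the function names are made up for illustration.

```python
REPLICAS = 3
LIMIT = 5
INCOMING = 20  # requests arriving within one refill interval


def accepted_with_local_limit(replicas, limit, incoming):
    """Each replica keeps its own bucket of `limit` tokens."""
    buckets = [limit] * replicas
    accepted = 0
    for i in range(incoming):
        pod = i % replicas  # assumption: round-robin load balancing
        if buckets[pod] > 0:
            buckets[pod] -= 1
            accepted += 1
    return accepted


def accepted_with_global_limit(limit, incoming):
    """All replicas draw from one shared bucket of `limit` tokens."""
    bucket = limit
    accepted = 0
    for _ in range(incoming):
        if bucket > 0:
            bucket -= 1
            accepted += 1
    return accepted


local_total = accepted_with_local_limit(REPLICAS, LIMIT, INCOMING)
global_total = accepted_with_global_limit(LIMIT, INCOMING)
print(local_total, global_total)
```

Under these assumptions the local model lets 15 of the 20 requests through, while the global model accepts only 5.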

Steps to configure Istio global rate limiter

- Step #1: Apply ConfigMap for the rate limit service

- Step #2: Deploy rate limit service and Redis

- Step #3: Apply EnvoyFilter CRD

Let us see the steps in action.

Step #1: Apply ConfigMap for the rate limit service

In the local rate limiting, we configured the token bucket in the Envoy proxy/pod itself. The proxy then rate-limited requests based on the configuration.

Global rate limiting requires some extra components, including a ConfigMap for the global rate limit service.

DevOps and architects can define the request quota for the service that needs rate limiting in the ConfigMap resource (sample ConfigMap YAML below).

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ratelimit-config
      namespace: istio-system
    data:
      config.yaml: |
        domain: productpage-ratelimit
        descriptors:
          - key: ratelimitheader
            descriptors:
              - key: PATH
                value: "/get"
                rate_limit:
                  unit: minute
                  requests_per_unit: 5

(You can see that the domain value here is set to productpage-ratelimit. The value should be the same in the EnvoyFilter CRD, which you will see in step #3, for the rate limiter to work.)

In the reference implementation of the global rate limit service, which reads this ConfigMap, descriptors listed at the same level are treated as alternatives (OR), while nested descriptors are ANDed together.

The above configuration has nested descriptors. A request is checked for the ratelimitheader descriptor with any value (since I haven’t specified one) and for the path /get. Only requests that satisfy both conditions are limited to 5 requests per minute.

A key thing to note here is that the order and the number of the descriptors should be the same as defined for rate limit actions in the EnvoyFilter resource.
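The matching rules can be illustrated with a simplified Python model. This is my own approximation of the behavior described here, not the rate limit service's code: `CONFIG` mirrors the ConfigMap as nested dicts, and `find_limit` is a hypothetical helper that walks the tree in the order the proxy sends the descriptor entries.

```python
CONFIG = [
    {
        "key": "ratelimitheader",  # no value: any header value matches
        "descriptors": [
            {
                "key": "PATH",
                "value": "/get",
                "rate_limit": {"unit": "minute", "requests_per_unit": 5},
            }
        ],
    }
]


def find_limit(descriptors, entries):
    """Follow the request's ordered (key, value) entries through the tree.

    Siblings at one level are alternatives (OR); every nesting level must
    match the next entry in order (AND).
    """
    if not entries:
        return None
    (key, value), rest = entries[0], entries[1:]
    # Prefer an exact key/value match, then fall back to a key-only entry.
    exact = [d for d in descriptors if d["key"] == key and d.get("value") == value]
    key_only = [d for d in descriptors if d["key"] == key and "value" not in d]
    candidates = exact + key_only
    if not candidates:
        return None
    node = candidates[0]
    if not rest:
        return node.get("rate_limit")
    return find_limit(node.get("descriptors", []), rest)


matched = find_limit(CONFIG, [("ratelimitheader", "true"), ("PATH", "/get")])
wrong_path = find_limit(CONFIG, [("ratelimitheader", "true"), ("PATH", "/post")])
wrong_order = find_limit(CONFIG, [("PATH", "/get"), ("ratelimitheader", "true")])
print(matched, wrong_path, wrong_order)
```

In this model only the first lookup finds a limit. The second fails on the path value, and the third fails because the entries arrive in a different order than the configuration expects.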

Once you apply the ConfigMap YAML, you can proceed to step #2.

Step #2: Deploy rate limit service and Redis

Istio provides a Redis-based, sample rate limit service to set up global rate limiting. The Redis database stores the request quota and caches the request count from the rate limit service.

So there are 2 deployments to apply: rate limit service and Redis. See their configurations here.

Note:

- The ConfigMap applied in step #1 should be in the same namespace as the rate limit service. It is mounted by the deployment during init.

- Every time you change the ConfigMap, restart the rate limit service with the following command for the change to take effect:

      kubectl rollout restart deploy [rate_limit_service_name] -n [namespace]

Step #3: Apply EnvoyFilter CRD

I have already explained most of the fields on an EnvoyFilter resource in the Istio local rate limiting blog. Please have a look at it if you haven’t already.

There are 2 EnvoyFilters in the sample EnvoyFilter CRD here:

- The first EnvoyFilter sets the rate limit service’s location for httpbin. It is defined under the rate_limit_service field as cluster_name: outbound|8081||ratelimit.istio-system.svc.cluster.local.

      ...
      domain: productpage-ratelimit
      failure_mode_deny: true
      timeout: 10s
      rate_limit_service:
        grpc_service:
          envoy_grpc:
            cluster_name: outbound|8081||ratelimit.istio-system.svc.cluster.local
            authority: ratelimit.istio-system.svc.cluster.local
        transport_api_version: V3
      ...

- The second EnvoyFilter defines the rate-limiting actions for httpbin. I have defined descriptor keys for two request headers: one for the pseudo-header path and the other one to enable the rate limiter (x-rate-limit-please).

      ...
      patch:
        operation: MERGE
        # Applies the rate limit rules.
        value:
          rate_limits:
            - actions: # any actions in here
                - request_headers:
                    header_name: "x-rate-limit-please"
                    descriptor_key: "ratelimitheader"
                    skip_if_absent: true
                - request_headers:
                    header_name: ":path"
                    descriptor_key: "PATH"
      ...

The Istio global rate limiter should work after you apply the EnvoyFilter CRD. You can test the configuration using curl:

    curl -v <url>/get -H "x-rate-limit-please: true"

Istio rate limiting per IP

One of the crucial use cases of Istio global rate limiting is that it helps DevOps and architects set rate limiting per client IP. The global rate limiter is more suitable for IP address-based rate limiting since it supports dynamic rate limits, unlike the local rate limiting filter.

Dynamic rate limits mean that the global rate limiter will first check for specific values in the descriptor and give a token bucket accordingly — but if no values are defined then every value for that descriptor is given a separate token bucket.

So if you add a descriptor entry for IP address without mentioning a specific remote address, requests from each IP will receive a token bucket. The requests from the IPs will be rate-limited once they exhaust their individual request quota (see the example below).

With the local rate limiter, you cannot configure dynamic rate limits. You have to specify a value for every descriptor, which means you will end up having one entry per IP address.

For example, you will have to configure a descriptor entry for the remote address 10.0.0.1, another one for 10.0.0.2, and so on. Requests from IPs not specified in the descriptor will not be rate-limited.
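The idea of one bucket per descriptor value can be sketched in a few lines of Python. This is a toy model under my own assumptions: the addresses are examples, `apply_per_ip_limit` is a hypothetical name, and the per-minute reset is omitted.

```python
from collections import Counter

REQUESTS_PER_MINUTE = 5


def apply_per_ip_limit(client_ips, limit=REQUESTS_PER_MINUTE):
    """Return one status code per request, counting each IP separately."""
    seen = Counter()
    statuses = []
    for ip in client_ips:
        seen[ip] += 1  # a counter appears the first time an IP is seen
        statuses.append(200 if seen[ip] <= limit else 429)
    return statuses


traffic = ["10.0.0.1"] * 6 + ["10.0.0.2"] * 2
result = apply_per_ip_limit(traffic)
print(result)
```

Here the sixth request from 10.0.0.1 is rejected, while 10.0.0.2 still has its full quota, without either address being listed in the configuration.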

Example for IP-based Istio global rate limiting at ingress

Let us see a sample Istio global rate limiting at ingress based on the client’s remote address.

The configuration is similar to how we set it up for the local rate limiter for the Ingress gateway in the previous blog (scroll down towards the end to see it). We changed the workloadSelector and context to istio: ingressgateway and GATEWAY, respectively.

The change when configuring global rate limiting per IP is that you have to define an additional remote_address action in the EnvoyFilter CRD, where rate limiting actions for the service can be defined:

    ...
    patch:
      operation: MERGE
      # Applies the rate limit rules.
      value:
        rate_limits:
          - actions: # any actions in here
              - remote_address: {}
              - request_headers:
                  header_name: ":path"
                  descriptor_key: "PATH"
    ...

After that, you can configure the global rate limiter service to use a dedicated token bucket for each value of the remote address by not specifying any address at all.

Since the rate limiter service reads its settings from the ConfigMap, you can make that change under the descriptors field in the ConfigMap resource:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ratelimit-config
      namespace: istio-system
    data:
      config.yaml: |
        domain: productpage-ratelimit
        descriptors:
          - key: remote_address
            descriptors:
              - key: PATH
                value: "/get"
                rate_limit:
                  unit: minute
                  requests_per_unit: 5

Once you successfully configure and apply this, you can see that requests through the ingress gateway show up in the rate limit service log in the following format:

    "got descriptor: (remote_address=10.224.0.10),(PATH=/get)

The key thing to note here is that we got the remote address, but it belongs to a node in the cluster, not the client.

To get around this, we need to configure the load balancer or gateway service to preserve the client IP as mentioned in the Istio docs for the Istio ingress gateway.

You can use the following command and update the ingress gateway to set externalTrafficPolicy: Local to preserve the original client source IP on the ingress gateway:

    kubectl patch svc istio-ingressgateway -n istio-system -p '{"spec":{"externalTrafficPolicy":"Local"}}'

A similar configuration can be done to rate limit per client IP if you are using Nginx ingress.

Advanced configurations with Istio global rate limiting

Setting dynamic values using Istio global rate limiting allows DevOps and architects to set up advanced rate-limiting configurations.

For example, you can use a header-to-metadata filter in the EnvoyFilter resource and use regular expressions to get only the URL path that you need, without any query parameters:

    ...
    patch:
      operation: INSERT_BEFORE
      value:
        name: envoy.filters.http.header_to_metadata
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.http.header_to_metadata.v3.Config
          request_rules:
            - header: ":path"
              on_header_present:
                metadata_namespace: ratelimiter
                key: url
                regex_value_rewrite:
                  pattern:
                    google_re2: {}
                    regex: '^(\/[\/\d\w-]+)(\?)?.*'
                  substitution: '\1'
    ...

The above regular expression substitution gets only the URL path without any query parameters and creates metadata in the request under the key url, in the ratelimiter namespace.
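If you want to check what the pattern keeps before deploying it, you can try it locally. The sketch below uses Python's re module rather than RE2, which Envoy uses, so treat it as an approximation; `strip_query` is a hypothetical helper name and the sample paths are my own.

```python
import re

# Same pattern and substitution as in the header_to_metadata rule above.
PATH_PATTERN = re.compile(r'^(\/[\/\d\w-]+)(\?)?.*')


def strip_query(path):
    """Keep only the leading path, dropping any query string."""
    return PATH_PATTERN.sub(r'\1', path)


for raw in ["/get?foo=bar", "/api/v1/users?id=42", "/status/200"]:
    print(raw, "->", strip_query(raw))
```

In this sketch, /get?foo=bar becomes /get and /api/v1/users?id=42 becomes /api/v1/users, while /status/200 is left unchanged.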

You can then match the inbound request on the desired port (I have used port 8000) by using the url metadata key in the VIRTUAL_HOST configuration:

    ...
    - applyTo: VIRTUAL_HOST
      match:
        context: SIDECAR_INBOUND
        routeConfiguration:
          vhost:
            name: "inbound|http|8000"
      patch:
        operation: MERGE
        value:
          rate_limits:
            - actions:
                - metadata:
                    descriptor_key: url
                    metadata_key:
                      key: ratelimiter
                      path:
                        - key: url
    ...

The above configuration practically cleans up the URL by removing unnecessary query parameters from the request path, taking just the url value.

Alternatively, you can use the header-to-metadata filter to pull any specific query parameter or cookies (user ID, for example) from the request path using regex and set a rate limiter based on that.
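As a rough illustration of that idea, the Python sketch below pulls a single query parameter out of a request path with a regex. The parameter name user_id, the pattern, and the helper `extract_user_id` are all assumptions of mine for the example; you would still need to adapt the pattern to RE2 and to your own API before using it in a header-to-metadata rule.

```python
import re

# Hypothetical parameter name; adjust it to whatever your API actually uses.
USER_ID_PATTERN = re.compile(r'^[^?]*\?(?:.*&)?user_id=([^&]*).*$')


def extract_user_id(path):
    """Return the user_id query parameter, or None when it is absent."""
    match = USER_ID_PATTERN.match(path)
    return match.group(1) if match else None


first = extract_user_id("/get?user_id=42&lang=en")
later = extract_user_id("/get?lang=en&user_id=alice")
missing = extract_user_id("/get?lang=en")
print(first, later, missing)
```

The extracted value could then serve as the descriptor value, so that each user gets a separate token bucket in the same way each IP does.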

Istio global or local rate limiting: which one to use?

DevOps and architects may consider the following pointers while trying to decide which type of Istio rate limiting they want to implement:

- If your goal is to reduce the load per pod/Envoy proxy, then Istio local rate limiting is the way to go. It helps to set up the rate limiter per pod.

- Local rate limiter is also cheaper and more reliable since rate limiting happens at the proxy level without needing any extra components, such as a rate limiter service used in the global one.

- If your goal is to set up Istio rate limiting based on client IP, then choose global rate limiting. As of now, the local rate limiter filter cannot be used for rate limiting per IP address.

- Istio global rate limiting is also the easier choice for path-based or header-based rate limiting, because it can match paths and headers by regex. For example, you can configure a path match on /api/*, and each endpoint under /api will then have its own token bucket. The local rate limiter matches only exact paths and headers.

- You can also configure local rate limiting in conjunction with the global one to reduce the load on a particular pod, for example. Whichever shows up first in the Envoy proxy configuration will get applied first and take effect.

Leverage Istio rate limiting to protect crucial services

If you are a DevOps engineer or architect using Istio, rate limiting is an essential safeguard for services such as login and payment against DoS and brute-force attacks. It also helps you prevent service overload and keep your infrastructure available.

At IMESH, we offer managed Istio and support DevOps and architects with setting Istio rate limiting for services, and our Istio experts help remove the operational complexities associated with managing Istio at scale in production.

Feel free to check out our offering and pricing here: https://imesh.ai/managed-istio.html.