Architects, DevOps engineers, and cloud engineers are trying to decide whether to continue their journey with an API gateway or adopt an entirely new service mesh technology. In this article, we look at the difference between the two and lay out some reasons for a software team to consider, or not consider, a service mesh such as Istio (chosen here because it is the most widely used service mesh).

Please note that we are heavily influenced by the concept of MASA, a mesh architecture of apps, APIs, and services, which aims to give software development and delivery teams infrastructure agility, flexibility, and room for innovation. Because of this forward-looking stance, we may be critical of the API gateway, but our evaluation is meant to be genuine and helpful for enterprises planning a radical transformation.

Let us look into some current trends in app modernization.

Trends of app modernization

- Microservices: Nearly every mid to large company uses microservices to deliver services to the end customer. Compared with monoliths, microservices let teams build smaller apps and release them to the market independently.

- Cloud: This needs no explanation. Today it is hard to imagine providing services without being on the cloud. Because of regulations, policies, and data-security requirements, companies also adopt a hybrid cloud: a mix of public and private cloud and on-prem VMs.

- Containers (Kubernetes): Containers are used to deploy microservices, and their adoption is rising. Gartner predicts that 15% of workloads worldwide will run in containers by 2026. And most enterprises use managed containers in the cloud for their workloads.

Adoption of these technologies is expected to keep growing in the coming years (Dynatrace predicts that container and cloud adoption will grow by almost 127% y-o-y).

The implication of all these technology trends is that far more data now travels over the network. The question is whether the API gateway and load balancers at the center of app modernization will be sufficient for the future.

Before we find the answer, let us look at some of the implementations of API gateway.

Sample scenarios of API gateway and load balancer implementation

From discussions with many clients, we have seen various ways in which API gateways and load balancers are configured. Let us quickly go through a few scenarios and the limitations of each.

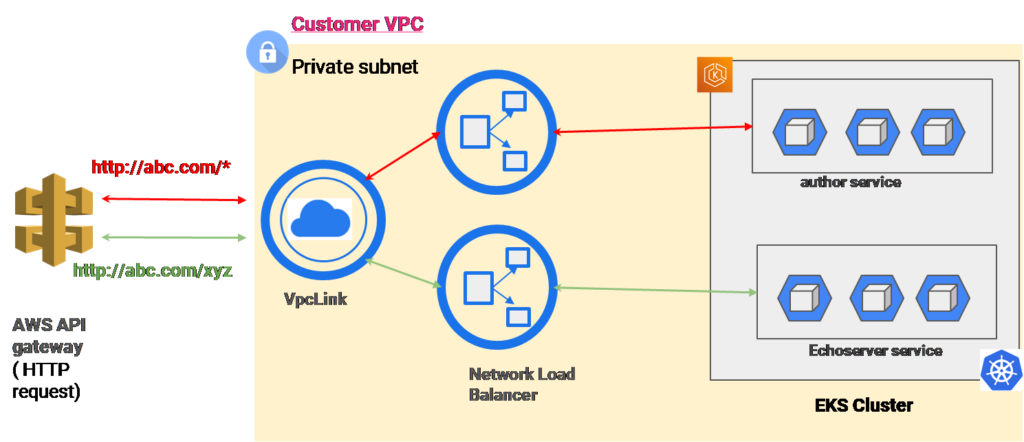

Almost every microservices, cloud, and container journey starts with an API gateway. Take a practical example of two microservices, an author service and an echo server service, hosted on an EKS cluster. (In reality, things can be more complicated, as we will see later.) Suppose the architects or the DevOps team want to expose the two services in the private cluster to the public (through a DNS name such as http://abc.com). One way is to put a network load balancer in front of each service (assuming both are hosted in the same cluster but on different nodes). An API gateway such as AWS API Gateway can then be configured to forward traffic to these network load balancers.

If the cluster is in a private VPC, a VPC Link needs to be introduced so that traffic from the API gateway can enter the private subnet and reach the network load balancers. Each network load balancer then forwards the traffic to its respective service.

Fig A: API gateway and multiple network balancer implementation

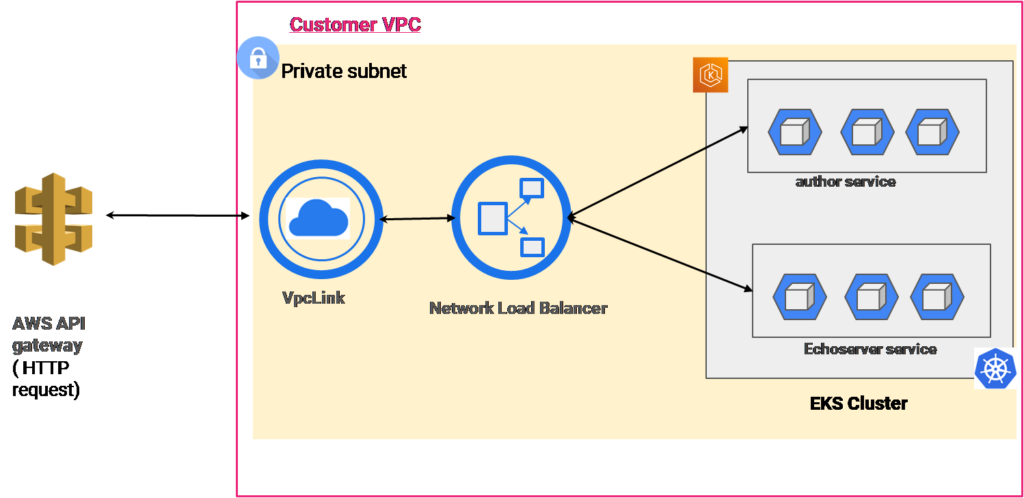

The downside of such an architecture is that it needs multiple load balancers, so costs can climb. Some architects therefore use a single load balancer with several ports opened to serve the various services (in our case, the author and echo server services). Refer to Fig B:

Fig B: API gateway and network balancer implementation
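To compare the layouts in Fig A and Fig B, here is a minimal Python sketch. The load balancer names, path prefixes, and port numbers are hypothetical and chosen only for illustration; real setups will define these in the cloud provider's own configuration.

```python
# Hypothetical routing layouts for the author and echo server services.

# Fig A style: one network load balancer per service.
PER_SERVICE = {
    "/author": ("nlb-author", 80),
    "/echo": ("nlb-echo", 80),
}

# Fig B style: one shared network load balancer, one port per service.
SHARED = {
    "/author": ("nlb-shared", 8080),
    "/echo": ("nlb-shared", 8081),
}


def load_balancer_count(layout):
    """Count the distinct load balancers a layout needs."""
    return len({lb for lb, _port in layout.values()})


def resolve(layout, path):
    """Return the (load balancer, port) the gateway forwards `path` to."""
    for prefix, target in layout.items():
        if path.startswith(prefix):
            return target
    raise LookupError(f"no route for {path}")


print(load_balancer_count(PER_SERVICE), load_balancer_count(SHARED))
```

The shared layout needs fewer load balancers, at the price of managing one listener port per service on the same device.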

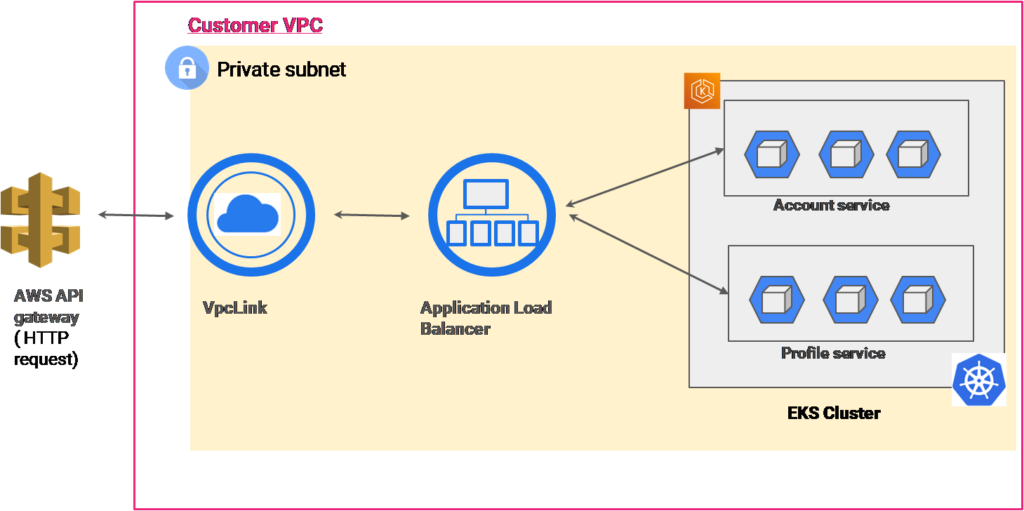

Another widely used architecture puts an application load balancer in place of the network load balancer (refer to Fig C). In such scenarios, some responsibilities, such as L7 traffic routing, can be shifted from the API gateway to the application load balancer, while the API gateway continues to perform advanced traffic management functions such as retries and protocol translation.

Fig C: API gateway and application balancer implementation
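The split of responsibilities in Fig C can be sketched in a few lines of Python. This is an illustration only: the function names, the path prefixes, and the retry count are assumptions, and a real application load balancer or API gateway is configured rather than coded this way.

```python
from typing import Callable, Dict


# Hypothetical backends standing in for the author and echo server services.
def author_service(path: str) -> str:
    return f"author handled {path}"


def echo_service(path: str) -> str:
    return f"echo handled {path}"


# L7 routing table, as an application load balancer might hold it.
ROUTES: Dict[str, Callable[[str], str]] = {
    "/author": author_service,
    "/echo": echo_service,
}


def alb_route(path: str) -> str:
    """Pick a backend by the longest matching path prefix (L7 routing)."""
    matches = [prefix for prefix in ROUTES if path.startswith(prefix)]
    if not matches:
        raise LookupError(f"no route for {path}")
    return ROUTES[max(matches, key=len)](path)


def gateway_call(path: str, upstream: Callable[[str], str], max_attempts: int = 3) -> str:
    """Retry transient upstream failures, as an API gateway might."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    last_error = None
    for _ in range(max_attempts):
        try:
            return upstream(path)
        except ConnectionError as exc:
            last_error = exc  # remember the failure and try again
    raise last_error


print(gateway_call("/author/42", alb_route))
```

Here the load balancer only decides where a request goes, while the gateway decides what to do when the call fails.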

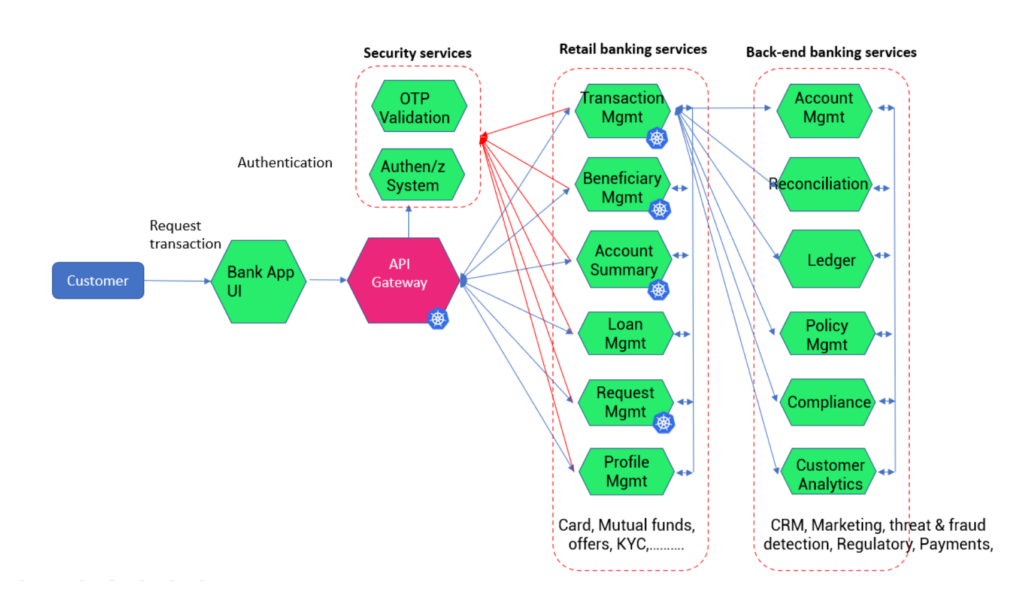

We have looked at simple use cases, but in practice there can be many microservices hosted across multiple clouds and container services, which can look something like the setup below:

Fig D: API gateway in an interdependent microservices setup

But regardless of the architecture, using an API gateway in your app modernization journey comes with a few limitations.

Limitations of API gateway

Traffic handling limited to edge

An API gateway is good enough for taking traffic at the edge, but if you want to manage the traffic between services (as in Fig D), it can soon become complicated.

Sometimes the same API gateway is used to handle the communication between two services, primarily for peer-to-peer connections. However, when two services reside in the same network but use external IP addresses to communicate, the traffic takes unnecessary hops: the data takes longer to arrive, and the communication uses more bandwidth.

This is called U-turn NAT or NAT loopback (network hairpinning), and such designs should be avoided.
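A rough way to see the cost of hairpinning is to count hops. The hop lists in this Python sketch are hypothetical; the actual intermediate devices depend on the network design.

```python
# Hypothetical hop lists for a call from service A to service B
# when both run in the same private network.
DIRECT_PATH = ["service-a", "service-b"]
HAIRPIN_PATH = ["service-a", "nat-gateway", "api-gateway", "load-balancer", "service-b"]


def hop_count(path):
    """Number of network hops between the first and last element."""
    return len(path) - 1


def extra_hops(hairpin, direct):
    """Hops added by leaving the network and coming back in."""
    return hop_count(hairpin) - hop_count(direct)


print(extra_hops(HAIRPIN_PATH, DIRECT_PATH))
```

Every extra hop adds latency and consumes bandwidth on devices that were sized for edge traffic.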

Inability to handle traffic across clusters

When services run in different clusters or data centers (for example, public cloud and on-prem VMs), enabling communication between all of them through an API gateway is tricky. A workaround is to run multiple API gateways in a federated way, but the implementation can be complicated, and the project’s cost may outweigh the benefits.

Lack of visibility into the internal communication

Let us consider an example where the API gateway allows a request to Service A. Service A needs to talk to Service B and Service C to respond to the request. If there is an error in Service B, Service A will not be able to respond. Because the API gateway only sees the failed response from Service A, it is difficult to trace the fault back to Service B.
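The blind spot can be made concrete with a small Python simulation. The service names, status codes, and record structure are assumptions made for this sketch and do not represent any particular gateway's log format.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HopRecord:
    source: str
    destination: str
    status: int


def handle_request(failing: str, hops: List[HopRecord]) -> int:
    """Service A calls B and C; every internal hop is appended to `hops`."""
    status_a = 200
    for dependency in ("service-b", "service-c"):
        status = 503 if dependency == failing else 200
        hops.append(HopRecord("service-a", dependency, status))
        if status != 200:
            status_a = 500  # A cannot build its response
    return status_a


def gateway_view(status_a: int) -> List[HopRecord]:
    """All the edge gateway can record: its own call to service A."""
    return [HopRecord("api-gateway", "service-a", status_a)]


def failing_hops(records: List[HopRecord]) -> List[str]:
    """List the hops that ended in a server error."""
    return [f"{r.source}->{r.destination}" for r in records if r.status >= 500]


internal_hops: List[HopRecord] = []
status = handle_request("service-b", internal_hops)
print(failing_hops(gateway_view(status)))  # what the gateway can report
print(failing_hops(internal_hops))         # what per-hop records would show
```

From the edge, the failure appears to belong to Service A; only records taken at each internal hop point to Service B.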

No control over network inside the cluster

When DevOps and cloud teams want to control internal communication or create network policies between microservices, an API gateway cannot be used.

East-west traffic is not secured

Since API gateway usage is limited to the edge, the traffic inside a cluster (say, an EKS cluster or a group of EC2 instances) is not secured by it. If an attacker compromises one service in the cluster, they can quickly move on to take control of other services (known as lateral movement). Architects can use workarounds such as issuing certificates and configuring TLS between services, but this is an additional project that can consume a lot of your DevOps team's time.

Cannot implement progressive delivery

An API gateway is not designed to understand the application subsets in Kubernetes. For example, if Service A has two versions, V1 and V2, running simultaneously (the former being the stable version and the latter the canary), the API gateway cannot be used to implement a canary deployment. The bottom line is that you cannot extend the API gateway to implement progressive delivery strategies such as canary or blue-green.

To overcome these limitations, your DevOps team can develop workarounds (such as multiple API gateways implemented in a federated manner), but note that maintaining such a setup would not be scalable and would be regarded as technical debt.

Hence, a service mesh infrastructure should be considered. Open-source Istio, originally developed by Google, IBM, and Lyft, is a widely used service mesh. Many advanced organizations, such as Airbnb, Splunk, Amazon.com, and Salesforce, have used Istio to gain agility and security in their network.

Introducing Istio service mesh

Istio is open-source service mesh software that abstracts networking and security away from the fleet of microservices.

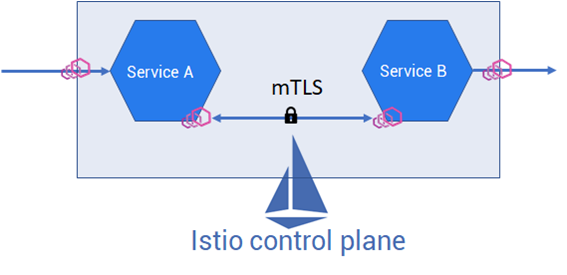

From an implementation point of view, Istio injects an Envoy proxy (a sidecar) alongside each service, and the proxy handles the L4 and L7 traffic. DevOps and cloud teams can then define network and security policies from a central control plane.

Fig E: Istio service mesh diagram
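One common way to turn on automatic sidecar injection is to label a Kubernetes namespace with `istio-injection=enabled`. The Python sketch below only builds such a Namespace manifest and prints it as JSON (which `kubectl apply -f` accepts alongside YAML). The namespace name `bookstore` is a hypothetical example, and the sketch assumes Istio's sidecar injector is already installed in the cluster.

```python
import json


def injection_namespace(name: str) -> dict:
    """Build a Namespace manifest that opts its pods into sidecar injection."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Label read by Istio's sidecar injector when pods are created.
            "labels": {"istio-injection": "enabled"},
        },
    }


# "bookstore" is a hypothetical namespace name used for illustration.
print(json.dumps(injection_namespace("bookstore"), indent=2))
```

Injection happens when a pod is created, so workloads that were already running in the namespace need to be restarted before they receive a sidecar.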

Since Istio can control traffic at the application and transport layers (L7 and L4), it becomes easier to manage and secure both north-south and east-west traffic. You can apply fine-grained network and security policies to east-west and north-south traffic in multi-cloud and hybrid cloud applications. The best part is that Istio provides central observability of network performance in one place.

With Istio, the DevOps team can implement canary or blue-green deployment strategies by combining their CI/CD tools with the service mesh's traffic routing.
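As an illustration of such policies, the Python sketch below builds two Istio security resources as plain dictionaries: a `PeerAuthentication` that requires mutual TLS for a namespace, and an `AuthorizationPolicy` that lets only one caller reach a workload. The namespace, app label, and service account names are hypothetical. The sketch also assumes the `security.istio.io/v1beta1` API version and the default `cluster.local` trust domain, both of which may differ in your installation.

```python
import json


def strict_mtls(namespace: str) -> dict:
    """Require mutual TLS for all workloads in a namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


def allow_only(namespace: str, target_app: str, caller_service_account: str) -> dict:
    """Allow traffic to `target_app` only from one caller identity."""
    # Assumes the default trust domain "cluster.local".
    principal = f"cluster.local/ns/{namespace}/sa/{caller_service_account}"
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "AuthorizationPolicy",
        "metadata": {
            "name": f"{target_app}-allow-{caller_service_account}",
            "namespace": namespace,
        },
        "spec": {
            "selector": {"matchLabels": {"app": target_app}},
            "action": "ALLOW",
            "rules": [{"from": [{"source": {"principals": [principal]}}]}],
        },
    }


# Hypothetical names: namespace "bookstore", app "echo-server", caller "author".
for manifest in (strict_mtls("bookstore"), allow_only("bookstore", "echo-server", "author")):
    print(json.dumps(manifest, indent=2))
```

The first resource addresses encryption and identity for east-west traffic, and the second limits which identity may call the echo server, which narrows what a compromised service can reach.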
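A canary rollout in Istio is typically expressed with a `DestinationRule` that names the version subsets and a `VirtualService` that splits traffic between them by weight. The Python sketch below generates both as dictionaries. The host name `service-a`, the `version` pod labels, and the 10% canary share are assumptions for illustration, as is the `networking.istio.io/v1beta1` API version.

```python
import json


def destination_rule(host: str) -> dict:
    """Declare the v1 and v2 subsets, selected by the pods' version label."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "DestinationRule",
        "metadata": {"name": host},
        "spec": {
            "host": host,
            "subsets": [
                {"name": "v1", "labels": {"version": "v1"}},
                {"name": "v2", "labels": {"version": "v2"}},
            ],
        },
    }


def canary_virtual_service(host: str, canary_percent: int) -> dict:
    """Send `canary_percent` of traffic to v2 and the rest to v1."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return {
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": host},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {"destination": {"host": host, "subset": "v1"},
                     "weight": 100 - canary_percent},
                    {"destination": {"host": host, "subset": "v2"},
                     "weight": canary_percent},
                ],
            }],
        },
    }


# Hypothetical rollout step: 10% of traffic to the canary.
print(json.dumps(destination_rule("service-a"), indent=2))
print(json.dumps(canary_virtual_service("service-a", 10), indent=2))
```

A CI/CD pipeline could regenerate the `VirtualService` with a higher `canary_percent` at each stage, ending at 100 once the new version is trusted.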

Read all the features of the Istio service mesh.

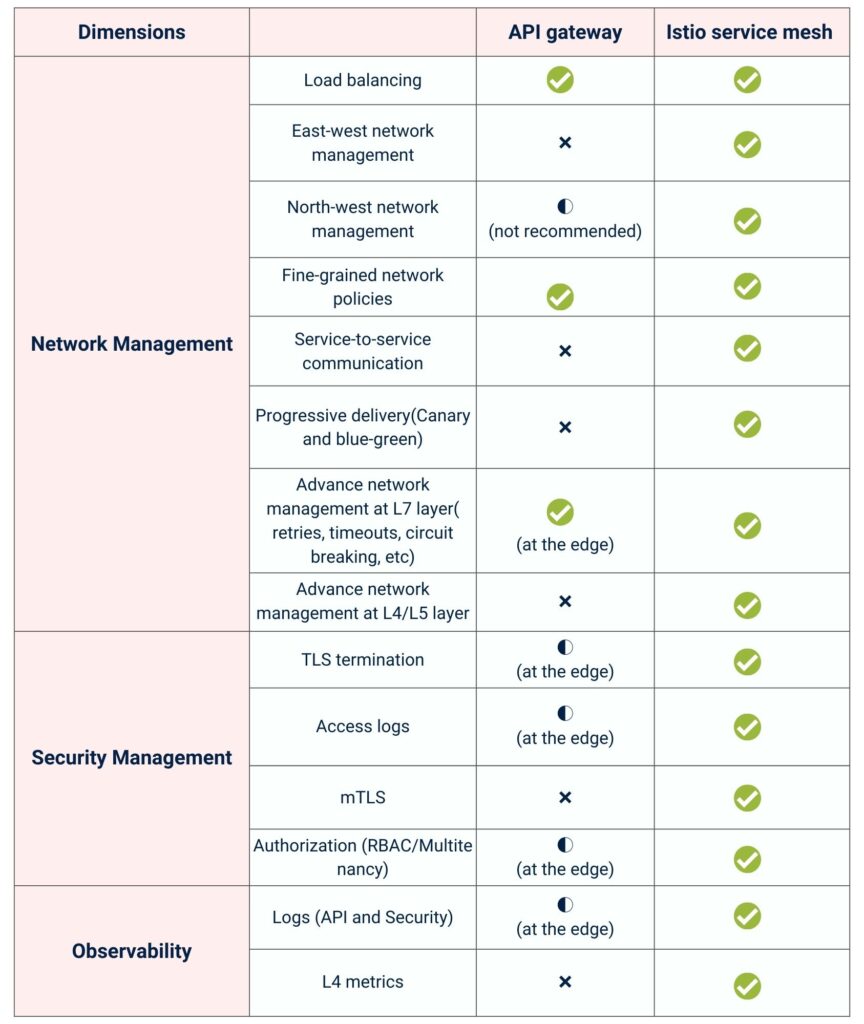

Tabular comparison of API gateway and Istio service mesh

The comparison looks at the API gateway and the Istio service mesh across a few dimensions, such as network management, security management, observability, and extensibility.

Conclusion

An API gateway is good for load balancing and other network management at the edge. Still, as you adopt microservices, cloud, and container technologies to attain scale, architects need to reimagine the network for agility and security. Istio service mesh is compelling because it is open source and receives heavy contributions from Google, IBM, and Red Hat.

In the next blog, our CTO, Ravi Verma, discusses various architectural scenarios for integrating an API gateway and Istio for app modernization.

About IMESH

IMESH helps enterprises to simplify and secure their network of cloud-native applications using Istio and Envoy. We also provide enterprise support for Istio service mesh. To help you overcome the toil of learning and experimenting with Istio, IMESH provides enterprise versions of Istio. With IMESH, you can implement Istio for your cloud applications from Day-1 without any operational hassle. We provide:

- Istio implementation into production

- Training and onboarding of Istio

- Istio lifecycle management with frequent patches and version upgrades

- Enhancements like multi-cluster implementation in AKS/GKE/EKS, FIPS compliance

- High-performance and highly available Istio

- Guaranteed SLAs for vulnerability fixes

- FIPS support

- 50+ integrations with CI/CD tools

- Customization to Istio and Envoy

Talk to one of our Istio experts for free, or contact us for enterprise Istio support.