Why do you need Istio ambient mesh?

Istio is widely seen as somewhat resource intensive because of its sidecar proxies. Although it offers many compelling security features, the whole of Istio (the sidecar) has to be deployed from day 1. Recently, the Istio community has introduced a new data plane, ambient mode, which is designed to be far less resource intensive. Istio ambient mesh is a modified, sidecar-less data plane developed for enterprises that want to deploy mTLS and other security features first and add advanced networking later. Read more on what Istio ambient mesh is.

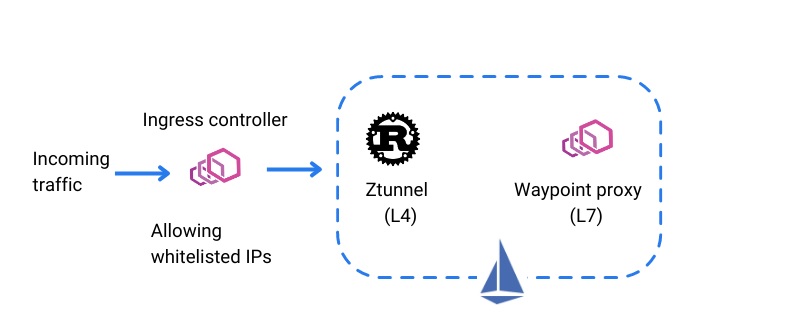

Ambient mesh has two layers:

- L4 secure overlay layer or ztunnel: implements mTLS for communication between services across nodes. Note that ztunnel is a Rust-based proxy.

- L7 processing layer or waypoint proxy: provides advanced L7 processing for security and networking, and thus unlocks the full range of Istio capabilities.

In this blog, we will explain how to implement Istio ambient mesh (with L4 and L7 authorization policies) in Google Kubernetes Engine and/or Azure AKS.

Prerequisites

Please ensure you have the following software and infrastructure available (the versions I used are noted):

- Kubernetes 1.23 or later. Version used for implementation: 1.25.6

- Istio 1.18.0-alpha.0 (Link: https://github.com/istio/istio/releases/)

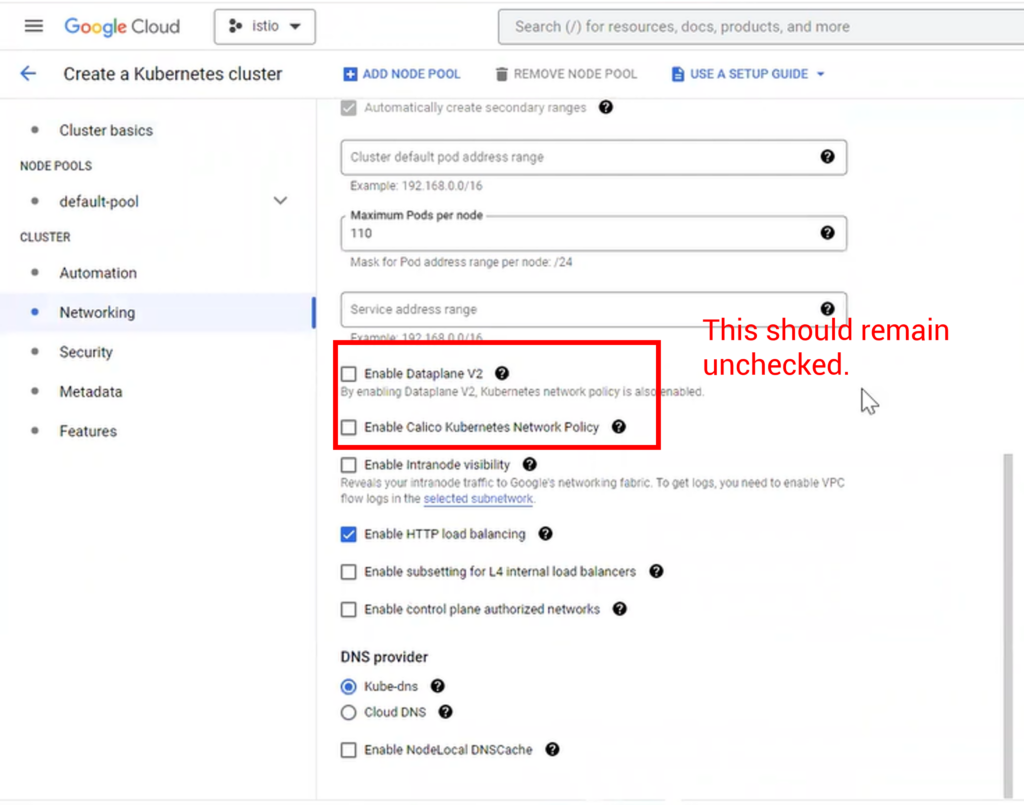

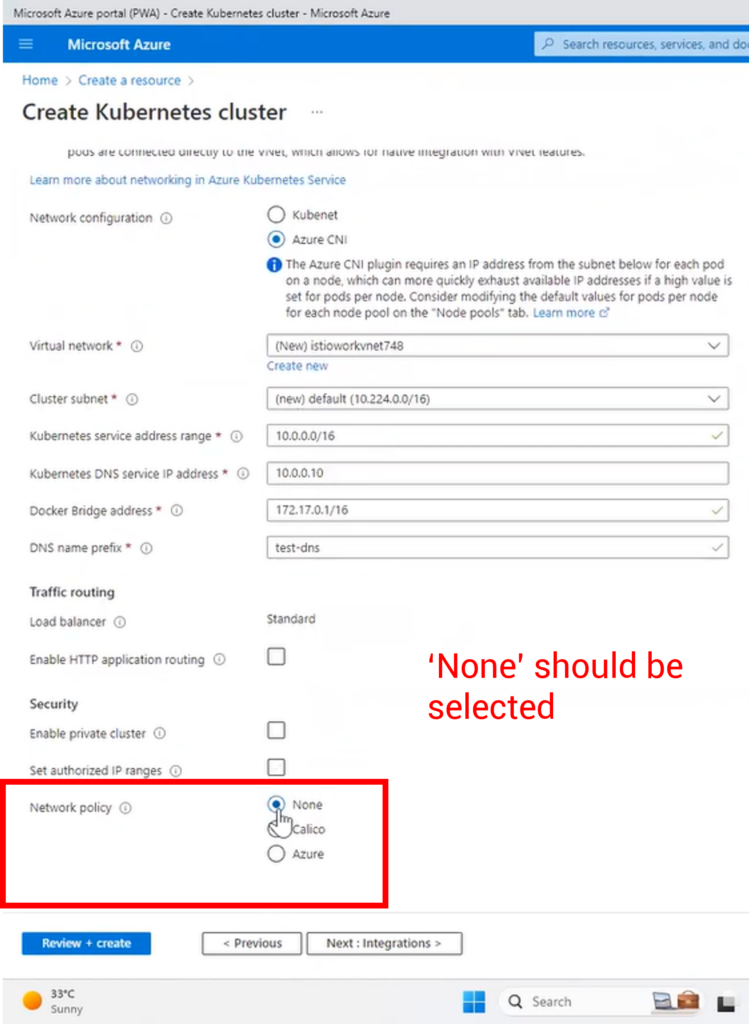

Note: The version of Istio ambient mesh used here (1.18.0-alpha.0) is an alpha release; a few features might not work, and it may not be stable enough for production. At the time of writing, ambient mesh does not work with the Calico CNI, so adjust your Google Kubernetes Engine and Azure AKS cluster settings accordingly (refer to the image below).

Steps to implement Istio ambient mesh

We will implement Istio ambient mesh in five major steps:

- Installation of Istio ambient mesh

- Creating and configuring services in Kubernetes cluster

- Implement Istio ambient mode and verify ztunnel and HBONE

- Enabling L4 authorization for services using ambient mesh

- Enabling L7 authorization for services using ambient mesh

Steps for installing Istio ambient mesh

Step-1: Download and extract Istio ambient mesh from the Git repo

Go to the Git repo https://github.com/istio/istio/releases/tag/1.18.0-alpha.0, then download and extract the Istio ambient mesh package on your local system (I’ve used the Windows version). Add the <extracted path of Istio installation package>/bin path to the PATH environment variable.

Step-2: Install Istio ambient mesh

Use the following command to install Istio ambient mesh to your cluster.

| istioctl install --set profile=ambient |

Istio will install the following components: Istio core, Istiod, Istio CNI, Ingress gateways, and Ztunnel.

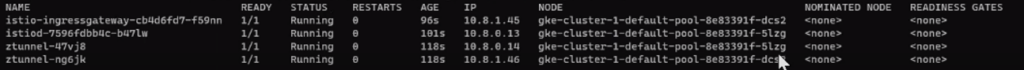

Step-3: Check if ztunnel and Istio CNI are installed at node level

After installation there will be a new namespace created named istio-system. You can check the pods by running the below command.

| kubectl get pods -n istio-system -o wide |

Since I have created two nodes, there are two ztunnel pods (daemonset) running here.

Similarly, you can use the following command to verify that Istio CNI is installed at the node level.

| kubectl get pods -n kube-system |

Note: istio-cni is deployed in istio-system namespace in case of AKS.
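The two checks above can be wrapped in small helpers. This is a minimal sketch, not part of the original setup: it assumes the DaemonSet is named `ztunnel` and that the CNI pods carry the `k8s-app=istio-cni-node` label, which is what the default ambient profile used in my understanding. The function names are hypothetical.

```shell
# Compare the number of ready ztunnel pods with the number of nodes.
# Assumption: the DaemonSet is called "ztunnel" and lives in istio-system.
ztunnel_covers_all_nodes() {
  nodes=$(( $(kubectl get nodes --no-headers | wc -l) ))
  ready=$(kubectl get daemonset ztunnel -n istio-system -o jsonpath='{.status.numberReady}')
  ready=${ready:-0}
  echo "nodes=${nodes} ztunnel_ready=${ready}"
  # Succeeds only when every node has a ready ztunnel pod.
  [ "$nodes" -eq "$ready" ]
}

# Search every namespace, since istio-cni lands in kube-system on GKE
# and in istio-system on AKS.
# Assumption: the CNI pods are labeled k8s-app=istio-cni-node.
find_istio_cni() {
  kubectl get pods --all-namespaces -l k8s-app=istio-cni-node -o wide
}
```

Calling `ztunnel_covers_all_nodes && find_istio_cni` gives a quick yes/no answer before moving on. Nodes with taints that ztunnel does not tolerate would make the counts differ.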

Steps to create and configure services in Kubernetes cluster

Step-1: Create a namespace named ambient for the deployments

| kubectl create namespace ambient |

Step-2: Create two services in separate nodes.

I have used the following yaml for deployment.yaml, service.yaml and service-account.yaml. You can refer to the files in the GitHub repo: https://github.com/IMESHinc/webinar.

Code for demo-deployment-1.yaml.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: echoserver-depl-1
      namespace: ambient
      labels:
        app: echoserver-depl-1
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: echoserver-app-1
      template:
        metadata:
          labels:
            app: echoserver-app-1
        spec:
          serviceAccountName: echo-service-account-1
          containers:
          - name: echoserver-app-1
            image: imeshai/echoserver
            ports:
            - containerPort: 80

Code for demo-service-1.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: echoserver-service-1
      namespace: ambient
    spec:
      selector:
        app: echoserver-app-1
      ports:
      - port: 80
        targetPort: 80

Code for demo-service-account-1.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: echo-service-account-1
      namespace: ambient
      labels:
        account: echo-one

Similarly you can create deployments, service and service-account files for creating the 2nd service.
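If you prefer not to copy the files by hand, the second set can be derived from the first. The sketch below assumes the three service-1 files are in the current directory and that every resource name ends in `-1` at the end of a line with no trailing whitespace. The `echo-two` label value and the function name are my own hypothetical choices.

```shell
# Derive demo-*-2.yaml from demo-*-1.yaml by renaming the "-1" suffix.
# Only a "-1" at the very end of a line is rewritten, so "apps/v1" and
# "replicas: 2" are left untouched.
derive_second_service() {
  for kind in deployment service service-account; do
    src="demo-${kind}-1.yaml"
    dst="demo-${kind}-2.yaml"
    # The echo-one -> echo-two rename is a hypothetical naming choice.
    sed -e 's/-1$/-2/' -e 's/echo-one$/echo-two/' "$src" > "$dst"
  done
}
```

Run `derive_second_service` once and review the generated files before applying them.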

Deploy two services in the Kubernetes cluster by using the command:

| kubectl apply -f demo-service-account-1.yaml |

| kubectl apply -f demo-deployment-1.yaml |

| kubectl apply -f demo-service-1.yaml |

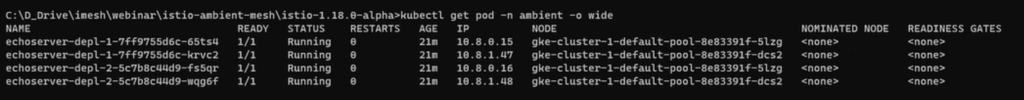

You can verify that your pods and svc are running by executing the following commands:

| kubectl get pods -n <<namespace>> |

| kubectl get svc -n <<namespace>> |

Note: Since I have selected two replicas for each service, Kubernetes automatically created the pods in each node to balance the load. However, you can also state explicitly in the deployment yaml that the pods should be created on two different nodes.
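One way to make the placement explicit is a topology spread constraint. This is a sketch of one option, not the configuration I used in the demo: the patch file name and the function name are hypothetical, and pod anti-affinity would be another valid choice.

```shell
# Write a patch that asks the scheduler to keep the replica count per node
# within a skew of 1, keyed on the node hostname.
cat > spread-patch.yaml <<'EOF'
spec:
  template:
    spec:
      topologySpreadConstraints:
      - maxSkew: 1
        topologyKey: kubernetes.io/hostname
        whenUnsatisfiable: DoNotSchedule
        labelSelector:
          matchLabels:
            app: echoserver-app-1
EOF

# Apply the patch to the first deployment (strategic merge is the default type).
apply_spread_patch() {
  kubectl patch deployment echoserver-depl-1 -n ambient --patch-file spread-patch.yaml
}
```

After `apply_spread_patch`, `kubectl get pods -n ambient -o wide` should show the two replicas on different nodes, provided both nodes are schedulable.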

Step-3: Create Istio gateway and virtual services to allow external traffic to the newly created services

Once the two services are created, we can create an ingress gateway to allow internet traffic to reach them. (The names of my services are echoserver-service-1 and echoserver-service-2 respectively.)

I have created a demo-gateway.yaml file (code below) to link to Istio ingress gateway.

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: echoserver-gateway
      namespace: ambient
    spec:
      selector:
        istio: ingressgateway
      servers:
      - port:
          number: 80
          name: http
          protocol: HTTP
        hosts:
        - "*"

Code for the Istio VirtualService yaml file, which routes traffic to service1 and service2 when the URL path matches ‘/echo1’ and ‘/echo2’ respectively.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: echoserver-virtual-service
      namespace: ambient
    spec:
      hosts:
      - "*"
      gateways:
      - echoserver-gateway
      http:
      - match:
        - uri:
            exact: /echo1
        route:
        - destination:
            host: echoserver-service-1
            port:
              number: 80
      - match:
        - uri:
            exact: /echo2
        route:
        - destination:
            host: echoserver-service-2
            port:
              number: 80

Apply the yaml files in the Kubernetes cluster to create Istio ingress gateway and virtual service objects.
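A minimal sketch of that apply step follows. `demo-gateway.yaml` is the file named above; the virtual service file name is not given in this post, so `demo-virtual-service.yaml` is a hypothetical default you can override with the first argument.

```shell
# Apply the Gateway, then the VirtualService, then list both objects.
# The fully qualified resource names avoid confusion with the Kubernetes
# Gateway API "gateway" kind, which is installed later in this post.
apply_ingress_routing() {
  kubectl apply -f demo-gateway.yaml &&
  kubectl apply -f "${1:-demo-virtual-service.yaml}" &&
  kubectl get gateways.networking.istio.io,virtualservices.networking.istio.io -n ambient
}
```

For example, `apply_ingress_routing my-virtual-service.yaml` applies both files and prints the resulting objects in the ambient namespace.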

You can check the status of the Istio ingress gateway resource in the istio-system namespace by running the command:

| kubectl get service -n istio-system |

Step-4: Access the services from the browser

You can use the external IP address of the Istio gateway to access the services.
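The same check can be done from a terminal. This sketch assumes the ingress service is named `istio-ingressgateway` (the default name) and that the load balancer reports an IP rather than a hostname. The function names are hypothetical.

```shell
# Read the external IP of the Istio ingress gateway service.
ingress_ip() {
  kubectl get service istio-ingressgateway -n istio-system \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Request both routes and print the HTTP status code for each path.
check_echo_routes() {
  ip=$(ingress_ip)
  for path in /echo1 /echo2; do
    code=$(curl -s -o /dev/null -w '%{http_code}' "http://${ip}${path}")
    echo "${path} ${code}"
  done
}
```

Running `check_echo_routes` prints one line per path, which is handy for repeating the check after each later step.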

By default, the communication will not go through the ztunnel of Istio ambient mesh, so we have to enable it by adding the namespace to the mesh.

Steps to verify communication through ztunnel (mTLS) in ambient mesh

Step-0 (Optional): Log the ztunnel and Istio CNI

This step is optional. To observe the logs of ztunnel and Istio CNI while the service communication transitions to Istio ambient mode, you can run these commands:

| kubectl logs -f <<istio-cni-pod-name>> -n kube-system |

| kubectl logs -f <<ztunnel-pod-name>> -n istio-system |

Step-1: Apply ambient mesh to the namespace

You need to apply Istio Ambient mesh to the namespace by using the following command:

| kubectl label namespace ambient istio.io/dataplane-mode=ambient |

Both the services would be a part of the Istio ambient service mesh now. You can verify by again accessing them from the browser.
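To confirm the label from the command line, or to take the namespace out of the mesh again, something like the following should work. The function names are hypothetical; the trailing dash in `kubectl label` is the standard way to remove a label.

```shell
# Show the namespace with its dataplane-mode label as an extra column.
show_dataplane_mode() {
  kubectl get namespace "${1:-ambient}" -L istio.io/dataplane-mode
}

# Remove the label to take the namespace out of ambient mode.
leave_ambient() {
  kubectl label namespace "${1:-ambient}" istio.io/dataplane-mode-
}
```

Both helpers default to the `ambient` namespace and accept another namespace name as the first argument.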

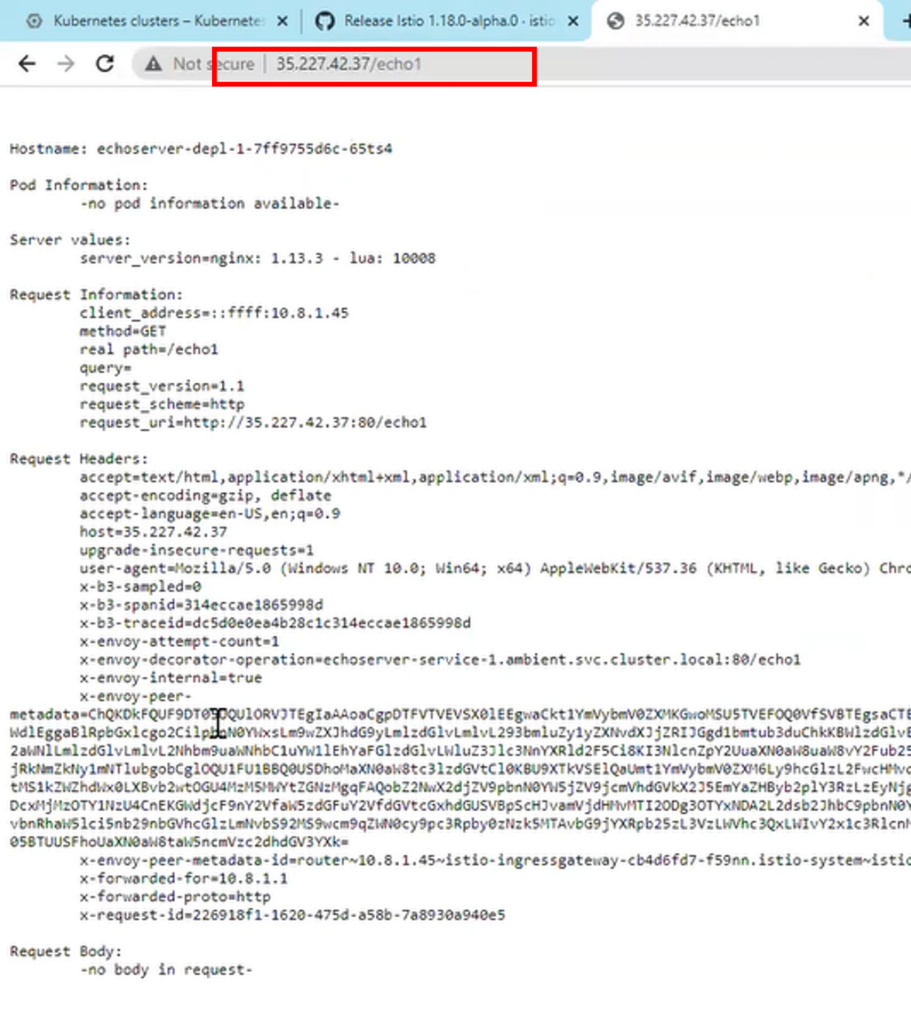

Step-2: Verify the communication through ztunnel of external traffic

If you open the browser and access the services (echoserver-service-1 and 2 for me), you will see in the ztunnel logs that the communication is already happening through the ztunnel.

Step-3: Verify the HBONE of service-to-service communication

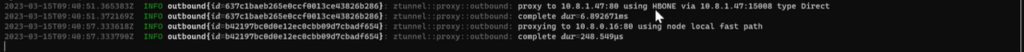

You can also verify that your service-to-service communication is secured by letting one pod communicate with another (and then checking the logs of the ztunnel pods).

Log into one of the pods of a service (say echoserver-service-1) and use bash to send requests to another service (say echoserver-service-2).

You can use the following command to go to bash of one pod:

| kubectl exec -it <<pod name of service-1>> -n <<namespace>> -- bash |

Use curl to send a request to the other service:

| curl <<service-2>> |

You will see in the logs of one of the ztunnel pods that the communication is already happening over HBONE (a secure overlay tunnel for communication between two pods in different nodes).
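Rather than tailing each ztunnel pod separately, you can filter the logs of all of them at once. This is a sketch under the assumption that the ztunnel pods carry the `app=ztunnel` label. The function name is hypothetical, and the search term is left to you, since the exact log wording may differ between versions.

```shell
# Print recent log lines from all ztunnel pods that match a pattern.
# $1 = case-insensitive pattern, $2 = lines to read per pod (default 200).
ztunnel_logs_matching() {
  kubectl logs -n istio-system -l app=ztunnel --tail="${2:-200}" --prefix |
    grep -i -- "$1"
}
```

For instance, `ztunnel_logs_matching hbone` or `ztunnel_logs_matching 15008` right after the curl request narrows the output to the relevant connections. The `--prefix` flag shows which ztunnel pod, and therefore which node, handled each one.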

Step-4: Verification of mTLS-based communication in service-to-service communication

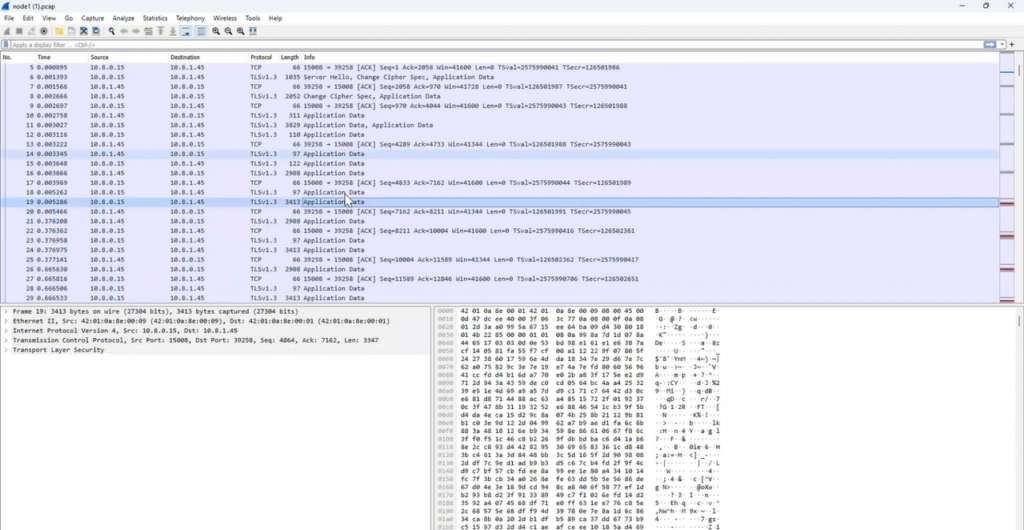

Connect over SSH to one of the nodes to dump the TCP packets and analyze the traffic; this shows whether the communication between the two nodes goes through the secure channel.

Execute the following command in the node-ssh: (15008 port is used for HBONE communication in Istio ambient mesh). We will write the logs into node1.pcap

| sudo tcpdump -nAi ens4 port 9080 or port 15008 -w node1.pcap |

You can curl a service from one pod and then download the node1.pcap file from the node. When you open the file in a network analyzer, you can inspect the captured packets.

You will observe that all the application data exchanged between the two nodes is secured with mTLS encryption.
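If you do not have a graphical analyzer at hand, tcpdump can read the capture back and give a rough packet count per port. A minimal sketch, with hypothetical function names, reusing the two ports from the capture command above:

```shell
# Count the packets in a capture file that match a tcpdump filter.
# $1 = capture file, $2 = filter expression
count_packets() {
  tcpdump -nr "$1" "$2" 2>/dev/null | wc -l
}

# Summarize how much traffic used the HBONE port versus the plain port.
summarize_capture() {
  file="${1:-node1.pcap}"
  hbone=$(( $(count_packets "$file" 'port 15008') ))
  app=$(( $(count_packets "$file" 'port 9080') ))
  echo "hbone(15008)=${hbone} app(9080)=${app}"
}
```

When traffic between the nodes is tunneled, I would expect the count for port 15008 to be non-zero and the plain port count to stay at zero. Adjust the second port if your application listens elsewhere.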

Steps to create L4 authorization policies in Istio ambient mesh

Step-1: Create an authorization policy yaml in Istio

Create a demo-authorization-L4.yaml file with a policy that allows traffic to the service-1 pods only from the Istio ingress gateway, and not from any other service. The rule identifies the ingress gateway by the principal of its service account.

    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: echoserver-policy
      namespace: ambient
    spec:
      selector:
        matchLabels:
          app: echoserver-app-1
      action: ALLOW
      rules:
      - from:
        - source:
            principals: ["cluster.local/ns/istio-system/sa/istio-ingressgateway-service-account"]

Use the command to apply the yaml file.

| kubectl apply -f demo-authorization-L4.yaml |

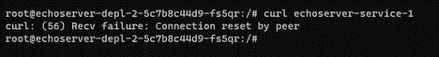

Note: If you now reach service-1 (echoserver-service-1) from the browser, you can access it without any problem. But if you curl it from one of the pods of service-2, the request fails (refer to the screenshot).
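The negative case can be scripted so it is easy to repeat. This sketch assumes the second deployment is named `echoserver-depl-2` (the post does not show its manifest) and that curl is available in the container. The function name is hypothetical.

```shell
# Try to reach service-1 from a service-2 pod and expect the call to fail.
expect_denied_from_service2() {
  # deploy/echoserver-depl-2 is an assumed name for the second deployment.
  if kubectl exec -n ambient deploy/echoserver-depl-2 -- \
       curl -s --max-time 5 -o /dev/null http://echoserver-service-1; then
    echo "unexpected: request succeeded"
    return 1
  fi
  echo "denied as expected"
}
```

Because the policy is enforced at L4, the failure shows up as a dropped or reset connection rather than an HTTP status code, which is why the helper only looks at curl's exit status.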

Steps to create L7 authorization policies using waypoint proxy

For L7 authorization policies, we have to create a waypoint proxy. The waypoint proxy can be configured using the Kubernetes Gateway API. Note that the Gateway API CRDs might not be available by default with most cloud providers, so we need to install them.

Step-1: Download Kubernetes gateway API CRDs

Use the command to download gateway API CRDs using Kustomize.

| kubectl kustomize "github.com/kubernetes-sigs/gateway-api/config/crd?ref=v0.6.1" > gateway-api.yaml |

Step-2: Apply Kubernetes gateway API

Use the command to apply gateway API CRDs.

| kubectl apply -f gateway-api.yaml |
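Some clusters already ship the Gateway API CRDs, so it can be worth checking before applying. A small sketch, with a hypothetical function name, that applies the downloaded file only when the Gateway CRD is missing:

```shell
# Apply gateway-api.yaml only if the Gateway CRD is not installed yet.
ensure_gateway_api_crds() {
  if kubectl get crd gateways.gateway.networking.k8s.io >/dev/null 2>&1; then
    echo "Gateway API CRDs already present"
  else
    kubectl apply -f gateway-api.yaml
  fi
}
```

This avoids overwriting CRDs that the cloud provider manages at a different version.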

Step-3: Create waypoint proxy of Kubernetes gateway API kind

We can create a waypoint proxy of gateway API with a yaml file. You can use the demo-waypoint-1.yaml. We have basically created a waypoint proxy for service-1 (echoserver-service-1).

    apiVersion: gateway.networking.k8s.io/v1beta1
    kind: Gateway
    metadata:
      name: echoserver-gtw-1
      namespace: ambient
      annotations:
        istio.io/for-service-account: echo-service-account-1
    spec:
      gatewayClassName: istio-waypoint
      listeners:
      - allowedRoutes:
          namespaces:
            from: Same
        name: imesh.ai
        port: 15008
        protocol: ALL

And apply this to the K8s cluster.

| kubectl apply -f demo-waypoint-1.yaml |
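Before writing the policy, it helps to confirm that the waypoint actually came up. This sketch relies on the `istio.io/gateway-name` label, the same label the L7 policy selects on. The function name is hypothetical.

```shell
# Show the waypoint Gateway object and the proxy pod created for it.
# The fully qualified resource name avoids a clash with Istio's own
# networking.istio.io Gateway kind used earlier for ingress.
show_waypoint() {
  kubectl get gateways.gateway.networking.k8s.io echoserver-gtw-1 -n ambient &&
  kubectl get pods -n ambient -l istio.io/gateway-name=echoserver-gtw-1 -o wide
}
```

If the second command lists no pods, the waypoint has not been deployed yet and the L7 policy will have nothing to attach to.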

Step-4: Create L7 authorization policy to declare the waypoint proxy for traffic

Create an L7 authorization policy that the waypoint proxy (echoserver-gtw-1) will enforce on traffic. You can use the following demo-authorization-L7.yaml file to write the policy.

    apiVersion: security.istio.io/v1beta1
    kind: AuthorizationPolicy
    metadata:
      name: echoserver-policy
      namespace: ambient
    spec:
      selector:
        matchLabels:
          istio.io/gateway-name: echoserver-gtw-1
      action: ALLOW
      rules:
      - from:
        - source:
            principals: ["cluster.local/ns/istio-system/sa/istio-ingressgateway-service-account"]
        to:
        - operation:
            methods: ["GET"]

Use the command to apply the yaml file.

| kubectl apply -f demo-authorization-L7.yaml |

Step-5: Verify the L7 authorization policy

As we have created a waypoint proxy for service-1 and applied a policy that allows GET requests from the Istio ingress gateway, you can still access service-1 (echoserver-service-1) from the browser.

However, if you try to access service-1 from one of the pods of service-2 (echoserver-service-2), the waypoint proxy will not allow the traffic as per the policy (refer to the screenshot below).
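Since the policy names the GET method, the L7 part can also be exercised through the ingress gateway by comparing two HTTP methods. A minimal sketch, with a hypothetical function name. The first argument is the external IP of the ingress gateway.

```shell
# Send a GET and a POST through the ingress gateway and print the status codes.
# $1 = ingress IP or hostname, $2 = path (defaults to /echo1)
probe_methods() {
  for method in GET POST; do
    code=$(curl -s -o /dev/null -w '%{http_code}' -X "$method" "http://$1${2:-/echo1}")
    echo "${method} ${code}"
  done
}
```

With the policy in place, I would expect the GET to succeed and the POST to be rejected by the waypoint, typically with a 403. The exact code is worth confirming in your own cluster.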

Conclusion

Ambient mesh is cost-efficient and less resource intensive, and it lets you adopt Istio in a staggered manner. We expect Istio adoption to grow after the ambient release.

If you want to adopt enterprise Istio for your project and adopt it without any operation hassle, please feel free to talk to an Istio expert or book an Istio service mesh pilot.

About IMESH

IMESH offers solutions to help you avoid errors during the experimentation of implementing Istio and fend off operational issues. IMESH provides a platform built on top of Istio and Envoy API gateway to help start with Istio from Day-1. IMESH Istio platform is hardened for production and is fit for multicloud and hybrid cloud applications. IMESH also provides consulting services and expertise to help you adopt Istio rapidly in your organization.

IMESH also provides a strong visibility layer on top of Istio which provides Ops and SREs a multicluster view of services, dependencies, and network traffic. The visibility layer also provides details of logs, metrics, and traces to help Ops folks to troubleshoot any network issues faster.