It makes sense for DevOps engineers and architects to perform canary deployments in their CI/CD workflows. They cannot skip testing a release for the sake of adhering to continuous delivery practices, can they?

In canary deployments, the new version, called the canary, is first tested with a limited share of live traffic. Ops teams and SREs then observe and analyze the performance and customer experience of the canary, and gradually roll it out to the larger audience if no issues are found.

The crucial part of a canary deployment is to split the live traffic and route a small portion of it to the canary. Architects and DevOps teams need the best tool to carry out such traffic splitting between services. Of course, API gateways can do it. But it is difficult to split traffic between internal services or subsets with API gateways.

This is where the open-source Istio service mesh comes in. Istio provides 5 traffic management API resources to handle traffic from the edge and also between service subsets:

- Virtual services

- Destination rules

- Gateways

- Service entries

- Sidecars

Out of all the above resources, virtual services and destination rules form the core of Istio’s traffic routing features. Canary deployment is just one of the use cases they support. Let us explore what virtual services and destination rules are, and how they work.

But before we begin, let us understand how Istio routes and load balances traffic by default.

A quick introduction to Istio Envoy proxy load balancing

Istio uses Envoy proxy as its data plane. Envoy proxy runs as a sidecar container with each application pod and intercepts the traffic going in and out of the pod.

Istio’s Envoy proxy uses the least requests model to load balance traffic by default. That means, two random service instances (pods) are selected from a service’s load balancing pool (or replicas) and the traffic is routed to the pod that has fewer active requests to serve. This prevents service instances or pods from request overloading and ensures effective utilization of resources.

Istio works fine with the default load-balancing model. However, there are circumstances where you want to configure certain rules. For example, consider the below scenarios:

- You want to change the default routing policy into a weighted or round-robin model.

- You want to limit the number of simultaneous connections or requests to upstream services.

- You want to set an outlier detection policy to eject unhealthy pods for a certain amount of time to keep the infrastructure resilient.

- You would like to A/B test between two versions of services — split traffic and route a certain percentage of them to the new version.

These are some instances where DevOps and cloud architects can use `VirtualServices` and `DestinationRules`.

What are Istio virtual services?

An Istio virtual service is a Kubernetes custom resource (defined by a CRD) that specifies the routing rules for traffic within a mesh. A virtual service routes requests to their respective destinations if they meet the matching criteria defined in the `VirtualService` YAML file.

The matching conditions can be applied to parameters in the HTTP messages, such as URIs, traffic ports, header fields, etc. The Istio virtual service resource supports HTTP/1.1, HTTP/2, and gRPC traffic.

Sample VirtualService YAML

The below `VirtualService` YAML will route traffic with the URIs `/istio-support` and `/istio-implementation` addressing the host `imesh.ai`, to `istio-support` and `istio-implementation` services, respectively.

    apiVersion: networking.istio.io/v1beta1
    kind: VirtualService
    metadata:
      name: imesh-route
    spec:
      hosts:
      - imesh.ai
      http:
      - match:
        - uri:
            prefix: /istio-support
        route:
        - destination:
            host: istio-support
      - match:
        - uri:
            prefix: /istio-implementation
        route:
        - destination:
            host: istio-implementation

Below are some parameters for virtual service resources to create the rules for runtime traffic:

- hosts: In the YAML, the `hosts` field is the destination host (DNS name, IP address, or FQDN) that the routing rules apply to. `hosts` is set to `imesh.ai` in the above example, so the `VirtualService` will only apply to requests addressing that host.

- http: The `http` section defines the virtual service’s routing rules for traffic to `hosts`. It contains the match conditions and the destination where the traffic should go based on those conditions. Apart from HTTP, virtual services can route TCP and unterminated TLS traffic.

- match: The `match` field sets conditions that the incoming request has to satisfy for it to be routed to the destination service. This can be based on URIs, HTTP headers, methods, source IP addresses, etc. Here, the `match` has `uri` subfields with `prefix` attributes that match the URIs of incoming requests, which are `/istio-support` and `/istio-implementation` respectively.

- destination: The `destination` defined under the `route` section specifies the actual destination for traffic that matches the respective conditions. For effective traffic distribution, the destination service should exist in Istio’s service registry, either by default (Envoy proxy) or added using a service entry. The destination can also be a service subset.

The above fields give only a gist of the routing rules that DevOps and cloud engineers can configure with virtual services. For the comprehensive list, see the Virtual Service reference in the Istio documentation.
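As a further illustration of the `match` field, here is a minimal sketch of header-based matching. The header name `x-beta-user`, the resource name `imesh-header-route`, and the `istio-support-beta` service are hypothetical and chosen only for illustration. Conditions inside a single `match` block are ANDed, and rules are evaluated from top to bottom, with the first matching rule winning.

```yaml
# Hypothetical sketch: x-beta-user and istio-support-beta are assumptions,
# not names taken from the example above.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: imesh-header-route
spec:
  hosts:
  - imesh.ai
  http:
  # Rule 1: /istio-support requests that also carry the header
  # go to the (assumed) beta service.
  - match:
    - uri:
        prefix: /istio-support
      headers:
        x-beta-user:
          exact: "true"
    route:
    - destination:
        host: istio-support-beta
  # Rule 2: every other /istio-support request goes to the regular service.
  - match:
    - uri:
        prefix: /istio-support
    route:
    - destination:
        host: istio-support
```

Placing the more specific rule first matters here, because a request that matches both rules is handled by whichever one appears earlier.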

What are Istio destination rules?

An Istio destination rule is another Kubernetes custom resource that defines rules for traffic after the virtual service routing rules have been evaluated. In other words, `DestinationRule` defines what happens to the traffic routed to a given destination. Destination rules let users customize the following traffic policies of Envoy proxy:

- Load balancing model

- Connection pool size from the sidecar

- Outlier detection settings

- TLS security mode

- Circuit breaking settings

Sample DestinationRule YAML

We configured the above `VirtualService` to route requests for `imesh.ai/istio-support` to the `istio-support` service. Assuming that we have two subsets of `istio-support` deployed, this is what the `DestinationRule` YAML would look like:

    apiVersion: networking.istio.io/v1beta1
    kind: DestinationRule
    metadata:
      name: istio-support-destination
    spec:
      host: istio-support
      subsets:
      - name: istio-support-v1
        labels:
          version: v1
      - name: istio-support-v2
        labels:
          version: v2
      trafficPolicy:
        loadBalancer:
          simple: ROUND_ROBIN

Traffic to the `istio-support` service will be load balanced using the round-robin algorithm. The two subsets (`istio-support-v1` and `istio-support-v2`) receive traffic only if they are referenced in the corresponding `VirtualService`, since the routing is defined there.
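To make the link between the two resources concrete, here is a minimal sketch of a `VirtualService` that references the subsets defined above and splits traffic between them, as in a canary rollout. The resource name `imesh-route-canary` and the 90/10 weights are assumptions for illustration only.

```yaml
# Hypothetical sketch: the name and the 90/10 split are assumptions.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: imesh-route-canary
spec:
  hosts:
  - imesh.ai
  http:
  - match:
    - uri:
        prefix: /istio-support
    route:
    # Most traffic stays on the stable subset.
    - destination:
        host: istio-support
        subset: istio-support-v1
      weight: 90
    # A small share goes to the canary subset.
    - destination:
        host: istio-support
        subset: istio-support-v2
      weight: 10
```

The `subset` names must match the `name` entries in the `DestinationRule`, and the weights across the routes of a rule should add up to 100. Shifting more traffic to the canary is then a matter of adjusting the two weights.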

Typically, both virtual services and destination rules work together to apply policies and send traffic to their respective destinations. But sometimes, they do not need each other:

- If the service has only one version and there are no granular routing requirements such as load balancing policy, connection pool settings, etc., `VirtualService` alone will be enough.

- `DestinationRule` independently is sufficient if you only need to apply traffic policies like load balancing policy or circuit breaking to specific services.
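As an example of the second case, the following sketch shows a standalone `DestinationRule` that applies connection limits and outlier detection to `istio-support` without any `VirtualService`. The resource name and all numeric values are assumptions picked for illustration and would need tuning for a real workload.

```yaml
# Hypothetical sketch: the name and every threshold below are assumed values.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: istio-support-resilience
spec:
  host: istio-support
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100          # cap on connections to the service
      http:
        http1MaxPendingRequests: 50  # cap on queued requests
        maxRequestsPerConnection: 10
    outlierDetection:
      consecutive5xxErrors: 5        # eject a pod after 5 consecutive 5xx errors
      interval: 10s                  # how often pods are scanned
      baseEjectionTime: 30s          # minimum time a pod stays ejected
      maxEjectionPercent: 50         # never eject more than half the pods
```

Together, the connection pool limits and outlier detection give the circuit-breaking behavior mentioned above.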

How do virtual services and destination rules work?

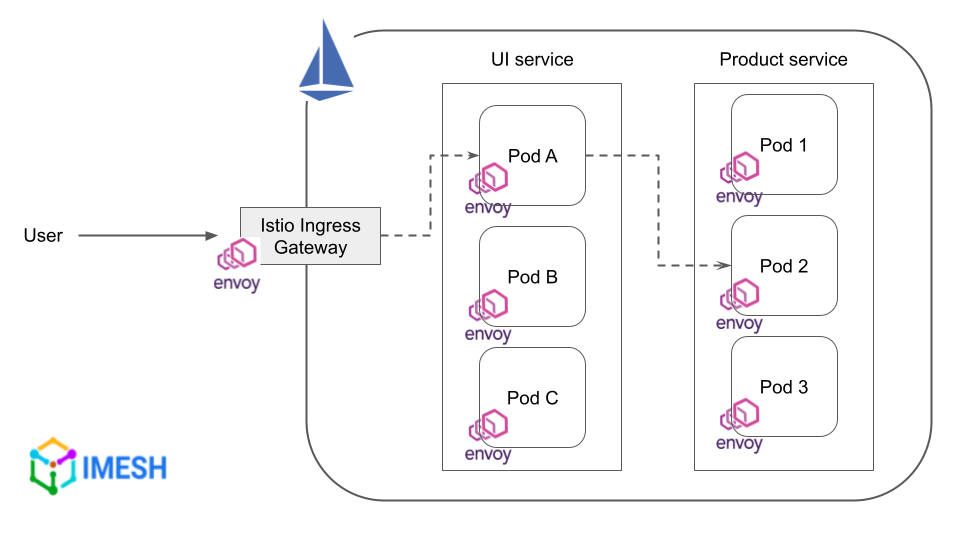

The control plane component of Istio, called istiod, applies virtual services and destination rules to all data plane proxies by default. However, note that it is the Envoy proxy at the source which controls the routing rules and not the one at the destination.

For example, if the traffic coming from the `UI` service (`pod A`) to the `product` service (`pod 2`) needs to have retry logic configured (refer to the image above), the proxy of `pod A` controls this configured behavior, not the proxy of `pod 2`.

Many DevOps and cloud engineers who try to understand virtual services and destination rules fall into the trap of believing that they are controlled by the destination proxy. But if you look at why it is not the case, it is all very logical:

- The sidecar proxy of `pod 2` is only aware of its own application container, `pod 2`. It is not aware of the containers of `pod 1` or `pod 3`. So if the request to `pod 2` keeps on failing, `pod 1` or `pod 3` still will not serve that request.

  In the case of `UI` service, it sits outside `product` service’s cluster and is fully aware of the services (`pod 1`, `pod 2`, `pod 3`) in the cluster. (Note that cluster here means a set of similar services, not Kubernetes cluster.) So, `UI` service’s proxy can route traffic to `pod 1` or `pod 3` when a request to `pod 2` fails.

- The case is similar when you configure traffic splitting between services. For example, if you want 25% of the traffic from the `UI` to go to `pod 1` and 10% to `pod 2`, Envoy proxies of the `UI` pods control that rule.

  The proxy of `pod 2` cannot do anything because it is the destination and the traffic has already reached there. It cannot forward the traffic to other service instances. At the same time, `UI` service’s pods that sit outside can see and control the behavior of traffic to the cluster, including forwarding requests based on weights.

- The same happens with routing to subsets (in the least-request load balancing model, for example) using `DestinationRule`. To send traffic to subsets with the least active requests, the proxy needs to monitor traffic to the pods in the cluster. And it can only be done by the proxy outside the cluster.

- Now, assume that there is a new service called `reviews`. It is not deployed in an Istio-enabled namespace and thus does not have a sidecar proxy. If `reviews` sends traffic to an Istio-enabled service, you cannot configure any routing rules on the requests since the source does not have the proxy to apply virtual services or destination rules.

Always remember: Envoy proxy at the source controls the routing rules, not the one at the destination.
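The retry scenario described above, with `UI` calling `product`, could be sketched as the following `VirtualService`. The resource name, the retry count, and the timeout values are assumptions for illustration. Although the resource names `product` as its host, the retries are carried out by the sidecar proxies of the calling `UI` pods.

```yaml
# Hypothetical sketch: the name, attempts, and timeouts are assumed values.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: product-retries
spec:
  hosts:
  - product
  http:
  - route:
    - destination:
        host: product
    retries:
      attempts: 3                    # retry a failed request up to 3 times
      perTryTimeout: 2s              # time allowed for each attempt
      retryOn: 5xx,connect-failure   # conditions that trigger a retry
    timeout: 10s                     # overall budget for the request
```

If the caller has no sidecar proxy, as with the `reviews` service mentioned above, none of these settings take effect for its requests.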

(If you are interested in seeing a demo of how to implement canary using Istio, check this out: How to implement Canary for Kubernetes apps using Istio)

Features and use cases of Istio virtual services and destination rules

Virtual services and destination rules help in applying granular routing rules. Besides, they provide features to test and ensure network resiliency, so that applications operate reliably.

Below are some features and use cases of virtual services and destination rules:

- Canary deployments

- Blue/green deployments

- Timeouts

- Retries

- Fault injection

- Circuit breakers

- Mirroring

We have covered each of them here: Traffic Management and Network Resiliency with Istio Service Mesh.

About IMESH

IMESH helps enterprises adopt Istio and Envoy without any implementation or operational hassle. By implementing Istio for your cloud applications from day 1, we save DevOps and cloud architects from hours of toil and experimentation to learn Istio.
