Why is Envoy Proxy required?

Organizations moving their applications from a monolithic to a microservices architecture face plenty of challenges. Managing and monitoring the sheer number of distributed services across Kubernetes and public cloud often exhausts app developers, cloud teams, and SREs. Below are some of the major network-level operational hassles of microservices, which show why Envoy proxy is required.

Lack of secure network connection

Kubernetes is not secure by default because services are allowed to talk to each other freely. This poses a great threat to the infrastructure, since an attacker who gains access to one pod can move laterally across the network and compromise other services. It is a huge problem for security teams, as it becomes harder to ensure the safety and integrity of sensitive data. The traditional perimeter-based firewall approach and intrusion detection systems will not help in such cases either.

Complying with security policies is a huge challenge

No developer enjoys writing security logic for authentication and authorization instead of working on business problems. However, organizations that must adhere to regulations such as HIPAA or GDPR often ask their developers to write security logic, such as mTLS encryption, into their applications. In enterprises this leads to two consequences: frustrated developers, and security policies implemented locally and in silos.

Lack of visibility due to complex network topology

Typically, microservices are distributed across multiple Kubernetes clusters and cloud providers. Communication between these services, within and across cluster boundaries, quickly produces a complex network topology. As a result, it becomes hard for Ops teams and SREs to have visibility over the network, which impedes their ability to identify and resolve network issues in a timely manner. This can lead to frequent application downtime and compromised SLAs.

Complicated service discovery

Services are often created and destroyed in a dynamic microservices environment. Static configurations provided by old-generation proxies are ineffective at keeping track of services in such an environment. This makes it difficult for application engineers to configure communication logic between services, because they have to manually update the configuration file whenever a service is deployed or deleted. As a result, application developers spend more of their time configuring networking logic than coding business logic.

Inefficient load balancing and traffic routing

It is crucial for platform architects and cloud engineers to ensure effective traffic routing and load balancing between services. However, manually configuring routing rules and load balancing policies for each service is time-consuming and error-prone, especially when they have a fleet of them. Also, traditional load balancers with simple algorithms would result in inefficient resource utilization and suboptimal load balancing in the case of microservices. All of this leads to increased latency and to service unavailability caused by improper traffic routing.
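To make the point about simple algorithms concrete, here is a minimal Python sketch that contrasts round robin with a least-request policy. The pod names and in-flight request counts are made up for illustration, and both functions are hypothetical helpers rather than any proxy's actual implementation.

```python
from itertools import cycle

def round_robin(hosts):
    """Return an iterator that rotates through hosts, ignoring how busy each one is."""
    return cycle(hosts)

def least_request(active_requests):
    """Pick the host that currently has the fewest in-flight requests."""
    return min(active_requests, key=active_requests.get)

# Hypothetical snapshot: pod-b is stuck on slow requests.
active = {"pod-a": 2, "pod-b": 40, "pod-c": 3}

rr = round_robin(list(active))
rr_picks = [next(rr) for _ in range(6)]

lr_picks = []
for _ in range(6):
    host = least_request(active)
    active[host] += 1  # the new request is now in flight on that host
    lr_picks.append(host)

print("round robin:  ", rr_picks)
print("least request:", lr_picks)
```

Round robin keeps sending a third of the traffic to the overloaded pod, while the least-request policy steers new requests away from it until the load evens out.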

With the rise in the adoption of microservices architecture, there was a need for a fast, intelligent proxy that can handle the complex service-to-service connection across the cloud.

Introducing Envoy proxy

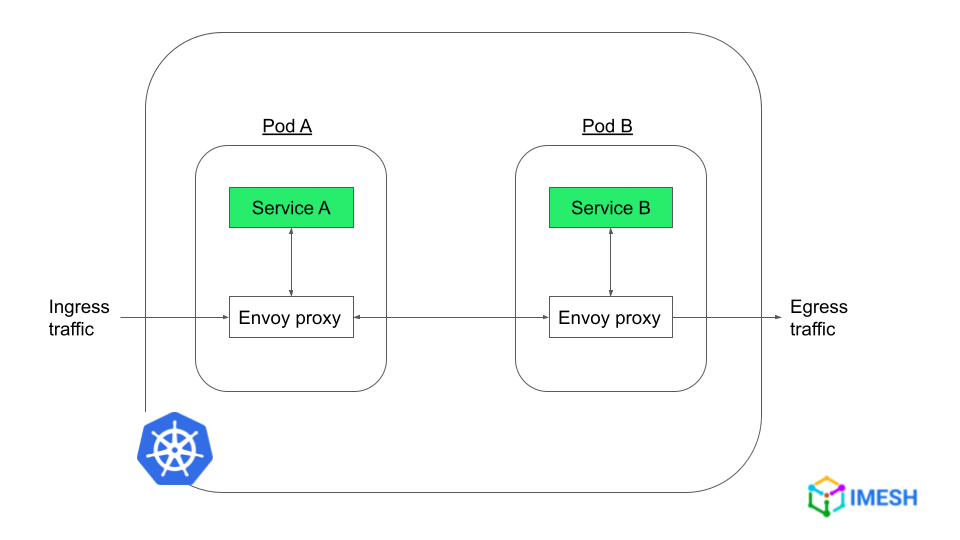

Envoy is an open-source edge and service proxy, originally developed by Lyft to facilitate their migration from a monolith to a cloud-native microservices architecture. It also serves as a communication bus for microservices (refer to fig. A below) across the cloud, enabling them to communicate with each other in a rapid, secure, and efficient manner.

Envoy proxy abstracts network and security from the application layer to an infrastructure layer. This helps application developers simplify developing cloud-native applications by saving hours spent on configuring network and security logic.

Envoy proxy provides advanced load balancing and traffic routing capabilities that are critical to run large, complex distributed applications. Also, the modular architecture of Envoy helps cloud and platform engineers to customize and extend its capabilities.

Fig A – Envoy proxy intercepting traffic between services

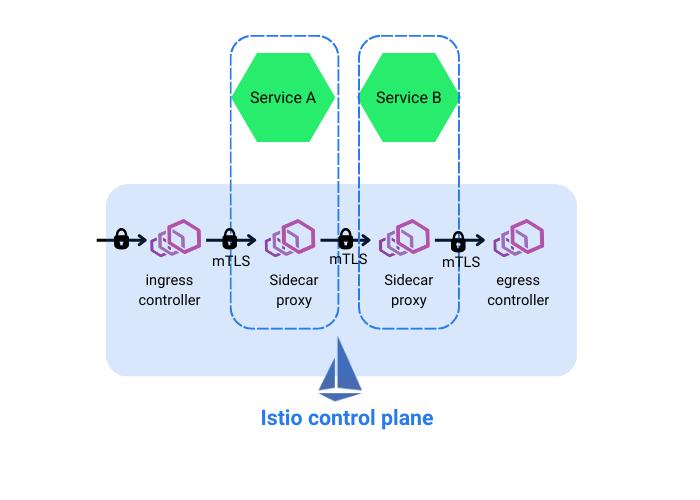

Envoy proxy architecture with Istio

Envoy proxies are deployed as sidecar containers alongside application containers. The sidecar proxy then intercepts and takes care of the service-to-service connection (refer to fig B below) and provides a variety of features. This network of proxies is called a data plane, and it is configured and monitored from a control plane provided by Istio. These two components together form the Istio service mesh architecture, which provides a powerful and flexible infrastructure layer for managing and securing microservices.

Fig B – Istio sidecar architecture with Envoy proxy data plane

Envoy proxy features

Envoy proxy offers the following features at a high level. (Visit Envoy docs for more information on the features listed below.)

- Out-of-process architecture: Envoy runs as a separate process, independent of the application process. It can be deployed as a sidecar proxy or as a gateway without requiring any changes to the application. Envoy also works with applications written in any language, such as Java or C++, which gives application developers greater flexibility.

- L3/L4 and L7 filter architecture: Envoy supports filters that customize traffic at the network layer (L3/L4) and at the application layer (L7). This allows for more control over network traffic and offers granular traffic management capabilities such as TLS client certificate authentication, buffering, rate limiting, and routing/forwarding.

- HTTP/2 and HTTP/3 support: Envoy supports HTTP/1.1, HTTP/2, and HTTP/3 (currently in alpha) protocols. This enables seamless communication between clients and target servers using different versions of HTTP.

- HTTP L7 routing: Envoy’s HTTP L7 routing subsystem can route and redirect requests based on various criteria, such as path, authority, and content type. This feature is useful for building front/edge proxies and service-to-service meshes.

- gRPC support: Envoy supports gRPC, a Google RPC framework that uses HTTP/2 or above as its underlying transport. Envoy can act as a routing and load balancing substrate for gRPC requests and responses.

- Service discovery and dynamic configuration: Envoy supports service discovery and dynamic configuration through a layered set of APIs that provide dynamic updates about backend hosts, clusters, routing, listening sockets, and cryptographic material. This allows for centralized management and simpler deployment, with options for DNS resolution or static config files.

- Health checking: The recommended way to build an Envoy mesh is to treat service discovery as an eventually consistent process. Envoy has a health checking subsystem that can perform active and passive health checks to determine healthy load balancing targets.

- Advanced load balancing: Envoy’s self-contained proxy architecture allows it to implement advanced load balancing techniques, such as automatic retries, circuit breaking, request shadowing, and outlier detection, in one place, accessible to any application.

- Front/edge proxy support: Using the same software at the edge as inside the mesh provides benefits such as consistent observability and management, and identical service discovery and load balancing algorithms. Envoy’s feature set makes it well-suited as an edge proxy for most modern web application use cases, including TLS termination, support for multiple HTTP versions, and HTTP L7 routing.

- Best-in-class observability: Envoy provides robust statistics support for all subsystems and supports distributed tracing via third-party providers, making it easier for SREs and Ops teams to monitor and debug problems occurring at both the network and application levels.
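As an illustration of the HTTP L7 routing feature listed above, the following Python sketch routes a request to an upstream cluster based on its authority (host) and path. It is a simplified model under stated assumptions: the host names, path prefixes, cluster names, and the `pick_cluster` helper are all hypothetical, and real Envoy route configuration has many more match options.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Route:
    authority: str    # expected Host / :authority value; "*" matches any host
    path_prefix: str  # the request path must start with this prefix
    cluster: str      # hypothetical name of the upstream cluster to forward to

# Hypothetical route table. Order matters because the first match wins.
ROUTES = [
    Route("api.example.com", "/v1/orders", "orders-v1"),
    Route("api.example.com", "/", "api-default"),
    Route("*", "/", "fallback"),
]

def pick_cluster(authority: str, path: str, routes=ROUTES) -> Optional[str]:
    """Return the cluster of the first route matching both authority and path."""
    for route in routes:
        host_ok = route.authority in ("*", authority)
        if host_ok and path.startswith(route.path_prefix):
            return route.cluster
    return None  # no route matched; a real proxy would answer with a 404

print(pick_cluster("api.example.com", "/v1/orders/42"))
print(pick_cluster("api.example.com", "/health"))
print(pick_cluster("other.example.com", "/anything"))
```

Placing the most specific routes first keeps the catch-all entries from shadowing them.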

Given its powerful set of features, Envoy proxy has become a popular choice for organizations to manage and secure microservices. In practice, it has two main use cases.

Use cases of Envoy proxy

Envoy proxy can be used as both a sidecar service proxy and a gateway.

Envoy sidecar proxy

As we have seen in the Istio architecture, Envoy proxy constitutes the data plane and manages the traffic flow between services deployed in the mesh. The sidecar proxy provides features such as service discovery, load balancing, and traffic routing, and offers visibility and security to the network of microservices.

Envoy as an API gateway

Envoy proxy can be deployed as an API gateway and as an ingress (see the Envoy Gateway project). Envoy Gateway is deployed at the edge of the cluster to manage external traffic flowing into the cluster and between multicloud applications (north-south traffic). It helps application developers who previously toiled to configure Envoy proxy (Istio-native) as an API gateway and ingress controller, and it is an alternative to purchasing a third-party solution like NGINX. With Envoy Gateway, they have a central location to configure and manage ingress and egress traffic, and to apply security policies such as authentication and access control.

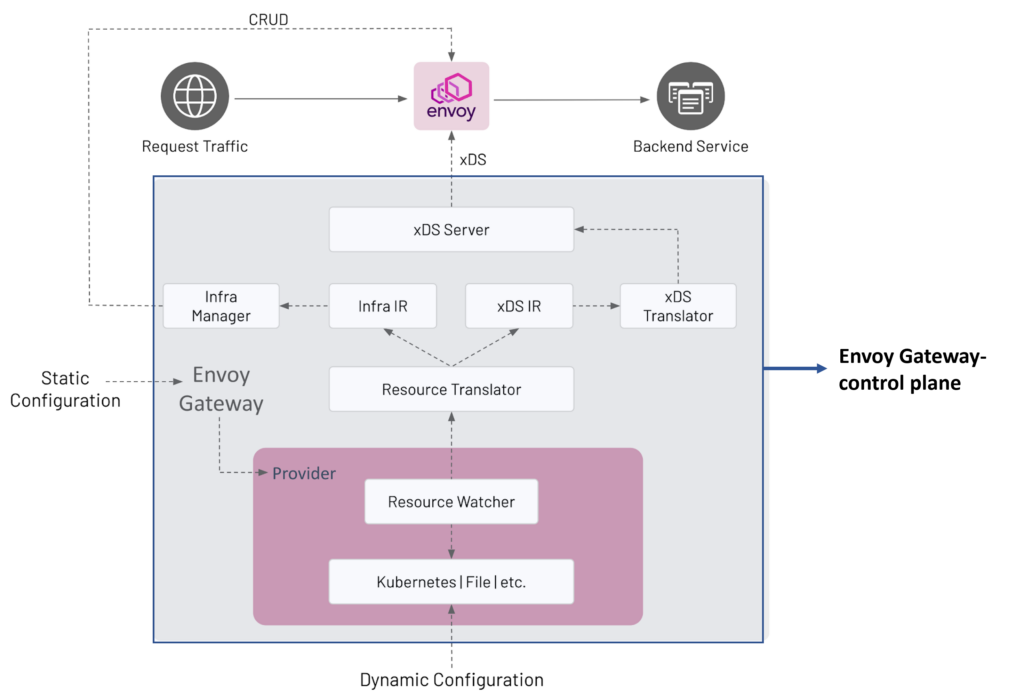

Below is a diagram of Envoy Gateway architecture and its components.

Envoy gateway architecture (source: gateway.envoyproxy.io)

To read more about Envoy API gateway architecture and features, and to learn how to get started with it, follow this link: What is Envoy Gateway, and why is it required for Kubernetes? – IMESH

Benefits of Envoy proxy

Envoy’s ability to abstract network and security layers offers several benefits for IT teams such as developers, SREs, cloud engineers, and platform teams. The following are a few of them.

Effective network abstraction

The out-of-process architecture of Envoy helps it to abstract the network layer from the application to its own infrastructure layer. This allows for faster deployment for application developers, while also providing a central plane to manage communication between services.

Fine-grained traffic management

With its support for the network (L3/L4) and application (L7) layers, Envoy provides flexible and granular traffic routing, such as traffic splitting, retry policies, and load balancing.
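Traffic splitting, for example, can be pictured as mapping each request to a cluster in proportion to configured weights. The Python sketch below shows the idea with a 90/10 split; the cluster names, the weights, and the `split_traffic` and `route_request` helpers are assumptions made for illustration, not Envoy's implementation.

```python
import random

def split_traffic(weights, roll):
    """Map a roll in [0, total weight) to a cluster using cumulative weights."""
    upper = 0
    for cluster, weight in weights.items():
        upper += weight
        if roll < upper:
            return cluster
    raise ValueError("roll is outside the total weight")

# Hypothetical canary rollout: 90% of requests to v1, 10% to v2.
WEIGHTS = {"reviews-v1": 90, "reviews-v2": 10}

def route_request(weights=WEIGHTS, rng=random):
    """Pick a cluster for one request by drawing a random roll."""
    return split_traffic(weights, rng.randrange(sum(weights.values())))

counts = {name: 0 for name in WEIGHTS}
for _ in range(1000):
    counts[route_request()] += 1
print(counts)  # roughly a 90/10 split; exact numbers vary per run
```

Keeping the random draw separate from the mapping makes the split easy to test with fixed rolls.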

Ensure zero trust security at L4/L7 layers

Envoy proxy helps implement authentication among services inside a cluster with strong identity verification mechanisms such as mTLS and JWT. You can also enforce authorization at the L7 layer with Envoy proxy to ensure zero trust. (You can implement AuthN/Z policies with Istio service mesh, the control plane for Envoy.)
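The following Python sketch illustrates the deny-by-default idea behind zero trust at L7: once the caller's identity has been verified (for instance from its mTLS client certificate), a request is allowed only if an explicit rule matches the caller, the HTTP method, and the path. The identities, paths, and the `is_allowed` helper are hypothetical and only model the concept; in practice such rules are declared as policies in the control plane rather than written as application code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AllowRule:
    principal: str      # verified caller identity (hypothetical format)
    methods: frozenset  # HTTP methods this caller may use
    path_prefix: str    # paths this caller may reach

# Hypothetical allow list for a shop application.
RULES = [
    AllowRule("cluster.local/ns/shop/sa/frontend", frozenset({"GET"}), "/catalog"),
    AllowRule("cluster.local/ns/shop/sa/checkout", frozenset({"GET", "POST"}), "/payments"),
]

def is_allowed(principal, method, path, rules=RULES):
    """Deny by default; allow only when one rule matches all three fields."""
    return any(
        rule.principal == principal
        and method in rule.methods
        and path.startswith(rule.path_prefix)
        for rule in rules
    )

print(is_allowed("cluster.local/ns/shop/sa/frontend", "GET", "/catalog/items"))
print(is_allowed("cluster.local/ns/shop/sa/frontend", "POST", "/payments/charge"))
```

A compromised frontend pod in this model can read the catalog but cannot call the payments service, which limits lateral movement.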

Control east-west and north-south traffic for multicloud apps

Enterprises deploy their applications into multiple clouds, so it is important to understand and control the traffic flowing in and out of the data centers. Because Envoy proxy can be used both as a sidecar and as an API gateway, it can help manage east-west traffic and north-south traffic, respectively.

Monitor traffic and ensure optimum platform performance

Envoy aims to make the network understandable by emitting statistics, which are divided into three categories: downstream statistics for incoming requests, upstream statistics for outgoing requests, and server statistics describing the Envoy server instance. Envoy also emits logs and metrics that give insight into traffic flow between services, which helps SREs and Ops teams quickly detect and resolve performance issues.
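To show how the three categories can be told apart in practice, here is a small Python sketch that groups a handful of statistics in a `name: value` text form. The sample values are invented, and the name-based `categorize` rule is a heuristic assumed for this example rather than an official classification.

```python
from collections import defaultdict

# Invented sample in "name: value" form; the numbers are not real measurements.
SAMPLE_STATS = """\
http.ingress_http.downstream_rq_total: 120
http.ingress_http.downstream_cx_active: 4
cluster.some_service.upstream_rq_total: 118
cluster.some_service.upstream_cx_active: 2
server.uptime: 3600
"""

def categorize(name: str) -> str:
    """Heuristic: classify a statistic by its name."""
    if name.startswith("server."):
        return "server"
    if ".upstream_" in name:
        return "upstream"
    if ".downstream_" in name:
        return "downstream"
    return "other"

def group_stats(text: str) -> dict:
    """Parse integer "name: value" lines and group them by category."""
    groups = defaultdict(dict)
    for line in text.splitlines():
        name, sep, value = line.rpartition(":")
        if not sep:
            continue  # skip lines that are not "name: value"
        groups[categorize(name.strip())][name.strip()] = int(value)
    return dict(groups)

for category, stats in group_stats(SAMPLE_STATS).items():
    print(category, stats)
```

Comparing the downstream and upstream request totals side by side is one quick way to spot requests that entered the proxy but never reached a backend.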

Get started with Envoy Proxy

Below are some resources to help you get started with Envoy Proxy.

How to set up Envoy Proxy in Linux

The following video gives a high-level overview of Envoy architecture and components such as listeners, network filter chains, routers, and clusters. It is followed by a demo of installing Envoy on Ubuntu. You will also see a sample Flask application and how the Envoy configuration is written to define all the components.
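For readers who want a rough picture before watching, the sketch below assembles a minimal static Envoy configuration with one listener, one HTTP filter chain with a router, and one cluster. It is not the configuration used in the video. Envoy configuration is usually written in YAML; here the same structure is built as a Python dictionary and printed as JSON. The port numbers, the names `listener_0` and `flask_app`, and the assumption that the Flask application listens on 127.0.0.1:5000 are all illustrative.

```python
import json

APP_CLUSTER = "flask_app"  # hypothetical cluster name for the sample app

HCM_TYPE = ("type.googleapis.com/envoy.extensions.filters.network."
            "http_connection_manager.v3.HttpConnectionManager")
ROUTER_TYPE = "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"

config = {
    "static_resources": {
        # Listener: where Envoy accepts downstream connections (assumed port 10000).
        "listeners": [{
            "name": "listener_0",
            "address": {"socket_address": {"address": "0.0.0.0", "port_value": 10000}},
            "filter_chains": [{
                "filters": [{
                    "name": "envoy.filters.network.http_connection_manager",
                    "typed_config": {
                        "@type": HCM_TYPE,
                        "stat_prefix": "ingress_http",
                        # Route every request to the single application cluster.
                        "route_config": {
                            "name": "local_route",
                            "virtual_hosts": [{
                                "name": "app",
                                "domains": ["*"],
                                "routes": [{
                                    "match": {"prefix": "/"},
                                    "route": {"cluster": APP_CLUSTER},
                                }],
                            }],
                        },
                        "http_filters": [{
                            "name": "envoy.filters.http.router",
                            "typed_config": {"@type": ROUTER_TYPE},
                        }],
                    },
                }],
            }],
        }],
        # Cluster: the upstream hosts Envoy forwards to (assumed Flask on port 5000).
        "clusters": [{
            "name": APP_CLUSTER,
            "connect_timeout": "0.25s",
            "type": "STATIC",
            "lb_policy": "ROUND_ROBIN",
            "load_assignment": {
                "cluster_name": APP_CLUSTER,
                "endpoints": [{"lb_endpoints": [{"endpoint": {"address": {
                    "socket_address": {"address": "127.0.0.1", "port_value": 5000},
                }}}]}],
            },
        }],
    }
}

print(json.dumps(config, indent=2))
```

The route's cluster name has to match a defined cluster, which is why the sketch reuses one `APP_CLUSTER` constant in both places.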

Deploying Envoy in K8s and Configuring as Load Balancer

This video discusses different deployment types and their use cases, and it shows a demo of Envoy deployment into Kubernetes and how to set it as a load balancer (edge proxy).

About IMESH

IMESH offers solutions to help organizations adopt Istio service mesh without any implementation or operational hassle. IMESH provides a platform built on top of Istio and Envoy API gateway to help start with Istio from Day 1. The platform is hardened for production and is fit for multicloud and hybrid cloud applications.

IMESH also provides consulting services and expertise to help you adopt Istio rapidly in your organization. We make it easier to deploy Istio into production and ensure there are no unintended container crashes or application misbehavior. IMESH also offers a strong visibility layer on top of Istio, which provides Ops and SREs with a multicluster view of services, dependencies, and network traffic. If you are interested, please talk to an Istio expert or book an Istio demo.