We discussed the 3 R's strategy for migrating from Ingress to Gateway API in an earlier post. This blog walks you through a demo of that strategy: it shows how to implement the Kubernetes Gateway API in your cluster and gradually shift traffic to it from Ingress.

I will use Gateway and HTTPRoute resources of the Gateway API. As a quick recap:

- Gateway defines the entry point that gets traffic into the cluster.

- HTTPRoute CRD applies routing rules, matches, filters, etc., to incoming traffic.

You will also see header injection and path-based matching with Gateway API.

Steps to implement Kubernetes Gateway API

For the demo, I will follow an overview step and a 3-step process to implement Gateway API:

- Step #0: Demo overview and prerequisites

- Step #1: Deploy the banking application and Ingress

- Step #2: Deploy Gateway and HTTPRoute CRDs of Gateway API

- Step #3: Weighted traffic distribution between Ingress and Gateway API

Step #0: Demo overview and prerequisites

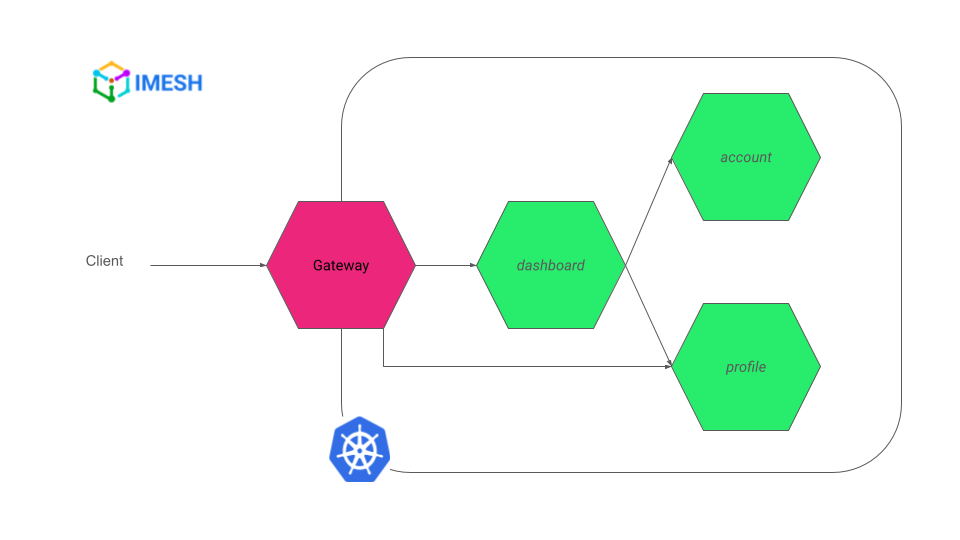

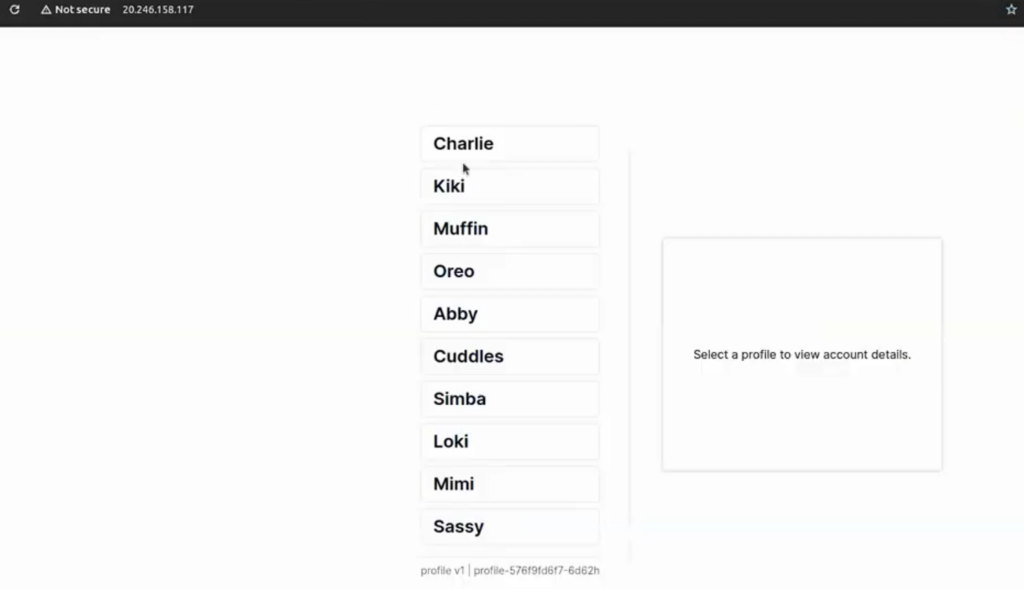

I will start by deploying the banking application, which has dashboard, account, and profile services (see Fig.A).

Fig.A – Banking application with dashboard, account, and profile services

I will then expose the dashboard and profile services using Ingress. Later, you will see how to use K8s Gateway API CRDs (Gateway and HTTPRoute) to route traffic to the same services and then gradually shift the traffic from Ingress to Gateway API.

The prerequisites for the demo are the Nginx Ingress controller and Istio Ingress configured in the cluster, along with the Gateway API CRDs, which most Kubernetes clusters do not ship by default. Check out the blog, How to get started with Istio in Kubernetes in 5 steps, to get started with Istio.

I will use the Nginx controller for Ingress, and Istio Ingress will implement the Gateway API CRDs. I will also add the name of the respective controller to the response headers, so you can tell which controller served each request. This will be helpful in step #3.

All the YAMLs used in the demo are in the IMESH GitHub repo. You can also watch the demo in action in the video below:

Step #1: Deploy the banking application and Ingress

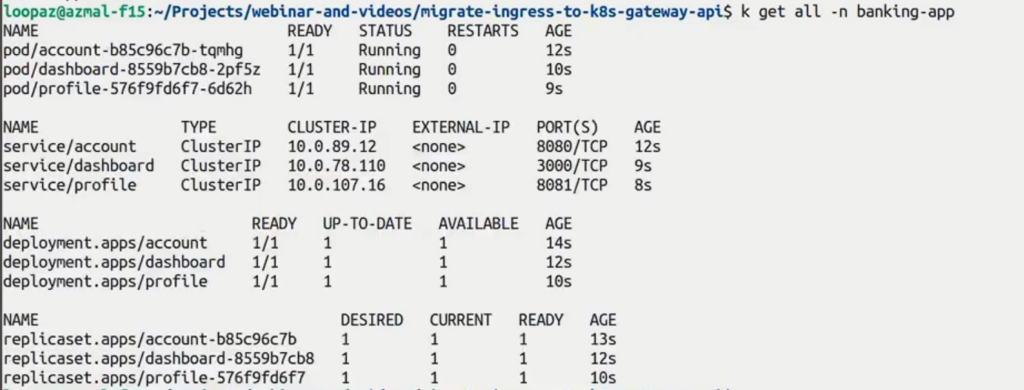

Create a banking-app namespace and deploy the banking application. Use the following command to see if the services are up and running:

kubectl get all -n banking-app

First, I will expose the profile service using the following Ingress configuration:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: banking-app-profile-ingress
  namespace: banking-app
  annotations:
    # Azure-specific load-balancer settings
    service.beta.kubernetes.io/port_80_no_probe_rule: "true"
    service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path: /
    # Adds a "controller: NGINX" response header; snippet annotations
    # must be allowed in the ingress-nginx controller configuration
    nginx.ingress.kubernetes.io/server-snippet: |
      more_set_headers "controller: NGINX";
spec:
  ingressClassName: "nginx"
  rules:
  - http:
      paths:
      - path: /users
        pathType: Prefix
        backend:
          service:
            name: profile
            port:
              number: 8081
```

You can see that Nginx is set as the Ingress controller through ingressClassName: "nginx". The path field defines that any request to the /users path is forwarded to the profile service on port 8081.
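Note that pathType: Prefix matches whole path elements rather than raw characters. The following is a minimal Python sketch of that rule as described in the Kubernetes Ingress documentation, not code taken from ingress-nginx; the function names are hypothetical, and the sketch assumes the query string has already been stripped from the request path.

```python
def path_elements(path: str) -> list:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [segment for segment in path.split("/") if segment]


def prefix_matches(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match in the spirit of Ingress pathType: Prefix.

    Trailing slashes are ignored, so "/users" and "/users/" behave the same.
    """
    rule = path_elements(rule_path)
    request = path_elements(request_path)
    # Every element of the rule must equal the element at the same position
    # in the request path; a raw string prefix is not enough.
    return request[: len(rule)] == rule


if __name__ == "__main__":
    for path in ["/users", "/users/42", "/usersettings", "/accounts"]:
        print(path, prefix_matches("/users", path))
```

With the /users rule, /users and /users/42 reach the profile service, while /usersettings does not, even though it starts with the same characters.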

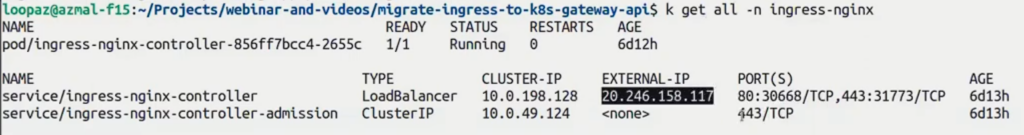

Once you apply the Ingress configuration, you can verify it by reaching the service with curl. Use the external IP of the Nginx controller's Service, which you can find with the following command:

kubectl get all -n your_ingress_nginx_namespace

Curl the profile service:

curl -v your_controller_ip/users

The verbose output lists the response headers, including the controller: NGINX header added by the annotation.

Similarly, you can apply the below Ingress configuration to expose the dashboard service:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: banking-app-dashboard-ingress
  namespace: banking-app
  annotations:
    service.beta.kubernetes.io/port_80_no_probe_rule: "true"
    service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path: /
    nginx.ingress.kubernetes.io/server-snippet: |
      more_set_headers "controller: NGINX";
spec:
  ingressClassName: "nginx"
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: dashboard
            port:
              number: 3000
```

You can verify it by entering the controller IP in a browser, which opens the dashboard.

So, we have successfully deployed the banking application, and exposed profile and dashboard services using Nginx Ingress. Let us now deploy and use Gateway API CRDs to expose the same services.

Step #2: Deploy Gateway and HTTPRoute CRDs of Gateway API

Use the following Gateway configuration and deploy it:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: banking-gateway
  namespace: banking-app
  annotations:
    service.beta.kubernetes.io/port_80_no_probe_rule: "true" # FOR AZURE
spec:
  gatewayClassName: istio
  listeners:
  - name: default
    port: 80
    protocol: HTTP
    allowedRoutes:
      namespaces:
        from: All
```

I'm specifying Istio Ingress as the controller here (gatewayClassName: istio). The configuration opens an HTTP listener on port 80, and routes from any namespace can attach to it (allowedRoutes.namespaces.from: All).
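from: All is the most permissive setting. The Gateway API also defines Same (the default, which only admits routes from the Gateway's own namespace) and Selector (which admits namespaces matching a label selector). Below is a minimal Python sketch of that decision, assuming a simplified selector that supports only matchLabels and ignoring the listener's allowed route kinds; route_may_attach is a hypothetical helper, not part of any Gateway API client library.

```python
from typing import Mapping, Optional


def route_may_attach(
    from_value: str,
    gateway_namespace: str,
    route_namespace: str,
    namespace_labels: Optional[Mapping[str, str]] = None,
    match_labels: Optional[Mapping[str, str]] = None,
) -> bool:
    """Decide whether a route in route_namespace may attach to a listener.

    from_value mirrors allowedRoutes.namespaces.from ("All", "Same", "Selector").
    For "Selector", only matchLabels is modelled: every listed label must be
    present on the route's namespace with the same value.
    """
    if from_value == "All":
        return True
    if from_value == "Same":
        return route_namespace == gateway_namespace
    if from_value == "Selector":
        labels = namespace_labels or {}
        wanted = match_labels or {}
        return all(labels.get(key) == value for key, value in wanted.items())
    raise ValueError(f"unsupported allowedRoutes.namespaces.from value: {from_value!r}")
```

In this demo the HTTPRoutes live in banking-app, the Gateway's own namespace, so Same would also work; All matters only if teams in other namespaces attach their own routes.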

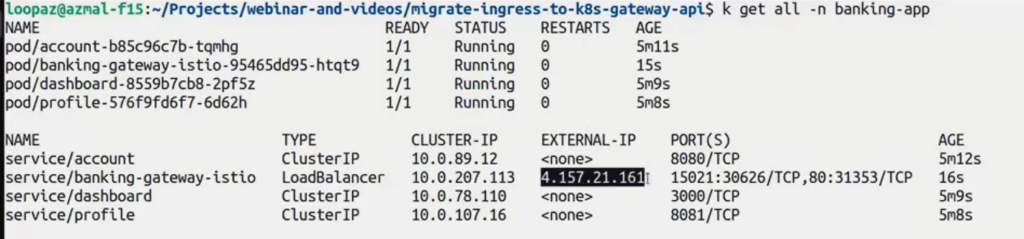

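Note that in step #1 we used the IP of the Ingress controller (nginx-ingress) to access the services exposed through Ingress. With the Gateway API, the Gateway gets its own entry point: Istio automatically provisions a dedicated deployment and a LoadBalancer Service for it (named banking-gateway-istio here), which receives its own external IP.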

You can use the following command to get the IP:

kubectl get all -n banking-app
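If you prefer to read the address from the Gateway object instead of scanning kubectl get all, the Gateway's status reports it under status.addresses once the Programmed condition is True. The sketch below is a hedged illustration: it shells out to kubectl with the fully qualified resource name gateways.gateway.networking.k8s.io (so the lookup does not collide with Istio's own Gateway CRD), and both helper names are hypothetical.

```python
import json
import subprocess
from typing import Optional


def gateway_address(gateway: dict) -> Optional[str]:
    """Return the Gateway's first address once it reports Programmed=True."""
    status = gateway.get("status") or {}
    programmed = any(
        condition.get("type") == "Programmed" and condition.get("status") == "True"
        for condition in status.get("conditions", [])
    )
    addresses = status.get("addresses") or []
    if not programmed or not addresses:
        return None
    return addresses[0].get("value")


def fetch_gateway(name: str, namespace: str) -> dict:
    """Read a Gateway API Gateway as JSON via kubectl (needs cluster access)."""
    output = subprocess.run(
        [
            "kubectl", "get", "gateways.gateway.networking.k8s.io", name,
            "-n", namespace, "-o", "json",
        ],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return json.loads(output)


# Usage (needs kubectl pointed at the demo cluster):
# print(gateway_address(fetch_gateway("banking-gateway", "banking-app")))
```

Returning None until the Gateway is programmed avoids handing HAProxy an address that is not serving traffic yet.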

The Gateway resource only opens a listener and does not know where to route the traffic it receives. This is where you can use HTTPRoute resources and define routing logic for the traffic hitting the Gateway.

Use the following HTTPRoute configuration to route traffic to the profile service:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: profile-route
  namespace: banking-app
spec:
  parentRefs:
  - name: banking-gateway
  rules:
  - matches:
    - path:
        type: PathPrefix
        value: /users
    filters:
    # Portable header injection: adds "Controller: ISTIO" to responses
    - type: ResponseHeaderModifier
      responseHeaderModifier:
        add:
        - name: Controller
          value: ISTIO
    backendRefs:
    - name: profile
      port: 8081
```

- The parentRefs field specifies the Gateway to which the HTTPRoute is attached. (An HTTPRoute can be attached to multiple Gateways.)

- The routing logic is the same as the Ingress rule in step #1: any request to the /users path is routed to the profile service. The difference is that Istio Ingress, not Nginx, acts as the controller.

(Notice that in the Ingress configurations in step #1, I used an annotation to add the controller name to the response headers. Since the annotation is Nginx-specific, it is not portable and cannot be used with other controllers. In the Gateway API, however, the filters field, where I have specified the controller name to be added as a header, is part of the HTTPRoute spec itself. It is portable and can be used across a variety of Kubernetes Gateway API implementations, although ResponseHeaderModifier is an Extended feature, so check that your implementation supports it.)
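The add operation used here appends a header value without touching existing ones, whereas the filter's set operation overwrites and remove deletes. The Python sketch below models those three operations on a list of (name, value) pairs so the difference is easy to see. It illustrates the semantics described in the Gateway API spec, not Istio's implementation, and the order in which it applies set, add, and remove is this sketch's own choice; set_ carries a trailing underscore only to avoid shadowing Python's built-in set.

```python
from typing import Iterable, List, Tuple

Header = Tuple[str, str]


def apply_header_modifier(
    headers: List[Header],
    set_: Iterable[Header] = (),
    add: Iterable[Header] = (),
    remove: Iterable[str] = (),
) -> List[Header]:
    """Apply Gateway API-style set/add/remove to (name, value) header pairs.

    Names are compared case-insensitively, as HTTP header names are.
    """
    result = list(headers)
    for name, value in set_:
        # set: drop every existing value for the name, then write the new one
        result = [(n, v) for n, v in result if n.lower() != name.lower()]
        result.append((name, value))
    for name, value in add:
        # add: append alongside any existing values for the same name
        result.append((name, value))
    dropped = {name.lower() for name in remove}
    # remove: delete every value for the listed names
    return [(n, v) for n, v in result if n.lower() not in dropped]
```

With add, a backend that already sent its own controller header would end up with two values; switching the filter to set would leave only ISTIO.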

As with the profile route, apply the following HTTPRoute to expose the dashboard service:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: dashboard-route
  namespace: banking-app
spec:
  parentRefs:
  - name: banking-gateway
  rules:
  # No matches are listed, so this rule defaults to a PathPrefix match on /
  - filters:
    - type: ResponseHeaderModifier
      responseHeaderModifier:
        add:
        - name: Controller
          value: ISTIO
    backendRefs:
    - name: dashboard
      port: 3000
```

You can use the Gateway resource's external IP to verify the Gateway API setup:

- Use curl -v your_gateway_ip/users to check the profile service; the response headers should now include Controller: ISTIO.

- Enter the IP in a browser to access the banking dashboard.
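Because dashboard-route declares no matches, the Gateway API treats it as a PathPrefix match on /, so a request to /users matches both routes. The specification resolves this by preferring the prefix match with the most characters, which is why profile-route wins. Here is a minimal Python sketch of that precedence rule for the two routes in this demo; it ignores the spec's further tie-breakers (method, header, and query matches, then route age and name), and pick_backend is a hypothetical name.

```python
from typing import List, Optional, Tuple

# (route name, PathPrefix value, backend) for the two HTTPRoutes in this demo.
# dashboard-route declares no matches, so it falls back to PathPrefix "/".
ROUTES: List[Tuple[str, str, str]] = [
    ("dashboard-route", "/", "dashboard:3000"),
    ("profile-route", "/users", "profile:8081"),
]


def _elements(path: str) -> List[str]:
    """Non-empty '/'-separated elements of a path."""
    return [segment for segment in path.split("/") if segment]


def pick_backend(
    request_path: str, routes: List[Tuple[str, str, str]] = ROUTES
) -> Optional[str]:
    """Among matching PathPrefix rules, prefer the one with the most characters."""
    request = _elements(request_path)
    matching = [
        (prefix, backend)
        for _, prefix, backend in routes
        if request[: len(_elements(prefix))] == _elements(prefix)
    ]
    if not matching:
        return None
    # Longest prefix wins; ties would fall through to the spec's other rules.
    return max(matching, key=lambda item: len(item[0]))[1]


if __name__ == "__main__":
    for path in ["/", "/users", "/users/42", "/usersettings"]:
        print(path, "->", pick_backend(path))
```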

So far, we have implemented both Ingress and Kubernetes Gateway API CRDs, and they co-exist in the cluster. Now, let us load balance between them and gradually shift the traffic from Ingress to Gateway API.

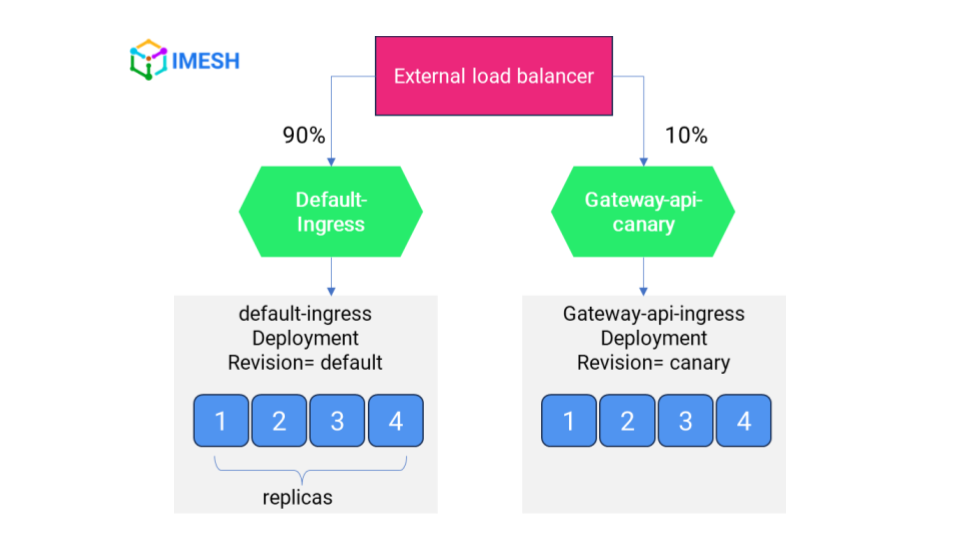

Step #3: Weighted traffic distribution between Ingress and Gateway API

To ensure a safe migration from Ingress to K8s Gateway API, start by shifting 10% of the traffic to Gateway API while Ingress serves the remaining 90% (see Fig.B). This lets you check for errors and confirm that Gateway API handles the load before you shift more traffic to it.

Fig.B – Weighted traffic distribution between Ingress (90) and Kubernetes Gateway API (10)

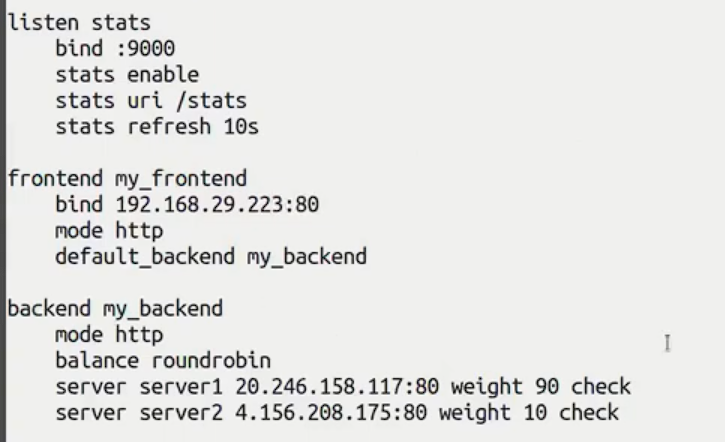

You can use any load balancer that supports weighted traffic distribution. For this demo, I'm running the HAProxy load balancer locally; you can install it using any package manager.
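A weighted load balancer spreads requests across servers in proportion to their weights. To make that concrete, here is a Python sketch of smooth weighted round-robin, the algorithm nginx uses for weighted upstreams. It is a stand-in for illustration only: HAProxy's roundrobin scheduler is implemented differently, though it also distributes requests according to server weights. The server names ingress and gateway are hypothetical labels.

```python
from collections import Counter
from typing import Dict, List


def smooth_weighted_round_robin(weights: Dict[str, int], requests: int) -> List[str]:
    """Return the server picked for each request (nginx-style smooth WRR)."""
    names = list(weights)
    total = sum(weights.values())
    current = {name: 0 for name in names}
    picks = []
    for _ in range(requests):
        # Every server earns its weight before each pick
        for name in names:
            current[name] += weights[name]
        # max() returns the first name on ties, like a strict '>' scan
        chosen = max(names, key=lambda name: current[name])
        # The winner pays back the total, so heavy servers win more often
        current[chosen] -= total
        picks.append(chosen)
    return picks


if __name__ == "__main__":
    picks = smooth_weighted_round_robin({"ingress": 90, "gateway": 10}, 10)
    print(Counter(picks))  # Counter({'ingress': 9, 'gateway': 1})
```

The algorithm only depends on weight ratios, so 90 and 10 behave exactly like 9 and 1: every 10 consecutive picks contain 9 for ingress and 1 for gateway. A real HAProxy instance may interleave differently within a short window, so small samples can deviate slightly from 9:1.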

First, open the HAProxy configuration file to set up the frontend and backend:

sudo vim /etc/haproxy/haproxy.cfg

- I have exposed a frontend on port 80, bound to my WLAN adapter's IP. Opening that IP in a browser shows the banking dashboard.

- I have also defined a backend with two servers and their traffic weights: server 1 represents Ingress and serves 90% of requests; server 2 represents the Gateway API CRDs and serves 10% of the total traffic.

- Ensure that server 1 and server 2 point to the IPs of the Ingress controller and the Gateway, respectively.
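The exact haproxy.cfg is not reproduced in the text, so the following Python sketch renders a configuration of the same shape: one frontend bound to port 80 and one backend whose two servers carry the Ingress and Gateway API weights. The section names (banking_frontend, banking_backend), the server names, the IPs, and the helper itself are hypothetical; bind, mode, default_backend, balance roundrobin, and server ... weight are standard HAProxy directives.

```python
def render_haproxy_config(
    frontend_ip: str, ingress_ip: str, gateway_ip: str, gateway_percent: int
) -> str:
    """Render an HAProxy frontend/backend pair that splits traffic by weight.

    gateway_percent is the share (0-100) sent to the Gateway API; Ingress
    receives the remainder. A weight of 0 takes a server out of rotation.
    """
    if not 0 <= gateway_percent <= 100:
        raise ValueError("gateway_percent must be between 0 and 100")
    ingress_percent = 100 - gateway_percent
    lines = [
        "frontend banking_frontend",
        f"    bind {frontend_ip}:80",
        "    mode http",
        "    default_backend banking_backend",
        "",
        "backend banking_backend",
        "    mode http",
        "    balance roundrobin",
        f"    server server1 {ingress_ip}:80 weight {ingress_percent}",
        f"    server server2 {gateway_ip}:80 weight {gateway_percent}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical ramp with documentation-range IPs: raise the Gateway API
    # share step by step, reloading HAProxy after each change.
    for share in (10, 25, 50, 100):
        print(render_haproxy_config("192.0.2.10", "198.51.100.1", "203.0.113.1", share))
```

Generating the weights from one percentage keeps the two servers summing to 100, which makes each step of the gradual shift explicit; the rendered sections would replace the frontend and backend sections of the existing file, leaving its global and defaults sections in place.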

After you save the configuration changes, reload HAProxy:

sudo systemctl reload haproxy

Now the load balancer with weighted traffic distribution is in place. You can see the banking dashboard by entering the frontend IP in a browser.

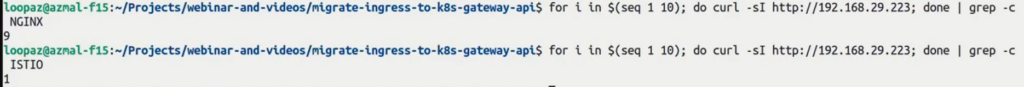

To verify the 90-10 traffic distribution between the Ingress controller and Gateway API, send 10 requests to the front end and watch for the header value using the following commands:

for i in $(seq 1 10) ; do curl -sI http://your-lb-ip; done | grep -c NGINX

for i in $(seq 1 10) ; do curl -sI http://your-lb-ip; done | grep -c ISTIO

You should see that the Nginx controller handles 9 of the requests and Istio Ingress only 1. You can then gradually increase the weight for Istio Ingress until the Gateway API handles all of the traffic.
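The two curl loops send 20 requests in total and count each controller in a separate batch. A Python alternative, sketched below under the assumption that the HAProxy frontend is reachable at a placeholder URL, sends a single batch of HEAD requests and tallies the controller header of each response, so both counts come from the same sample. The helper names are hypothetical.

```python
import urllib.request
from collections import Counter
from typing import Iterable, Optional, Tuple


def controller_of(headers: Iterable[Tuple[str, str]]) -> Optional[str]:
    """Return the controller header's value; header names are case-insensitive."""
    for name, value in headers:
        if name.lower() == "controller":
            return value
    return None


def tally_controllers(url: str, requests: int = 10) -> Counter:
    """Send HEAD requests to url and count which controller answered each one.

    urlopen raises HTTPError for 4xx/5xx responses, so failures surface loudly.
    """
    counts: Counter = Counter()
    for _ in range(requests):
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=5) as response:
            counts[controller_of(response.getheaders()) or "unknown"] += 1
    return counts


# Usage (needs the HAProxy frontend to be reachable; the URL is a placeholder):
# print(tally_controllers("http://your-lb-ip/", 10))
```

Matching the header name case-insensitively matters here, because the Nginx annotation sets controller while the HTTPRoute filter sets Controller.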

Kubernetes Gateway API support and resources

DevOps teams and architects can benefit greatly from shifting from Ingress to the Kubernetes Gateway API, with gains in multitenancy, a portable specification, advanced traffic management, and extensibility. You can explore these benefits in the blog, Kubernetes Gateway API vs Kubernetes Ingress.

At IMESH, we provide enterprise solutions for DevOps organizations migrating and adopting K8s Gateway API. We have videos and materials to help you understand and start with Gateway API:

- Getting Started With Kubernetes Gateway API Using Istio | Demo | IMESH

- Kubernetes Gateway API vs Ingress | IMESH | Demo

- Migrating from Ingress to Kubernetes Gateway API | The 3 R’s Strategy

Contact us if you need support with Gateway API adoption in your enterprise.