As Kubernetes continues to dominate enterprise infrastructure, networking performance becomes a critical concern, especially when using service meshes like Istio or enforcing zero-trust policies with mTLS. Many DevOps and platform engineering teams are considering Cilium CNI. In an earlier blog, we compared Cilium and Istio for network management. In this blog, we compare two CNIs, Cilium and AWS VPC CNI (an apples-to-apples comparison this time), for defining security policies in your network.

In our latest IMESH experiment (conducted on 28th June 2025), we tested Cilium CNI against AWS VPC CNI in multiple realistic scenarios to measure latency trade-offs.

This blog delves into what we discovered and its implications for DevOps teams evaluating their CNI strategy.

Cilium vs AWS CNI: What We Wanted to Find Out

While Cilium is gaining adoption due to its eBPF-based architecture, programmable security policies, and advanced observability, AWS VPC CNI remains the go-to for simplicity, compatibility, and predictable performance in Amazon EKS.

Our goal was to understand:

- How Cilium performs vs AWS CNI across different deployment setups

- What the latency looks like with and without Istio and mTLS

- When it’s worth switching to Cilium, and when it’s not

Test Setup & Methodology

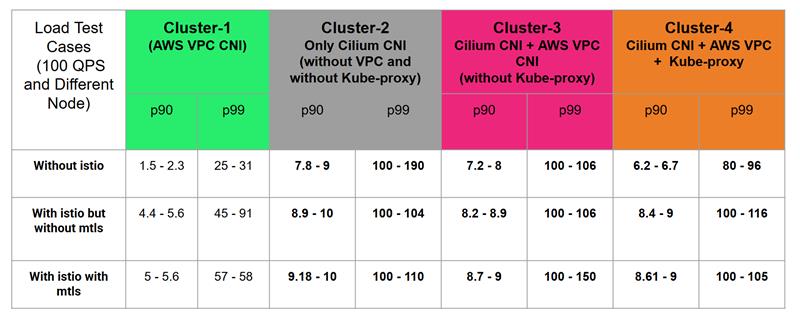

We used four clusters on AWS, each running a different network configuration:

- Cluster 1: AWS VPC CNI only

- Cluster 2: Cilium CNI only (no kube-proxy, no AWS VPC CNI)

- Cluster 3: Cilium CNI + AWS CNI (no kube-proxy)

- Cluster 4: Cilium CNI + AWS CNI + kube-proxy

Workload & Tools:

- Service: httpbin with one replica

- Load Generator: Fortio (v1.69.5), 100 QPS, 10 connections, for 10 seconds

- Node Specs: c7g.large (2 vCPU, 4 GiB RAM)

- Istio version: 1.26.2

- Cilium version: 1.17.5

Each configuration was tested under three traffic patterns:

- Without Istio

- With Istio (no mTLS)

- With Istio + mTLS
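For readers who want to reproduce the runs, the sketch below enumerates the 12 combinations (4 clusters × 3 traffic patterns) and builds a `fortio load` command with the parameters listed above. It is a minimal sketch, not our actual harness: the cluster and pattern labels, the target URL `http://httpbin.default:8000/get`, and the helper names are hypothetical, and the code only assembles the arguments (`-qps`, `-c`, `-t`, `-p`) rather than executing them.

```python
from itertools import product

# Load parameters from the test setup.
QPS = 100          # total target QPS across all connections
CONNECTIONS = 10
DURATION_S = 10

# Hypothetical labels for the four clusters and three traffic patterns.
CLUSTERS = [
    "aws-vpc-cni",
    "cilium-only",
    "cilium+aws-cni",
    "cilium+aws-cni+kube-proxy",
]
TRAFFIC_PATTERNS = ["no-istio", "istio-no-mtls", "istio-mtls"]

# Hypothetical in-cluster URL for the httpbin service.
TARGET_URL = "http://httpbin.default:8000/get"


def fortio_command(url: str = TARGET_URL) -> list[str]:
    """Build a fortio load invocation that reports p90 and p99."""
    return [
        "fortio", "load",
        "-qps", str(QPS),
        "-c", str(CONNECTIONS),
        "-t", f"{DURATION_S}s",
        "-p", "90,99",
        url,
    ]


def run_matrix() -> list[tuple[str, str]]:
    """Every (cluster, traffic pattern) pair: 4 x 3 = 12 runs."""
    return list(product(CLUSTERS, TRAFFIC_PATTERNS))


if __name__ == "__main__":
    # 100 QPS for 10 s is about 1,000 requests per run,
    # or roughly 10 QPS on each of the 10 connections.
    print("requests per run:", QPS * DURATION_S)
    print("QPS per connection:", QPS // CONNECTIONS)
    for cluster, pattern in run_matrix():
        print(cluster, pattern, " ".join(fortio_command()))
```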

Latency Results Snapshot (p90/p99 in ms)

The table below shows the p90 and p99 latencies (in ms) measured for each cluster configuration under each traffic pattern.

What the Data Tells Us About Cilium CNI and AWS CNI

- AWS VPC CNI consistently had the lowest latency across all traffic patterns, especially in the no-Istio scenario.

- The Cilium-only setup (Cluster 2) had the highest latency, particularly under mTLS.

- The hybrid setups (Cilium + AWS CNI) had slightly lower latency than Cilium alone, but not low enough to outperform native AWS VPC CNI.

- Adding kube-proxy to the mix (Cluster 4) slightly improved p99 tail latency in some tests.
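The findings above are relative comparisons against AWS VPC CNI. If you reproduce the test, one simple way to quantify them is percentage overhead versus that baseline at each percentile. The sketch below is an assumed approach, not our analysis pipeline: it computes p90/p99 from raw per-request latencies with the nearest-rank method. Fortio itself interpolates within histogram buckets, so its reported percentiles can differ slightly. The function names are hypothetical.

```python
import math


def percentile(latencies_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not latencies_ms:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(latencies_ms)
    # 1-based rank; multiply before dividing to limit float rounding.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def overhead_pct(candidate_ms: float, baseline_ms: float) -> float:
    """Relative latency overhead of a candidate versus a baseline, in percent."""
    return (candidate_ms - baseline_ms) / baseline_ms * 100


def compare(candidate: list[float], baseline: list[float]) -> dict[str, float]:
    """Overhead of the candidate run at p90 and p99 against the baseline run."""
    return {
        f"p{p}": overhead_pct(percentile(candidate, p), percentile(baseline, p))
        for p in (90, 99)
    }
```

For example, pass the per-request latencies from a Cilium-only run as `candidate` and those from the AWS VPC CNI run under the same traffic pattern as `baseline`; a positive value means the candidate is slower at that percentile.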

Features vs Performance: The Trade-off of Cilium CNI

| Feature | Cilium CNI | AWS VPC CNI |
|---|---|---|
| K8s Network Policy Support | Yes | Yes |
| Service-based Policy | Yes | No |
| Entity-based Policy | Yes | No |
| Protocol-agnostic | Yes | No |
| Service Account-based Policy | Yes | No |
| Compatibility with Istio | Partial | Full |
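To illustrate the service-account-based and entity-based policies from the table, the sketch below builds a `CiliumNetworkPolicy` (`cilium.io/v2`) as a Python dict and prints it as JSON, a format `kubectl apply -f` accepts. It assumes httpbin pods labelled `app: httpbin` listening on container port 8080 and a load-generator service account named `fortio`; the policy name, labels, service account, and port are all hypothetical placeholders. The `io.cilium.k8s.policy.serviceaccount` label and the `fromEntities` field are part of Cilium's policy language.

```python
import json


def httpbin_policy(client_sa: str = "fortio", port: int = 8080) -> dict:
    """CiliumNetworkPolicy allowing ingress to httpbin from one service account.

    All names (policy, labels, service account, port) are hypothetical.
    """
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": "httpbin-allow-fortio", "namespace": "default"},
        "spec": {
            # Pods this policy applies to.
            "endpointSelector": {"matchLabels": {"app": "httpbin"}},
            "ingress": [
                {
                    # Service-account-based rule: Cilium exposes a pod's
                    # service account as this identity label.
                    "fromEndpoints": [
                        {"matchLabels": {"io.cilium.k8s.policy.serviceaccount": client_sa}}
                    ],
                    "toPorts": [
                        {"ports": [{"port": str(port), "protocol": "TCP"}]}
                    ],
                },
                {
                    # Entity-based rule: explicitly allow traffic from the node itself.
                    "fromEntities": ["host"],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(httpbin_policy(), indent=2))
```

AWS VPC CNI has no equivalent of either rule in its standard Kubernetes NetworkPolicy support, which is the gap the table summarizes.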

Although Cilium offers rich security and observability features through its CRDs, it is incompatible with Istio in specific Layer 7 policy scenarios (e.g., split-brain architecture and mTLS conflicts).

Our Recommendation on Cilium vs AWS CNI

If raw performance and compatibility are your highest priorities, especially when using Istio and mTLS, stick with AWS VPC CNI. It offers predictable low-latency communication with full support for the Istio service mesh.

That being said, Cilium is a powerful tool for enhancing network visibility and enforcing policies. You can start by layering Cilium in policy-only mode or as a secondary CNI for selected namespaces, thereby benefiting from its strengths without introducing significant latency overhead.
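One way to layer Cilium on top of AWS VPC CNI is Cilium's CNI chaining mode, in which the AWS plugin keeps handling pod IP addressing and routing while Cilium adds eBPF-based policy enforcement and visibility. The sketch below assembles a `helm install` command with the chaining values we understand Cilium's AWS VPC CNI chaining guide to use (`cni.chainingMode=aws-cni`, `cni.exclusive=false`, `enableIPv4Masquerade=false`, `routingMode=native`, `endpointRoutes.enabled=true`). It is an assumption about a typical setup, not the exact configuration of our test clusters, and it presumes the `cilium` Helm repository has already been added; check the values against the guide for your Cilium version.

```python
import shlex

# Assumed Helm values for running Cilium chained on top of AWS VPC CNI:
# AWS VPC CNI keeps IPAM and routing, Cilium attaches policy enforcement.
CHAINING_VALUES = {
    "cni.chainingMode": "aws-cni",
    "cni.exclusive": "false",
    "enableIPv4Masquerade": "false",
    "routingMode": "native",
    "endpointRoutes.enabled": "true",
}


def helm_install_args(version: str = "1.17.5") -> list[str]:
    """Build a helm install command for Cilium in aws-cni chaining mode."""
    args = [
        "helm", "install", "cilium", "cilium/cilium",
        "--version", version,
        "--namespace", "kube-system",
    ]
    for key, value in CHAINING_VALUES.items():
        args += ["--set", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Print a shell-safe command line for review before running it.
    print(shlex.join(helm_install_args()))
```

Returning an argument list rather than a single string keeps the values safe to pass to `subprocess.run` without shell quoting issues.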

Final Thoughts

Latency is just one part of the puzzle. Security, observability, and policy flexibility are also important considerations. The decision to adopt Cilium over AWS CNI (or vice versa) should align with your architecture goals.

At IMESH, we help teams build the right mix of performance and security for their Kubernetes networking. Want to test your CNI stack or optimize Istio?